Big Data – a little analysis

This post was inspired by a conversation I had with a VC friend a few weeks back, just as he was about to head out to the Structure conference that would be covering this topic.

Big Data seems to be one of the huge industry buzz phrases at the moment. From the marketing it would seem like any problem can be solved simply by having a bigger pile of data and some tools to do stuff with it. I think that’s manifestly untrue – here’s why…

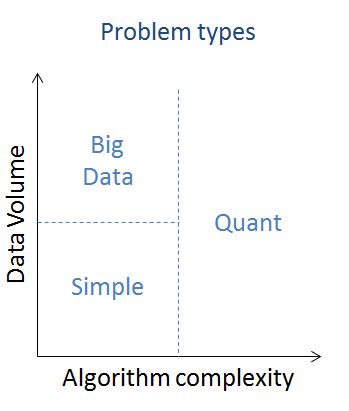

If we look at data/analysis problems there are essentially three types:

Simple problems

Low data volume, simple algorithm(s)

This is the stuff that people have been using computers for since the advent of the PC era (and in some cases before that). It’s the type of stuff that can be dealt with using a spreadsheet or a small relational database. Nothing new or interesting to see here…

Of course small databases grow into large databases, especially when individual or department-scale problems flow into enterprise problems, but that doesn’t change the inherent simplicity. If the stuff that once occupied a desktop spreadsheet or database can be rammed into a giant SQL database, then by and large the same algorithms and analysis can be made to work. This is why we have big iron, and when that runs out of steam we have the more recent move to scale-out architectures.

Quant problems

Any data volume, complex algorithm(s)

These are the problems where you need somebody who understands algorithms – a quantitative analyst (or quant for short). I spent some time before writing this wondering if there was any distinction between ‘small data’ quant problems and ‘big data’ quant problems, but I’m pretty sure there isn’t. In my experience quants will grab as much data (and as many machines to process it) as they can lay their hands on. The trick in practice is striking the right balance between computational tractability and time-consuming algorithmic optimisation, so as to minimise the overall systemic cost[1].
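The systemic cost trade-off (spelled out in footnote [1]) is just arithmetic: hardware spend plus people spend. A minimal Python sketch, assuming a flat annual cost per machine and per quant; the option names and every figure are hypothetical, chosen only to show how more machines and fewer quants can come out cheaper overall.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One hypothetical way of staffing and resourcing a quant problem."""
    name: str
    machines: int
    quants: int


def total_cost(option: Option, machine_cost: float, quant_cost: float) -> float:
    """Systemic cost = hardware spend + people spend (e.g. per year)."""
    return option.machines * machine_cost + option.quants * quant_cost


def cheapest(options, machine_cost: float, quant_cost: float) -> Option:
    """Pick the option with the lowest total systemic cost."""
    return min(options, key=lambda o: total_cost(o, machine_cost, quant_cost))


# Hypothetical figures, for illustration only.
MACHINE_COST = 5_000    # per machine per year
QUANT_COST = 250_000    # per quant per year

options = [
    Option("clever algorithm, small cluster", machines=20, quants=4),
    Option("brute force, big cluster", machines=150, quants=1),
]

for o in options:
    print(o.name, total_cost(o, MACHINE_COST, QUANT_COST))
print("cheapest:", cheapest(options, MACHINE_COST, QUANT_COST).name)
```

Change the two unit costs and the answer can flip, which is exactly why looking only at machine costs (or only at people costs) gives a misleading picture.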

Solving quant problems is an expensive business in both computation and brain power, so it tends to be confined to areas where the pay-off justifies the cost. Financial services is an obvious example, but there are others – reservoir simulation in the energy industry, computational fluid dynamics in aerospace, protein folding and DNA sequencing in pharmaceuticals and even race strategy optimisation in Formula 1.

Big Data problems

Large data volume, simple algorithm(s)

There are probably two sub types here:

- Inherent big data problems – some activities simply throw off huge quantities of data. A good example is security monitoring, where devices like firewalls and intrusion detection sensors create voluminous logs for analysis. Here the analyst has no choice over data volume, and must simply find a means to bring appropriate algorithms to bear. The incursion of IT into more areas of modern life is naturally creating more instances of this sub type. As more things get tagged and scanned and leave a data trail behind them, we get bigger heaps of data that might hold some value.

- Big data rather than complex algorithms – there are cases when the overall performance of a system can be improved by using more data rather than a more complex algorithm. Google are perhaps the masters of this, and their work on machine translation illustrates the point beautifully.
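The three types above boil down to two questions: is the data volume large, and does the problem need a complex algorithm? A minimal Python sketch of that decision, assuming both questions can be answered with a simple yes/no (in reality each is a continuum); the enum, function and example names are hypothetical.

```python
from enum import Enum


class ProblemType(Enum):
    SIMPLE = "Simple problem"
    QUANT = "Quant problem"
    BIG_DATA = "Big Data problem"


def classify(large_volume: bool, complex_algorithm: bool) -> ProblemType:
    """Place a problem in the three-way taxonomy.

    Algorithm complexity is checked first, because quant problems
    occur at any data volume.
    """
    if complex_algorithm:
        return ProblemType.QUANT
    if large_volume:
        return ProblemType.BIG_DATA
    return ProblemType.SIMPLE


# Hypothetical examples loosely following the text.
examples = {
    "departmental spreadsheet": (False, False),
    "race strategy optimisation": (False, True),
    "firewall log analysis": (True, False),
}
for name, (volume, complexity) in examples.items():
    print(f"{name}: {classify(volume, complexity).value}")
```

Note that the sketch has no cell for "large volume, complex algorithm" separate from quant problems, matching the observation that quants will use as much data as they can get.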

So where’s the gap between the marketing hype and reality?

If Roger Needham were alive today he might say:

Whoever thinks his problem is solved by big data, doesn’t understand his problem and doesn’t understand big data[2]

The point is that Google’s engineers are able to make an informed decision between a complex algorithm and using more data. They understand the problem, they understand algorithms and they have access to massive quantities of data.

Many businesses are presently being told that all they need to gain stunning insight that will help them whip their competition is a shiny big data tool. But there can be no value without understanding, and a tool on its own doesn’t deliver that (and it would be foolish to believe that a consultancy engagement to implement a tool helps matters much).

What is good about ‘big data’?

This post isn’t intended to be a dig at the big data concept, or the tools used to manage it. I’m simply pointing out that it’s not a panacea. Some problems need big data, and others don’t. Figuring out the nature of the problem is the first step. We might call that analysis, or we might call that ‘data science’ – perhaps the trick is figuring out where the knowledge border lies between the two.

What’s great is that we now have a ton of (mostly open source) tools that can help us manage big data problems when we find them. Hadoop, Hive, HBase and Cassandra are just some examples from the Apache stable; there are plenty more.

What can be bad about big data?

Many organisations now have in place automated systems based on simple algorithms that process vast data sets – credit card fraud detection being one good example. This has consequences: the process loses visibility, and the system can ignore specific data points in ways that ruin user experience and damage customer relationships. I’ll take this up further in a subsequent post, but I’m sure we’ve all at some stage been a victim of the ‘computer says no’ problem, where nobody can explain why the computer said no, and a common-sense look at the case shows it was obviously a bad call.
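To make the ‘computer says no’ problem concrete, here is a deliberately naive Python sketch. The rule, the threshold multiple and the spending figures are all hypothetical and are not meant to reflect how any real card issuer works. The first function returns a bare yes/no, so it declines a legitimate one-off purchase and leaves nobody able to say why; the second applies the same rule but returns the reason alongside the decision.

```python
from statistics import mean


def simple_fraud_rule(history, amount, multiple=3.0):
    """Hypothetical rule: approve only if amount <= multiple x average past spend.

    Returns just True/False, so whoever sees the decision cannot tell why.
    """
    return amount <= multiple * mean(history)


def explained_fraud_rule(history, amount, multiple=3.0):
    """Same rule, but also returns a human-readable reason."""
    limit = multiple * mean(history)
    if amount > limit:
        return False, f"amount {amount:.2f} exceeds limit {limit:.2f}"
    return True, "within normal spending pattern"


# A customer who usually spends about 40 books a 600 holiday flight.
history = [35.0, 42.0, 38.0, 45.0]
print(simple_fraud_rule(history, 600.0))
print(explained_fraud_rule(history, 600.0))
```

Exposing the reason doesn't make the rule any smarter, but it does make the bad call visible and explainable to a human who can apply common sense.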

Conclusion

For me big data is about a new generation of tools that allow us to work more effectively with large data sets. This is great for people who have inherent (and obvious) big data problems. Where it’s less obvious, some analysis work is needed to understand whether analysing a larger data set would deliver more value than using more complex algorithms.

[1] I’ve come across many IT people who only look at the costs of machines and the data centres they sit within. Machines in small numbers are cheap, but lots of them become expensive. Quants (even in small numbers) are always expensive, so there are many situations where the economic optimum is achieved by using more machines and fewer quants.

A good recent example of this is the news that Netflix never implemented the winning algorithm for its $1m challenge.

[2] Original: ‘Whoever thinks his problem is solved by encryption, doesn’t understand his problem and doesn’t understand encryption’


Great clarification Chris.

Big Data has become yet another misunderstood industry buzzword used by new and outdated companies to pitch their products to unsuspecting and (mostly) uneducated users who have not really thought about their problem space.

I have seen loads of Big Data implementations where the basic reasoning was “we have lots of data so we need Big Data tools”… Or, worse still, implementations where Big Data tools were used to wrest control away from quants and other information specialists.

Unsurprisingly, all of them failed to achieve any measurable value and wasted millions of dollars.

As you say, Big Data does have its uses, but it may not apply to all data-related problems, and potential users have to think about it and differentiate the issues to be resolved.

There was a great post on this topic, ‘Beware The Hype Over Big Data Analytics’, over at Seeking Alpha, which managed to cover a lot of what I had in the back of my mind as I pulled this post together.