Wireless doorbell extension

TL;DR

A relay board from eBay combined with a cheap wireless doorbell from Amazon allowed me to extend my existing wired doorbell.

Background

I got the loft of my house converted into a home office. I love it up there, but if I shut the door (to keep noise out) then I can’t hear the doorbell (or anybody shouting up the stairs for me).

Some research led me to wired to wireless doorbell extenders such as the Friedland D3202N, but they didn’t seem like an ideal choice due to:

- Being hard to come by (obsolete?).

- Lock in to a given (expensive) wireless bell system.

- An extra box to mount near the existing bell (and wires to hide).

I also checked out the Honeywell DC915SCV system, but that had many of the same flaws/limitations.

What I really wanted was a setup where I could have a bell in my office, another in $son0’s room, integration with the existing doorbell, and a summoning button in the kitchen/living room.

The bells

I found the 1byone Easy Chime system on Amazon, which seemed to offer multiple bells and bell pushes that could work together. I bought one to see how hackable the bell push would be, and the answer was good enough – the button is a standard push button surface mounted onto the PCB (and thus very easy to remove/replace). I also found pads on the PCB marked SW2 that aren’t connected, which seem to change the send code.

Having confirmed that the system would do what I wanted I ordered a second bell and push.

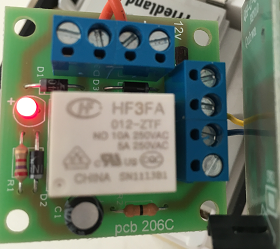

Relay board

Now I needed some way to take the 12V AC from the wired bell system and turn it into a button push. A solid state system would have been nice, but I couldn’t find anything off the shelf, and I wasn’t going to design something from scratch[1]. The ‘12v ac/dc Mini Handy little Relay board‘ I found on eBay seemed to be ideal.

Putting it all together

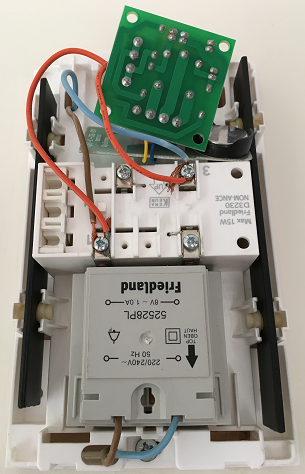

The doorbell has space for some batteries that isn’t used, so I was able to tuck the relay and wireless push in there.

I’d have liked to wire things up so that the relay was activated when somebody pushed the bell, and I probably could have done that if I’d dug into the system; but with the available connections the best I could do was to wire it across the wired switch, so the relay is spending most of its time on (with consequential power draw, heat, and expected lower component life… but it works).

So the relay is wired across connections 1 and 3 of the wired bell, to the same places as the wired bell push. The wireless bell push is connected to the C (common) and NC (normally closed) connections on the relay board. When the doorbell is pushed the relay briefly powers down, the NC contact closes, and the wireless bells are activated.
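The wiring logic is easy to get backwards, so here is a minimal Python sketch of it as a truth table. The function names are made up for illustration, and the model assumes that pressing the wired push removes the voltage across the relay coil (which is what wiring the coil across the switch implies).

```python
def relay_energised(wired_button_pressed: bool) -> bool:
    # The coil sits across the wired bell push, so it is held on while the
    # push is open and drops out while the push is pressed (assumption).
    return not wired_button_pressed


def nc_contact_closed(coil_energised: bool) -> bool:
    # A normally closed (NC) contact is closed only while the coil is off.
    return not coil_energised


def wireless_push_triggered(wired_button_pressed: bool) -> bool:
    # The wireless bell push is wired across C and NC on the relay board.
    return nc_contact_closed(relay_energised(wired_button_pressed))


if __name__ == "__main__":
    for pressed in (False, True):
        print(pressed, relay_energised(pressed), wireless_push_triggered(pressed))
```

The double inversion is the reason for using NC rather than NO (normally open): the relay is on at rest, so the contact that closes when it drops out is the one that mimics a button press.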

Conclusion

The entire system cost £26.23 and took no longer to put together than I expect it would have taken to install an off the shelf wired to wireless extender. The key parts fit inside the existing bell, so no new boxes and wires to worry about. I’m very happy with the outcome.

Note

[1] This is where one of my smart hardware hacking friends points out that I could have used a 555, a twig and a rusty nail.

Filed under: howto | 2 Comments

Tags: bell, door, doorbell, relay, wired, wireless

Skiing in Andorra

I’ve spent the last two winter half term breaks in Andorra skiing with my daughter and some neighbours. It’s been great both times.

Ski areas

Andorra has two ski areas. On my first trip I went to Arinsal, which is part of the Vallnord area. More recently I went to Pas De La Casa, which is part of Grandvalira area.

Arinsal is very much a beginner resort, which was fine for my daughter just starting out, but I was pretty bored of it after a week (and I’d worked my way through most of connected Pal). So although I loved the town and its restaurants, I’m not in any rush to go back (though I never got the bus link over to Arcalis to try that out).

Pas De La Casa is just the opposite. It needs confidence on red runs to get around it (and a willingness to take on blacks to get full advantage of the place). We were able to ski out pretty much all of the Pas and Grau Roig sectors, but the bottleneck of the Cubil lift out of Grau Roig meant that we left a good chunk of Soldeu and El Tarter sectors untouched.

Getting there

Transfers are available from Barcelona and Toulouse airports. I went via Barcelona both times due to better timings and prices for flights. Shared buses can be booked at Arinsal.co.uk and take about four hours each way (though the border crossing back to Spain can cause delays, especially later in the day – my neighbours missed their flight home last year).

Accommodation

I stayed at the Hotel Arinsal on the first trip, which was absolutely made by receptionist and barman Danny (and would likely be a totally different experience without him).

For the Pas trip I went with the Hotel Les Truites, which certainly lived up to its #1 billing on TripAdvisor. My neighbours stayed at Hotel Camelot, which seemed OK, and had a decent bar (and happy hour) for getting together apres ski. Dinner at the Camelot wasn’t great though – we only used it once because of a ski injury to one of our group making it hard to go further afield. Some friends stayed at the very fancy Hotel Kandahar, which they loved (not least for its well appointed but surprisingly reasonable bar).

Equipment

For both trips I ordered ski passes and ski/boot/helmet hire from Arinsal.co.uk, and both times around I treated myself to the top of the range ski package. In Arinsal I got Racetiger SC Uvo, which were excellent, but nowhere near as good as the brand new pair of Lacroix Mach Carbon I had last week, easily the most fantastic skis I’ve ever had the pleasure of using.

Good value

I found Arinsal surprisingly inexpensive (after spending most of my previous ski trips in Alpine French resorts). Pas seemed a little more expensive, but not so much that it changed any behaviour – it wasn’t pocket breaking to eat out on the slopes every day for lunch and the town restaurants every night for dinner.

Conclusion

Arinsal was ideal for my daughter to learn to ski, but it lacks variety for more experienced skiers. I’d go back to Pas again, though maybe I’d be better off choosing El Tarter or Soldeu for an alternative entry point to the Grandvalira area.

Filed under: travel | 6 Comments

Tags: Andorra, Arinsal, Pas De La Casa, skiing, skis

Background

The last two interviews that I’ve done for InfoQ have been with Anil Madhavapeddy and Bryan Cantrill, and in both cases we talked about unikernels. Anil is very much pro unikernels, whilst Bryan takes the opposing view.

A long and rambling Twitter thread about oncoming architecture diversity in Docker images took a turn into the unikernel cul-de-sac the other day, and I was asked what I thought. It wasn’t something I was willing to address in 140 characters or less, so this post is here to do that.

Smoking the whole pack

Bryan made a point that anybody advocating unikernels should be ‘forced to smoke the whole pack’ and that soundbite was used by a bunch of people to promote the interview. It wasn’t traditional clickbait, but it seemed to have the desired effect. I must however confess that I was somewhat uncomfortable about being so closely associated with that quote – it’s what Bryan said, not my own opinion on the matter.

Debugging

The point behind Bryan’s position is that it’s basically impossible to debug unikernels in situ – they either work or they don’t. As most of us know that software fails all the time it should therefore be obvious that software that can’t be debugged is a major hazard and QED it shouldn’t ever be used.

In which Bryan makes Anil’s point for him

Elsewhere in the interview Bryan says that we’re surrounded by correct software:

Correct software is timeless, and people say “Yes, but software is never correct”, that’s not true, there is lots of correct software, there is correct software every day that our lives silently come into contact with correct perfectly working software.

Arguably if you have correct software in a unikernel then there’s no need to debug it, and the argument that being unable to debug it effectively in production is a major problem starts to subside.

So how do we make correct software?

That’s probably the multi $Bn question for many industries, and at this point the formal methods wonks that do safety critical stuff for planes and cars start to poke their noses in and it all gets very complicated…

But in general, ‘keep it simple’, ‘keep it small’ and ‘avoid side effects’ are all signposts on the path to righteousness, and I can almost hear Anil saying – ‘and that’s why we use OCaml’.

My conclusion

I don’t think unikernels are a general panacea; Bryan makes some very good points. I do however think that there are some use cases, where the software is small, simple, and most importantly likely to be correct, for which unikernels can be appropriate.

Feel free to continue the argument/debate in the comments below.

Filed under: Docker, InfoQ news, technology | 2 Comments

Tags: Anil Madhavapeddy, Bryan Cantrill, Docker, InfoQ, OCAML, unikernel, Unikernels

Copying GPT SSDs

TL;DR

Copying the contents of one SSD to a larger one (and making use of the extra space) should be simple, but there are a few gotchas. A combination of AOMEI Partition Assistant Standard and some command line tools got the job done though.

Background

SSDs have been reasonably cheap for some time, but now they’re really cheap. In the run up to Christmas I got a Sandisk Ultra II 960GB SSD for less than £150 (and right after Christmas they were on sale at Amazon for even less, so I got a couple for the in laws).

Copying rig

I used one of my old NL40 HP Microservers running Windows 7 to do the copying as it’s easy to get disks in and out of it. To put 2.5″ drives into the 3.5″ bays I got a couple of Icydock adaptors, and to get mSATA drives in place I used an existing mSATA to 2.5″ adaptor, and bought a new one[1], which is uncased – but fine for the job.

Right tool for the job

That new SSD became the base of the pyramid for a drive shuffle that rippled through a bunch of my PCs. For the older systems I could have easily used my trusty old version 11 copy of Paragon Partition Manager[2], but for the newer systems using GUID partition tables (GPT) I needed something else. I found AOMEI Partition Assistant Standard did the job (mostly).

Almost there

AOMEI works pretty much the same as the Paragon Partition Manager I was used to, though if anything the copy disk function was easier to use (and offered a means to resize the main partition as part of the operation, which was the whole point of the exercise). The problem that I found with AOMEI is that it didn’t copy over the partition types and attributes, meaning that I had to fix things up with the DISKPART utility.

Fixing type and attributes

I launched two CMD windows using ‘Run As Administrator’, and then compared the source disk (e.g. disk 1) with the destination disk (e.g. disk 2). This is what I see on the source disk:

    DISKPART> select disk 1

    Disk 1 is now the selected disk.

    DISKPART> list partition

      Partition ###  Type              Size     Offset
      -------------  ----------------  -------  -------
      Partition 1    Recovery           300 MB  1024 KB
      Partition 2    System              99 MB   301 MB
      Partition 3    Reserved           128 MB   400 MB
      Partition 4    Primary            930 GB   528 MB

    DISKPART> select partition 1

    Partition 1 is now the selected partition.

    DISKPART> detail partition

    Partition 1
    Type    : de94bba4-06d1-4d40-a16a-bfd50179d6ac
    Hidden  : Yes
    Required: Yes
    Attrib  : 0X8000000000000001
    Offset in Bytes: 1048576

      Volume ###  Ltr  Label        Fs     Type        Size     Status     Info
      ----------  ---  -----------  -----  ----------  -------  ---------  --------
    * Volume 3         Recovery     NTFS   Partition    300 MB  Healthy    Hidden

    DISKPART>
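The `Attrib` value is a 64-bit mask, and it can help to see which bits are set before copying it across. Here is a minimal Python sketch that decodes it. The bit meanings are the commonly documented GPT attribute flags (bit 0 is defined by the GPT specification, bits 60 to 63 are Microsoft’s flags for its own partition types); the function name and table are made up for illustration, not something DISKPART provides.

```python
# Commonly documented GPT attribute bits (assumed to cover the cases
# that matter here; other bits are ignored by this sketch).
GPT_ATTRIBUTE_BITS = {
    0: "platform required",
    60: "read-only",
    61: "shadow copy",
    62: "hidden",
    63: "no default drive letter",
}


def decode_gpt_attributes(value: str) -> list[str]:
    """Return the names of the known bits set in a hex attribute string."""
    bits = int(value, 16)  # accepts the 0X prefix that DISKPART prints
    return [
        name
        for bit, name in sorted(GPT_ATTRIBUTE_BITS.items())
        if (bits >> bit) & 1
    ]


if __name__ == "__main__":
    print(decode_gpt_attributes("0X8000000000000001"))
```

For the recovery partition above this reports ‘platform required’ and ‘no default drive letter’, which lines up with DISKPART showing the partition as Required.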

To make the partition on the target disk have the same attributes (in my second CMD window running DISKPART):

    DISKPART> select disk 2

    Disk 2 is now the selected disk.

    DISKPART> select partition 1

    Partition 1 is now the selected partition.

    DISKPART> gpt attributes=0X8000000000000001

    DiskPart successfully assigned the attributes to the selected GPT partition.

    DISKPART> set id=de94bba4-06d1-4d40-a16a-bfd50179d6ac

    DiskPart successfully set the partition ID.

    DISKPART>

and then work through the other partitions ensuring that the type and attributes are set accordingly.
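Working through several partitions by hand is error prone, so one option is to generate the DISKPART commands from the values noted down from the source disk. The following is a minimal Python sketch under that assumption; `build_diskpart_script` is a hypothetical helper, and the only values shown are the ones for partition 1 above (the type and attributes for the other partitions need to be read from your own source disk).

```python
def build_diskpart_script(disk: int, partitions: dict[int, tuple[str, str]]) -> str:
    """Build DISKPART commands that set type and attributes per partition.

    partitions maps partition number -> (type GUID, attributes) exactly as
    shown by 'detail partition' on the source disk.
    """
    lines = [f"select disk {disk}"]
    for number, (type_guid, attributes) in sorted(partitions.items()):
        lines += [
            f"select partition {number}",
            f"gpt attributes={attributes}",
            f"set id={type_guid}",
        ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Values for partition 1 taken from the source disk output above.
    source = {1: ("de94bba4-06d1-4d40-a16a-bfd50179d6ac", "0X8000000000000001")}
    print(build_diskpart_script(2, source), end="")
```

The output can be pasted into the second DISKPART window, or saved to a text file and run with DISKPART’s `/s` option. Either way, double check the disk number first, since these commands change whichever disk is selected.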

Fixing boot

Even after all of that I ended up with disks that wouldn’t boot. In each case I needed to boot from a Windows install USB (for the correct version of Windows[3]) and run the following on the repair command line (automatic repair never worked):

    bootrec /fixmbr
    bootrec /fixboot
    bootrec /rebuildbcd

Notes

[1] I knew I’d be copying from mSATA to mSATA at various stages, which is why I needed two adaptors. I could have introduced an intermediate drive, but that would have just added time and risk, which wasn’t worth it for the sake of less than £10.

[2] A little while ago I gave version 12 a try, but it seemed to be lacking all of the features I found useful in version 11.

[3] My father in law’s recent upgrade to Windows 10 (when I thought he was still on 8.1) caused a small degree of anxiety when the /scanos and /rebuildbcd commands kept returning “Total identified Windows installations: 0”.

Filed under: howto | 3 Comments

Tags: attributes, clone, copy, diskpart, GPT, migrate, partition, ssd, type, Windows

“Installation is a Software Hate Crime” – Pat Kerpan, then CTO of Borland circa 2004.

Today’s hate – meta installers

I’ve noticed a trend for some time: when downloading an installer, rather than being the 20MB or so for the actual thing I’m installing, it’s just a 100KB bootstrap that then goes off and downloads the actual installer from the web.

I’ve always hated this, because if I’m installing an app on multiple computers then it means waiting around for multiple downloads (rather than just running the same thing off a share on my NAS). This is why most good stuff comes with an option for an ‘offline’ installer intended for use behind enterprise firewalls.

One possibly good reason for this approach is that you always get the latest version. That however is a double edged sword – what if you don’t actually want the latest version? What if version 10.5 is an adware laden, anti virus triggering turd (I’m looking at you DivX) and you want to go back to version 10.2.1 that actually worked? Bad luck – you don’t actually have the installer for 10.2.1.

Yesterday’s great hope – virtual appliances

When I talked to Pat a few years later about the concept of virtual appliances he figured out straight away that it would ‘solve the installation problem’. Furthermore he went and built Elastic Server on Demand (ESoD) to help people make and deploy virtual appliances.

Virtual appliances are still hugely useful, as anybody launching an Amazon Machine Image (AMI) out of the AWS Marketplace or using Vagrant will tell you. Actually those people won’t tell you – using virtual appliances has become so much part of regular workflows that people don’t even think of using ‘virtual appliances’ and the terminology has all but disappeared from regular techie conversation.

Virtual appliances had a problem though – there were just so many different target environments – every different cloud, every different server virtualisation platform, every different desktop virtualisation platform, every different flavour of VMware. Pat and the team at Cohesive bravely tried to fight that battle with brute force, but thing sprawl eventually got the better of them.

Today’s salvation – Docker

Build, Ship, Run is the Docker mantra.

- Build – an image from a simple script (a Dockerfile)

- Ship – the image (or the Dockerfile that made it) anywhere that you can copy a file

- Run – anywhere with a reasonably up to date Linux Kernel (and subsequently Windows and SmartOS)

A Docker image is a virtual appliance in all but name[1], but the key is that there’s only one target – the Linux Kernel – so no thing sprawl to deal with this time[2].

The most important part of this is Docker Hub – a place where people can share their Docker images. This saves me (and everybody else using it) from having to install software.

If I want to learn Golang then I don’t have to install Go, I just run the Go image on Docker Hub.

If I want to use Chef then I don’t have to install it, I just run the Chef image on Docker Hub.

If I want to run the AWS CLI, or Ansible, or build a kernel for WRTNode[3] then I don’t have to Yak shave an installation, I can just use one that’s already been done by somebody else.

Docker gives you software installation superpowers

Docker gives you software installation superpowers, because the only thing you need to install is Docker itself, and then it’s just ‘docker run whatever’.
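To make ‘docker run whatever’ a little more concrete, here is a minimal Python sketch that assembles such a command line. The helper name `docker_run_command` is made up for illustration, and `golang` is assumed to be the name of the Go image on Docker Hub; `--rm`, `-v` and `-w` are standard `docker run` options.

```python
import shlex


def docker_run_command(image, command, workdir=None):
    """Build the argv for a throwaway container (hypothetical helper)."""
    argv = ["docker", "run", "--rm"]  # --rm removes the container on exit
    if workdir is not None:
        # Mount a host directory at /work and run the command from there.
        argv += ["-v", f"{workdir}:/work", "-w", "/work"]
    return argv + [image] + list(command)


if __name__ == "__main__":
    # Assumed image name; this prints the command rather than running it.
    print(shlex.join(docker_run_command("golang", ["go", "version"])))
```

The sketch only builds the command, so nothing is installed or pulled by running it. Passing the resulting list to `subprocess.run` would start the container on a machine that has Docker installed.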

This is why I’ll probably not ever get myself another PC running Windows, because I’ve done enough installing for this lifetime.

Notes

[1] Docker images and Xen images are basically just file system snapshots without kernels, so there’s precious little to tell between them.

[2] This isn’t strictly true now that Windows is on the scene – so 2 targets; and then there’s the ARM/x86 split – so at least 4 targets. Oh dear – this binary explosion could soon get quite hard to manage… the point is that there’s not a gazillion different vendor flavours. Oh look – CoreOS Rocket :0 Ah… now we have RunC to bring sense back to the world. Maybe the pain will stop now?

[3] These are all terrible examples, because they’re things where I made my own Docker images (so they’re in my page on Docker Hub), but even then I was able to stand on the shoulders of giants, and use well constructed base images whilst relying on Dockerfile to do the hard work for me.

Filed under: Docker | 2 Comments

Tags: divx, Docker, installation, installer, virtual appliance

Three days with Bryan Cantrill

On my first day with Bryan Cantrill he did a wonderful (and very amusing) presentation on Debugging Microservices in Production on the containers track at QCon SF.

On my second day with Bryan Cantrill we talked about Containers, Unikernels, Linux, Triton, Illumos, Virtualization and Node.js – it was something of a geekfest[1].

On my third day with Bryan Cantrill I said, ‘if I see you tomorrow something’s gone terribly wrong for both of us’.

[1] If you enjoy that interview then you’ll probably like the one I did with John Graham-Cumming too (and maybe all of the others).

Filed under: Docker, technology | Leave a Comment

Tags: Bryan Cantrill, containers, Docker, Illumos, Linux, node.js, Triton, Unikernels

Moving on from Cohesive Networks

I’m writing this on my last day as CTO for Cohesive Networks, and by the time it’s published I’ll have moved on to a new role as CTO for Global Infrastructure Services at CSC.

Looking Back

It’s been a pretty incredible (almost) three years at Cohesive.

Year 1 – focus on networking. When I joined Cohesive in March 2013 we had a broad product portfolio covering image management, deployment automation and networking. It was clear however that most of our customers were driven by networking, and hence that was what we should concentrate our engineering resources and brand on. We finished 2013 with a strategic commitment to put all of our energy into VNS3, and in many ways that transition ended with renaming the company to Cohesive Networks at the beginning of this year.

Year 2 – containers everywhere. By the summer of 2013 we had a number of customers clamouring for additional functions in VNS3. This was generally for things they were running in ancillary VMs such as load balancing, TLS termination and caching. Putting all of those things into the core product (and allowing them to be customised to suit every need) was an impossible task. Luckily we didn’t need to do that; the arrival of Docker provided a way to plug in additional functions as containers, giving a clean separation between core VNS3 and user customisable add ons. The Docker subsystem went into VNS3 3.5, which we released in April 2014. This turned out to be a very strategic move, as not only did it shift VNS3 from being a closed platform to an open platform, but it also allowed us and our customers to embrace the rapidly growing Docker ecosystem.

Year 3 – security. By the end of 2014 some customers were looking at the NIST Cyber Security Framework and trying to figure out how to deal with it. Section PR.AC-5 ‘Network integrity is protected, incorporating network segregation where appropriate’ was of particular concern as organisations realised that the hard firewall around the soft intranet no longer provided effective defence. It was time for the cloud network security model to return home to the enterprise network, and VNS3:turret was born to provide curated security services along with the core encrypted overlay technology.

What I’ve learned

The power of ‘no’ – what something isn’t. Products can be defined as much by what they don’t do as what they do. Many products that I see in the marketplace today are the integral of every (stupid) feature request that’s ever been made, and they end up being lousy to work with because of that. VNS3 doesn’t fall into that trap because we spent a lot of time figuring out what it wasn’t going to be (as well as plenty of time working on what it is and will be).

Write less software. This is a natural follow on from what something isn’t, as you don’t have to code features that you decide not to implement. But even when you do decide to do something, writing code isn’t necessarily the best way to get things done. The world of open source provides a cornucopia of amazing stuff, and it’s often much better to collaborate and integrate rather than cutting new code (and creating new bugs) from scratch.

CTOs are part of the marketing team. I’d previously observed CTOs elsewhere that seemed to spend most of their visible time on marketing, and I think that’s become ever more prevalent over the past few years. There’s little point to a commercial product that nobody knows about, and successful communities require engagement. It’s been fantastic to work with our customers, partners, prospects, industry associations, and open source communities over the past few years.

What’s next

I’m going to one of the world’s biggest IT outsourcing companies just as three of the largest transitions our industry has ever seen start to hit the mainstream:

- Cloud Computing – is now consuming around half of new server CPU shipments, marking a tilt in the balance away from traditional enterprise data centres.

- Infrastructure as code – is just a part of the shift towards design for operations and the phenomenon that’s been labelled ‘DevOps’, bringing changes and challenges across people, process, and technology.

- Open source – not only as a replacement for proprietary technologies or the basis of software as a service, but as a key part of some business strategies.

I hope that my time at Cohesive has prepared me well for helping the new customers I’ll meet deal with those transitions.

This also isn’t the end of my involvement with Cohesive, as I’ll stay involved via the Board of Advisors.

This post originally appeared on the Cohesive Networks blog titled CTO Chris Swan Moving On

Filed under: CohesiveFT | 2 Comments

Tags: Cohesive, CSC, CTO

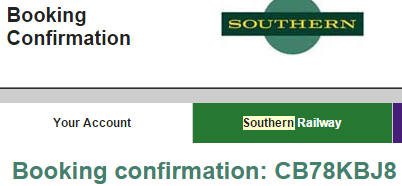

Southern Railway recently upgraded their train ticket buying website. The new user interface (UI) is very pretty, and I would guess it’s an easier place to buy train tickets online if you’ve never done that before.

If you buy tickets frequently, and particularly if you need receipts for expenses then it’s a user experience (UX) disaster. Here’s how…

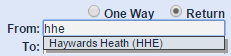

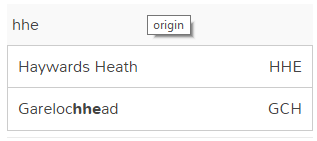

If you know the short code for your station

I live in Haywards Heath, which has a short code of HHE. In the old setup (still used by South Eastern trains) this is what happens:

Southern’s new site also tries to offer me Garelochhead (some hundreds of miles outside their franchise) because it has the letters ‘hhe’ in it.

On my first try using the new site I managed to accidentally select Garelochhead, and boy are those trains to London expensive, slow and infrequent.
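For what it’s worth, ranking an exact short code match above everything else isn’t hard. Here is a minimal Python sketch of one way a station lookup could behave; it isn’t how either site is actually implemented. The function name and the two-entry station table are made up for illustration, and the code given for Garelochhead (GCH) is my assumption.

```python
# Tiny illustrative table: short code -> station name.
# HHE is from the text above; GCH for Garelochhead is an assumption.
STATIONS = {"HHE": "Haywards Heath", "GCH": "Garelochhead"}


def suggest_stations(query, stations):
    """Return station names, with an exact short code match winning outright."""
    q = query.strip().lower()
    if not q:
        return []
    exact = [name for code, name in stations.items() if code.lower() == q]
    if exact:
        return exact  # the user typed a short code, so offer nothing else
    names = sorted(stations.values())
    starts = [n for n in names if n.lower().startswith(q)]
    contains = [n for n in names if q in n.lower() and n not in starts]
    return starts + contains  # prefix matches first, then substring matches


if __name__ == "__main__":
    print(suggest_stations("hhe", STATIONS))
    print(suggest_stations("hay", STATIONS))
```

With this ordering, typing ‘hhe’ offers only Haywards Heath, even though the letters also appear in the middle of Garelochhead.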

One way

The old default used to be a return journey. The new one is a single. I find it hard to believe that’s a decision supported by data – people generally want to go and come back.

Too specific

The new site makes you choose a train for each leg of the journey, which was only required if making seat reservations on the old site. Not only is this unnecessary and potentially confusing when buying a ticket or travelcard that offers some choice over when to travel, but it leads to…

The fraudulent receipt

Having made you specify a train for each leg of a journey that information then makes it onto the order confirmation that I expect many people (like me) use as a receipt[1]. That’s fine if you actually end up taking those exact trains, but what if you don’t? I can see a situation arising where a boss approving expenses knows that a particular train wasn’t taken, so the receipt turns into a lie.

The receipt should be for the ticket bought, not the journey (forcibly) planned.

Just take my money

Southern used to store my credit card information as part of my account, but now they make me type it in every time.

and do you really need a phone number?

I can’t think of a time that I’d ever want an online ticket provider to call me.

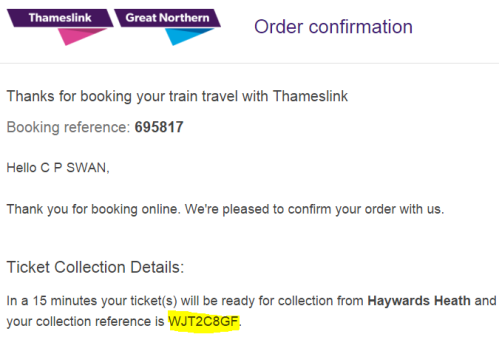

A booking reference AND a collection reference

and neither of them in the email subject.

When picking up tickets bought online the machine needs a collection reference to be typed in (on the not awfully responsive touch screen keyboards)[2]. This used to be presented at the top of the email:

Now the first thing presented is some totally irrelevant ‘booking reference’ and the vital ‘collection reference’ is further down.

I’d also note that the email comes from Thameslink – not Southern, whose website I bought the ticket at. Some extra confusion for those unfamiliar with exactly how private enterprise has taken over our railway networks.

For the record the right thing to do here is put the number I need for the machine into the email subject – so I don’t have to actually open the email to find it when I need it.

Conclusion

The new Southern Railway ticketing website might look prettier than the old one, it might even be friendlier for occasional users, but it’s a disaster for frequent users like me and a terrible example of how too much attention to user interface can ruin user experience.

Notes

[1] Arguably the ticket itself is a receipt, but that’s not much help when it’s been swallowed by a ticket machine after a return journey.

[2] I have in the past been able to collect tickets just by presenting my credit card, which seems to me how things should work; and I’m struggling with what the threat model looks like for people picking up tickets with a card that ties to an order but that’s somehow illegitimate without the 8 character code presented at the time of the order (which a fraudster with card details would see anyway).

Filed under: could_do_better, grumble, technology | 1 Comment

Tags: Southern, ticket, train, UI, UX

The Dell Lesson on Trust Scope

Dell has been in trouble for the last few days for shipping a self signed CA ‘eDellRoot’[1] in the trusted root store on their Windows laptops. From a public relations perspective they’ve done the right thing by saying sorry and providing a fix.

This post isn’t going to pick apart the rights and wrongs – that’s being done to death elsewhere. What I want to do instead is examine what this means from the perspective of trust boundaries.

Fine On Your Own Turf

It’s completely acceptable to use private CAs (which might be self signed) within a constrained security scope. This is regular practice within many enterprises. Things can get a little underhand if those CAs are being used to break TLS at corporate proxies, but usually there’s a reason for this (e.g. ‘data leakage prevention’) and it’s flagged up front as part of employee contracts etc.

Where Dell went wrong here was doing something that had a limited scope to them ‘it’s just to help support our customers’, but global scope in implementation[2].
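The global scope is easy to see from code: anything in the machine’s trusted root store is trusted by every application that uses it. Here is a minimal Python sketch that looks through the CA certificates Python loads by default for particular names. The helper names are made up for illustration, it assumes the certificates’ common names match the names reported for the Dell certificates, and on some platforms `get_ca_certs()` returns only the certificates loaded so far, so an empty result isn’t proof of absence.

```python
import ssl


def common_name(name_field):
    """Pull the commonName out of an ssl-style subject/issuer tuple."""
    for rdn in name_field:
        for key, value in rdn:
            if key == "commonName":
                return value
    return None


def find_roots_by_name(ca_certs, suspect_names):
    """Return common names of CA certs whose name is in suspect_names."""
    wanted = {name.lower() for name in suspect_names}
    hits = []
    for cert in ca_certs:
        cn = common_name(cert.get("subject", ()))
        if cn and cn.lower() in wanted:
            hits.append(cn)
    return hits


if __name__ == "__main__":
    context = ssl.create_default_context()  # loads the system trust store
    # Names as reported in this post; assumed to be the certs' common names.
    print(find_roots_by_name(context.get_ca_certs(), ["eDellRoot", "DSDTestProvider"]))
```

A name check like this is only a quick look, not a security control, since a hostile certificate can use any name it likes.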

Who Says?

When a company adds a CA certificate to its corporate desktop image then not only is the scope limited to users within that company, but the decision making process and engineering to put it there falls onto a relatively small number of people. That small group will be able to reason about which certificates to add in (and maybe also which to pull out).

It’s completely opaque who at Dell (or Lenovo etc.) got to make the call on adding in their CA to their OEM build, but I’m guessing that this wasn’t a decision that got run by a central security team (otherwise I expect this would never have happened).

The Lesson

Something that can impact many people (perhaps even a global population) should not be subject to the whims of individual product managers. Mechanisms need to be set up to identify security/privacy sensitive areas, and provide governance over changes to them.

Note

[1] It now seems that there’s also a second certificate ‘DSDTestProvider’.

[2] That mistake was further compounded by making the private key available, which thus amounted to a compromise toolkit for anybody to stage man in the middle attacks against Dell customers, a pattern seen previously with Lenovo’s Superfish adventure. Dell’s motivations may have been purer, but the outcome was the same.

Filed under: could_do_better, security | Leave a Comment

Tags: CA, certificate, Dell, trust