TL;DR

Coding is no longer the constraint. It’s now cheaper than ever to make software. But there are supply side constraints on innovation, and getting apps to market. Who dreams up something worth making? How do apps get in front of users? There’s also a demand side constraint on adoption – how do people learn about the new possibilities available to them?

Background

I forwarded this post about multi-agent orchestration (and token exhaustion) to a colleague, and it got me thinking about where the bottlenecks are if agents can code whilst you sleep.

The irony here might be that it was Gene Kim who put Michael Tomcal’s post into my timeline, and he knows a thing or two about Theory of Constraints after re-spinning ‘The Goal‘ as ‘The Phoenix Project‘ and again as ‘The Unicorn Project‘.

Theory of Constraints

Let’s back up a moment… why am I even talking about constraints?

I’m very much a disciple of Theory of Constraints, through the work of Gene Kim and others in the DevOps community. It was the central organising principle for my work at DXC Technology, and continues to be a lens that I often peek at the world through.

Most systems will have a small number of constraints, and it’s a good idea to figure out which is the primary constraint; because if you optimise anywhere except for that constraint you’ll either be piling up work faster at the choke point, or building a wider highway down the road from where all the traffic is stuck.

Constraints and Software

Building software is expensive. I know that because I work in an industry where people get paid a lot (relative to say nurses and teachers, or even doctors and professors). Just take a look at levels.fyi for data.

We often conflate software engineering with coding, but is coding actually the constraint? Over my time in the industry there’s been a succession of (often overlapping) changes that have brought down the cost of coding:

- Higher level languages

- Integrated Development Environments

- Context aware autocomplete

- Visual programming

- Component libraries

- Open Source Libraries

- Outsourcing / offshoring / nearshoring

- No Code / Low Code

- AI coding assistants

- Agentic development

Getting from idea to code is cheaper than it’s ever been. But then the cost of getting from idea to code has kept falling (something like) 10x per decade, so arguably no seismic shift is happening.

Where do the ideas come from?

OK, so it’s cheaper than ever to get from idea to code. But who’s even having the ideas, and are they good ideas?

Richard Seroter recently wrote about an agentic adventure with ‘Will Google Antigravity let me implement a terrible app idea?‘. Refuting Betteridge’s Law, the answer here is yes. The AI tools let him implement a terrible app idea. They also let him implement the app terribly, but that’s probably material for another post, another time (meanwhile there’s discussion over on LinkedIn).

Why didn’t Richard implement a great app to show off Antigravity? My guess is that he didn’t have an idea for a great app formed an ready to go; whilst it was easy to dream up a terrible app.

The limit case – personalised apps for everything

I sometimes like to wonder what the limit case is for something – if we take it to the ultimate extreme. In this case, “what if everybody could imagine up perfectly customised apps for their every need?”.

It’s clearly ludicrous, because most people don’t even customise the settings on the apps they have. They don’t have the time, or the inclination to even learn what’s possible; which is why sensible defaults are so important, because that’s what most people will use.

People just want to get on with their lives and work, with minimal friction imposed by the tools they choose to help them with that.

The innovators and change makers spot problems they feel obliged to solve – itches they have to scratch. Everybody else is glad that somebody else is doing that work.

Supply side constraint #1 – innovation

This brings us to a limiting factor – a constraint. The set of people who have ideas for apps worth building is not everybody. It might be a larger set than the people currently making apps, but it’s finite, and probably not much larger.

It’s also worth noting that innovation is generally a team sport[1], which is why it’s great to see my pal Killian (along with his former colleagues from Meta) building tools expressly for teams.

Supply chain friction

Coding is only a small part of engineering, and engineering is only a small part of getting a product to market.

It’s one thing to get my app working at http://localhost:8000, or in the phone emulator on my IDE. Quite another to get it running on secure and robust infrastructure, or through the review process and published on an app store. That might not matter for the limit case above where I’m the only user. But it matters a lot if you want other people to use it; and a whole lot more if you want other people to pay for it. Chris Gregori sum’s this up with ‘Code Is Cheap Now. Software Isn’t‘.

Supply side constraint #2 – syndication

Entry level cloud hosting might be free, but there’s still work involved in using it; and if people show up then the bills will follow.

It’s a similar story for mobile apps. Apple and Google might not charge to get things into their stores. But the review process can often be slow and frustrating; and that can repeat every time there’s an update.

How do people know?

Ok. You’ve had a great idea, you’ve built the app in no time, it’s in a marketplace where people can find it (and maybe even pay). How does anybody with the need for what the app does even know?

This is a demand side constraint. People have limited time and attention; and they’re mostly already at saturation point with everybody else who’s shouting ‘buy my stuff’.

It’s really hard to get noticed. Dare Obasanjo recently posted some X screencaps of a Hendrik Haandrik thread that observed that:

In the last 3 months, about 24k new subscription apps were shipped…

Out of those 24,000 apps, a grand total of 700 (less than 3%) made more than $100.

Read that again

$100, not $100k

The odds are very much stacked against you

Demand side constraint – attention

How’s your better belly button fluff remover app going to get noticed in the sea of other apps? How do people worried about their belly button fluff even know that there’s now salvation? A lot of brands are spending a lot of money to get people’s attention (that’s what’s gotten us ‘filter failure at the outrage factory‘). You want your thing to be a viral hit, but all the social media sites are suppressing organic content in favour of more paid ads. It’s tough.

Conclusion

People have been asking ‘if AI coding is so great, where are all the apps?’. Hopefully I’ve shed some light on answering that question. The apps are stuck at various constraints that exist on both the supply side (having good ideas, and the dealing with supply chain beyond coding) and the demand side (getting people’s attention to know there’s something new that’s worth their time).

Theory of constraints tells us we should move our attention now to unblocking those constraints. It’s going to be a LOT of work…

And…

It would also be remiss of me not to mention Peter Evans-Greenwood’s excellent work on this topic, particularly ‘Are We in 1886? And 1919? ‘ subtitled “When a technology wave requires two grammars that history kept separate”:

The UK 1873-1896: Supply-Side Grammar. The technology worked but deployment was bespoke and expensive. The missing piece was coordination infrastructure for installation.

The US 1919-1925: Demand-Side Grammar. The technology was deployable but unaffordable for the mass market. The missing piece was coordination infrastructure for purchasing.

History kept these gaps separate. They never overlapped. Electrification solved its supply-side problem (1873-1896) decades before automobiles faced their demand-side problem (1919-1925).

Today, for the first time, both gaps must be solved simultaneously.

Update 19 Jan 2026

I had meant to say something about this post from Aporia (but forgot to weave it in):

What Claude Code has revealed is that most people either have mediocre ideas or no ideas at all. The tool is a force multiplier for those who already know what they want to build and how to think through it systematically; it elevates competence, rewards clarity, and accelerates execution for people who would have gotten there anyway, just slower. If you have a sharp vision and can break it into coherent steps, Claude Code becomes an extension of your own capability.

But there’s another mode of use entirely. For people without that clarity, the appeal is precisely that the input can stay vague; you gesture at something, hit enter, and wait to see what comes out. This is structurally identical to a slot machine: low effort, variable reward, and that intermittent reinforcement loop that hooks the susceptible. So the same tool that elevates the focused and capable is also manufacturing a kind of gambling behavior in people prone to it.

Note

[1] My friend Jamie Dobson has a great post that includes a rebuttal of ‘The Lone Genius Myth’.

Filed under: code, technology | Leave a Comment

Tags: AI, attention, cloud, coding, demand, DevOps, economics, ideas, innovation, saas, supply, theory of constraints

CHOP #4 has worked, and Milo’s scan today shows that he’s in remission again (before even getting his Epirubicin).

This cycle of chemo seemed to go better than previous protocols, until we got to the planned Epirubicin last week, and his neutrophils were too low. So we were back at North Downs Specialist Referals (NDSR) today for another go, and things were in better shape.

Chlorambucil prescription

For previous protocols NDSR have provided the Cholorambucil (and previously Cyclophosphamide) that’s been administered by my local vet. 6mg (3x 2mg tablets) was coming in at £41.77.

This time around the oncologist suggested that he give me a prescription (£20) and that I get the tablets from an online pharmacy such as Weldricks, where the tablets are £2.49ea. I registered an account, uploaded a scan of the prescription, and placed an order as soon as I got home from NDSR. With refrigerated shipping all 12 tablets (for the whole protocol) came in at £36.67, saving £110.41 over the protocol.

But then nothing happened for a few days, and the clock was ticking towards the day the first dose was due. On closer reading of the confirmation email I found:

If you have a Vet controlled drug order you must send your paper prescription to the address below.

There had been no mention of this in the ordering process :0

I got the paper prescription into the post. But later that day (before it could possibly have arrived) there was a shipping confirmation, and I was able to pick the next day to receive the tablets.

It was a little stressful, but we’re all set now :)

Prompt payment from ManyPets

As I write this ManyPets are up to date on paying all claims, with the last two settled same day :)

The claim for the prescription mentioned above took a little longer to settle, as I hadn’t claimed for the online pharmacy invoice whilst waiting for it to be fulfilled. But that’s all straightened out now.

Past parts:

Filed under: MiloCancerDiary | Leave a Comment

Tags: chemo, chemotherapy, Cholorambucil, Epirubicin, insurance, lymphoma, ManyPets, Miniature Dachshund, online pharmacy

December 2025

Pupdate

It’s been quite dry over the Christmas break, which has encouraged some longer than usual walks that the boys have enjoyed.

After a scan at the start of the month Milo has now almost completed the first cycle of his 4th modified ‘CHOP’ chemotherapy protocol. As before, low neutrophils mean we’re a little behind the ideal schedule; but also he’s never made it through the early cycles without some delay.

Gigs

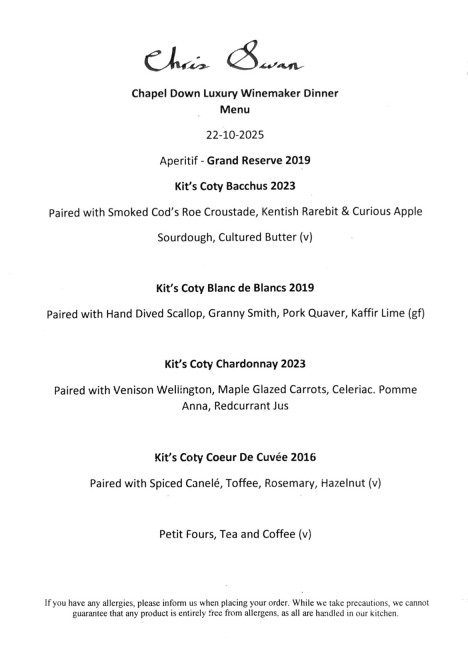

Steve Hogarth

This was our third time in as many years seeing ‘h natural’ at Trading Boundaries, and he really seems to have settled into enjoying the venue. He treated us to another selection of covers, solo material and Marillion tracks; and there was even more audience participation than before, including a performance of Talking Heads ‘Once in a Lifetime’ that had about half a dozen people joining Steve at his keyboard.

Wakeman and Wilson

On a trip with the kids to look at the Roger Dean gallery $wife was persuaded to get some tickets for the Christmas event featuring Adam Wakeman and Damian Wilson. It was a lot of fun, and seems likely to become a regular feature for future festive seasons.

Hackers in the House

I was aware of the first Hackers in the House, last year, but only after the fact. So when it popped up in my Mastodon feed this year I applied to participate.

It was weird to do an event where I didn’t know anybody else; though I did get to meet a couple of folk I knew from the Internet :)

Was it worth a day to learn how the policy sausage is made (and hopefully make future policy better for practitioners)? I think yes – the folk from government seemed very receptive to the input from the room.

My one big takeaway (my own analysis). During Brexit we talked about Britain as a ‘rule taker’ or ‘rule maker’. My read on what’s now happening is that we’re a ‘rule fudger’. The EU is pushing ahead with some pretty big legislation in the cyber security space, such as the Cyber Resilience Act (CRA). Meanwhile the UK government is publishing voluntary codes of practice. For a lot of the areas we talked about it felt like it doesn’t really matter what UK policy is, because the CRA will be shaping what most suppliers actually do.

Health & Fitness

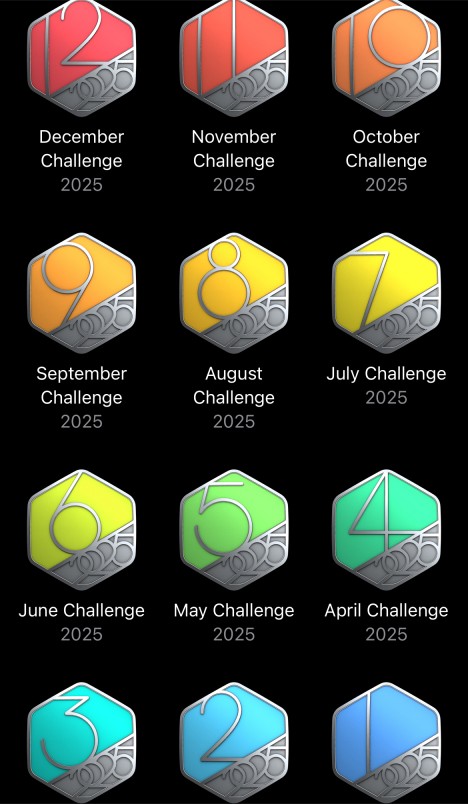

A year of monthly challenges

I’ve had a couple of years where I’ve completed 11 monthly challenges, with one where I frustratingly missed December; but this is my first time getting the complete set.

NHS Healthcheck

“You might think you’re healthy, but you really have no idea, as you’ve not seen a doctor since before Covid”, was becoming a frequent refrain from $wife. I was also a little concerned that I seem to be getting more colds than usual in the past few years. Some sort of deficiency? It was time to find out.

My doctor’s surgery online booking system offered an NHS Health Check, which seemed to be what I needed (and without bothering an actual doctor for an appointment). I had to book two appointments – one for blood to be taken for tests, and another a couple of weeks later to go over the results.

I knew ahead of the second appointment that my blood tests were all normal, as the results appeared in the NHS app on my phone. The consultation was spent going through a lifestyle questionnaire, and that didn’t reveal any surprises or demand any changes. Hurray :) Except I still don’t know why I’m getting so many colds? Aging, more stuff going around post Covid, population wide immune problems post Covid – they’re all in the mix, with no clear answers.

Shingrix

The evidence is mounting that the Shingles vaccine provides protection against dementia, and I don’t want to wait another decade to qualify for it on the NHS. So that was quite an expensive trip to my local pharmacy :0

The pharmacist warned me that it would likely kick my ass, and she wasn’t wrong. I went to bed with a sore arm, and aches all over, and woke a few hours later to shivers. But, by the morning I was feeling OK. Apparently the second dose (due in 2 months) isn’t usually so bad.

Washing machine repair

Our 13 year old Bosch washing machine started leaving puddles on the floor. As the door seal was disgusting, I ordered a replacement, which took a couple of days to arrive. I did that despite not finding any obvious damage that would allow water out.

The new seal took about an hour to fit, following this excellent guide. Running a test wash afterwards seemed fine, but then there was another puddle :(

Somehow I’d failed to spot that the hose for the fabric conditioner had come off. So the machine was getting to quite late in a wash cycle then squirting water.

Getting to the hose to reattach it meant repeating some steps from the seal replacement, but by then I knew what I was doing with my new hook pick and the other tools involved.

Although the replacement seal wasn’t strictly necessary, it’s nice that the machine is looking like new again :)

New IT bits

I didn’t really want a new printer and graphics card, but events forced my hand.

Printer

The Dell 1320CN that I got 15y ago started printing with coloured stripes that weren’t going away. Perhaps a victim of too little use now that $wife does most of her printing at work.

I considered not replacing it, but when a deal came up on a Brother HL-L3240CDW I went for it. It’s small, quiet, network connected, and does duplex colour printing; so everything I need. Consumables look reasonable, but only time will tell on that front…

Graphics card

I did a separate post on my Silent PC GPU upgrade, but it was a bunch of money and time just to avoid forced obsolescence because Nvidia doesn’t play nicely with Linux :(

Maybe one day I’ll do some gaming where I can marvel at how much smoother the pixels are :/

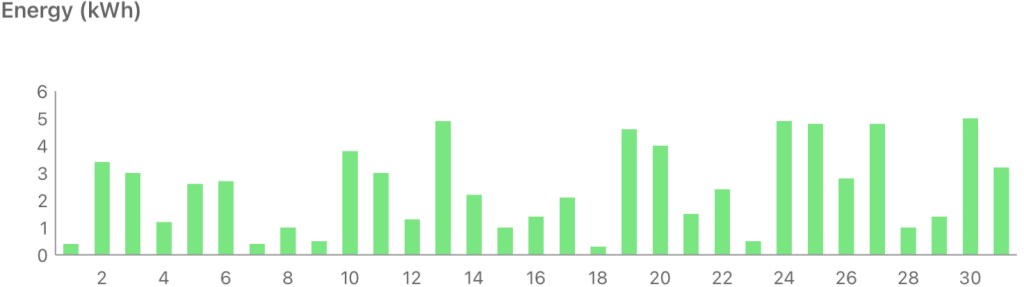

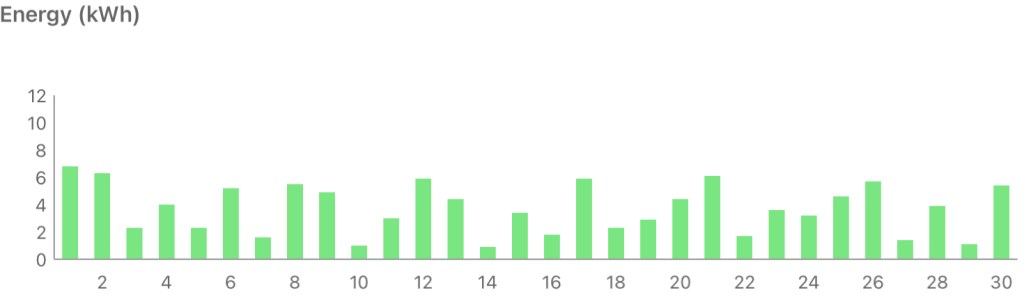

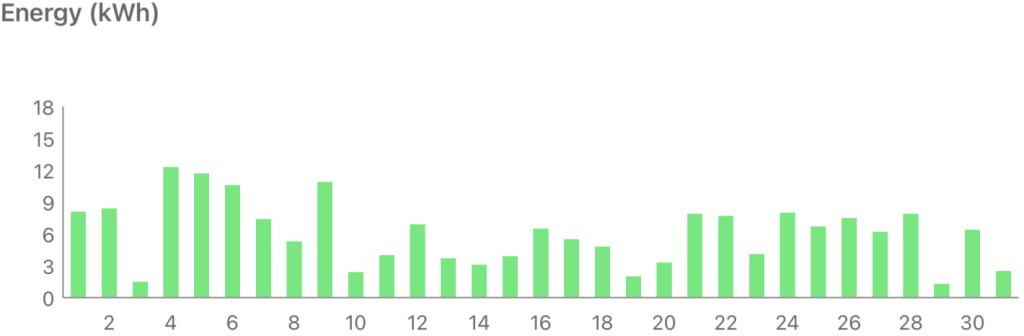

Solar diary

December is cold and dark, but this December was less dark than the past couple of years, with some bright sunny days.

VR

Last month I felt that practice in Clay Hunt VR was throwing off my real world clays game. This month, not so much.

Filed under: monthly_update | Leave a Comment

Tags: Adam Wakeman, chemotherapy, clays, Damian Wilson, door seal, fitness, gig, GPU, graphics card, Hackers in the House, healthcheck, Miniature Dachshund, printer, pupdate, repair, shingles, Shingrix, solar, Steve Hogarth, Trading Boundaries, vaccine, vr, washing machine

Silent PC GPU upgrade

TL;DR

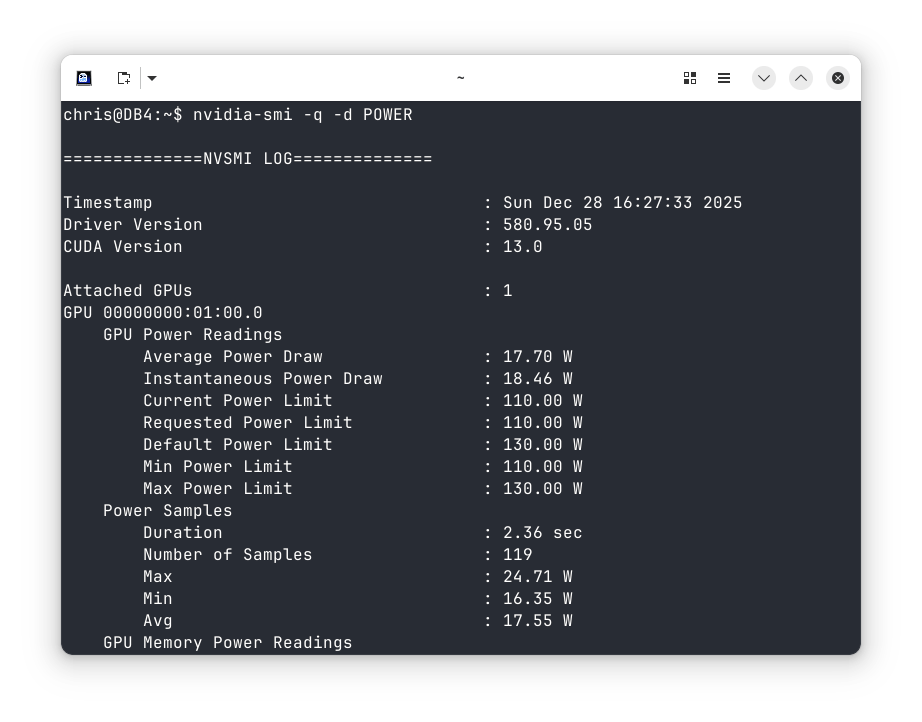

Nvidia have ended Linux support for my ‘Pascal’ GTX 1050 Ti GPU. I’ve been able to fit an RTX 5050 card in its place, though the process was problematic due to driver issues. And I’m still concerned that it can only be limited to 110W when my passive cooling is rated up to 75W.

Background

When I upgraded my silent PC earlier in the year I kept the original graphics card, with an Nvidia GTX 1050Ti. I couldn’t find anything better that fitted into the 75W power budget.

Then I saw Justin Garrison’s post linking to ‘NVIDIA Drops Pascal Support On Linux, Causing Chaos On Arch Linux‘.

I replied:

Grrr. I was considering options to upgrade the 1050Ti in my silent desktop, but nothing was compelling (mostly as Nvidia don’t do a 75W card any more).

Forced obsolescence sucks 🙁

It was clear that I was now on an obsolete platform, and I’d need a new card sooner or later. I decided to get the trouble out of the way before the Christmas break ended.

New card

A quick look at Quiet PC suggested that the Palit GeForce RTX 5050 StormX 8GB Semi-fanless Graphics Card would be the way to go. Unfortunately they were closed for Christmas.

Luckily Scan also had the card, and they were offering next day delivery. I opted to save £8.99 by not going for Sunday delivery, but (hurrah) it came on Sunday anyway. Top marks to Scan (and DPD) :)

Although it’s a 130W card, I found these instructions showing how to limit power usage – ‘Set lower power limits (TDPs) for NVIDIA GPUs‘.

I also ordered a 6pin to 8pin PCIe converter cable, as I knew my PSU didn’t have a newer GPU cable.

Cooling

Job 1 was to remove the heatsink and fan, which just needed a few screws to be taken out. I then set about swapping the DB4 GPU cooling kit from the old card to the new. Thankfully it was possible to do that without completely dismantling the PC. I was even able to leave everything apart from the display cables and power plugged in.

The mounting holes for the heat pipe cooler block were in the same spacing as before, and there was just enough space around the GPU. So no drama with this bit. I also had some heatsinks spare that I could attach to the RAM and other chips that were in contact with the OEM heatsink/fan arrangement.

Power

I’d bought the 6pin to 8pin converter knowing that I didn’t have an 8pin connector; but falsely thinking that the existing card used a 6pin.

It did not :( The old card just took power from the PCIe bus, which can supply the 75W it used.

There is a 6pin connector, but that goes to the motherboard.

So… I did this crime against cabling by sacrificing the SATA and Molex cables I don’t use and splicing their power to the adaptor cable I’d bought.

That got me to a system that would power up and show a screen, which is when the real fun began.

Drivers

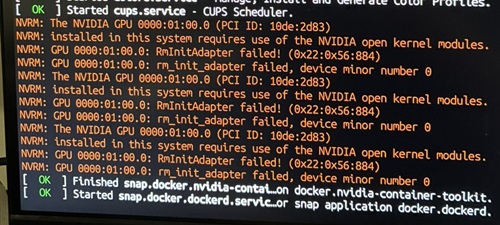

As I already had an Nvidia card using their official drivers I expected the new card to ‘just work’. It did not.

I got a BIOS boot screen, and then the Kubuntu splash screen as it booted. But no login screen. Just a cursor blinking top left of an empty black screen.

Worse still, the keyboard driver was being disabled at some stage during boot, so I couldn’t just jump into a console and fix things from there.

When I tried unplugging the keyboard and plugging it back in again I got:

usbhid: couldn't find an interrupt endpointDisabling secure boot in the BIOS didn’t improve things.

Getting to grub

I needed to get to a console, which meant interrupting the regular boot.

According to many sources online all I needed to do was hold down (right) shift during boot. This accomplished nothing.

Next I tried hitting Esc. Unfortunately my repeated presses bounced me through the grub menu and into the grub command line. I needed to hit Esc, once, at precisely the right time.

On one of my failed attempts Esc got me the dmesg output during a regular boot, which revealed this gem:

Eventually I hit Esc at just the right time, which let me boot into the console and uninstall the existing Nvidia drivers with:

apt purge ^nvidia-.*That got me a system that would properly boot. But only a single screen. I still needed the proper drivers:

sudo apt install nvidia-driver-580-open

Finally… I was back to a proper multi monitor setup. All that remained was to configure power limiting. Unfortunately it turns out I can’t set things to 75W, as the lowest limit is 110W. On the other hand it does seem that quiescent power consumption is about half what the old card used to consume, so maybe I’ll save some pennies on my electricity bill :/

This could have gone much easier if…

- I’d known to uninstall the existing drivers first (and maybe even get the -open drivers in place)

- I’d reconfigured grub to make it easier to get into a console.

Performance (per watt)

I’ve not noticed any improvement in performance, but then I don’t generally use this PC for gaming.

According to GPU Monkey my old 1050 Ti scores 2mp or 0.0267mp/W.

The new 5050 scores 11mp, so 5.5x faster, but also consumes 130W to do that, so 1.73x more power. So only a 3.17x improvement in performance per Watt to give 0.0846mp/W.

Conclusion

This was an upgrade for necessity rather than something I really wanted. My daily use of the PC isn’t improved in any noticeable way.

I’m also a little concerned that I can’t limit the power to the rated capacity of the passive cooling, so if I ever do drive it hard with some gaming it’s likely to overheat and hit thermal throttling.

Filed under: howto, technology | Leave a Comment

Tags: 1050 Ti, 5050, console, cooling, drivers, GPU, grub, GTX, Kubuntu, Linux, NVidia, Palit, passive, power, RTX, silent, StormX

Milo’s had a fantastic long remission – it’s been almost nine months since his last chemo. Long enough that we started hoping for a miracle, and that he might not relapse again.

But… the good folk at North Downs Specialist Referrals (NDSR) were right to be concerned about his last scan, and get him back sooner than we’d originally planned. Although there are no signs of him being unwell, the lymph nodes are definitely growing :(

So… we’re back to weekly vet visits, for blood tests, and chemo if the bloods are looking OK. He’ll be doing the same modified CHOP protocol as last time – so Vincristine, Chlorambucil, Vincristine and Epirubicin over four cycles, along with some Prednisilone for the first few weeks.

Also… wow! I did not expect to still be adding entries to this diary (almost) three years later when I started in Jan ’23. Milo’s responded really well to past treatment, and we can only hope that keeps on going.

Past parts:

Filed under: MiloCancerDiary | Leave a Comment

Tags: cancer, chemo, chemotherapy, Chlorambucil, CHOP, Epirubicin, Milo, NDSR, Prednisilone, protocl, relapse, remission, Vincristine

November 2025

Pupdate

It’s been pretty cold and wet, so the boys are needing to wear their coats outside.

Milo had a scan at the start of the month. Initially things were looking good, and the plan was to stretch out the next visit to three months time :) But then the technician noticed some lymph node density changes, so we’ll be back next week…

Briana Corrigan

Another brilliant act at Trading Boundaries. She sang a fab mix of Beautiful South material, her own songs, and some covers.

RC2014 Picasso

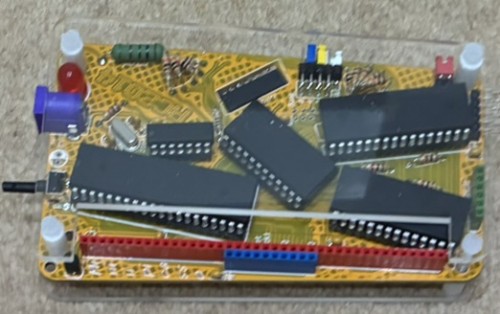

Having ordered my kit back in the Easter holidays I’d been waiting for a wet (and quiet) weekend to put it together – not expecting it to be quite so many months :/

Annoyingly it didn’t work straight away, and the cause wasn’t obvious. After swapping components with my working RC2014 Mini, and checking all the obvious stuff with a meter and scope I paused.

Jason Byrne

Another act that we would have usually seen at the Fringe, but also one of the kids’ favourites. So we caught his tour as it passed through London. Hilarious as always. I think he only got to about 10m of prepared material in almost 2h as there was a LOT of audience interaction.

Retro meetup

The Centre for Computing History in Cambridge held a retro gaming event that was a good excuse to get (some of) the meetup crowd together in person. It was also great to meet and chat with some of the exhibitors.

RC2014 Picasso cont.

I’d hoped to chat to RC2014 creator Spencer at the event, but sadly he was unwell and didn’t make the trip. So the debugging continued using the name badge card from RC2014 Assembly. Eventually I noticed a difference in how I held the system – a loose connection or dry joint I’d somehow missed. It was good to see it reliably booting :)

LUX

I’d not previously heard of Rosalía, but I noticed some raving about her new album on Bluesky. Then I saw the video for Berghain. Wow! Instant buy.

I’ve probably listened to it almost every day since the CD arrived, as it’s a masterpiece.

Of course I’ve now gone into the back catalogue and bought ‘El Mal Querer’ and ‘Motomami’.

It’s a little weird listening to stuff where I don’t understand most of the lyrics. But I realised years ago that it’s possible to enjoy singing without understanding it, when a friend gave me an album from the Icelandic band he was part of.

Boots

I got some Sorel Boots at Rei in Tysons Corner on one of my last DXC trips, so they’ve lasted me well considering I’ve worn them a LOT over the 6y or so. They were comfortable from day one, and so they’ve joined me on many adventures.

But… the soles were starting to wear through, and then when I checked them over before sending away for a resole I noticed splits between the upper and sole on both boots. I’m amazed that I hadn’t regularly got wet feet :/

Finding some replacements wasn’t straightforward. I’ve settled for a pair of Panama Jack P03 Aviator C23. Early signs are promising (despite $wife saying the sheepskin lining looks ‘chavvy’), though I’m taking some care breaking them in.

Solar Diary

It’s been cold, and dark, so not a great time of the year for solar generation, but also not the worst November.

VR

After practicing all month I’ve managed to get much better and more consistent at Clay Hunt VR. I can shoot perfect rounds of skeet and trap, and my scores on the various sporting rounds are edging upwards. Did this translate into a real world improvement at the clays ground? No :( If anything my score was worse than usual, and I found myself missing shots I’d usually expect to find easy.

Filed under: monthly_update | Leave a Comment

Tags: boots, Briana Corrigan, clays, comedy, dachshund, Jason Byrne, Miniature Dachshund, music, pupdate, RC2014, retro, Rosalía, solar, vr

TL;DR

Our online discourse is the victim of industrial scale pollution, and the incentives are being aligned in the wrong direction. Rather than polluters being penalised there’s now an entire industry that’s paid to pollute.

Filter Failure at the Outrage Factory is no longer just the work of ‘amateur’ fringe trolls and state sponsored propaganda; it’s become a profession.

Pollution of our lived environment is perhaps more visceral than our information space.

We lost our best watchers

The Stanford Internet Observatory (and particularly Renée DiResta[1]) did sterling work tracking and educating about the spread of online misinformation. Sadly they fell victim to a concerted lawfare campaign. In many ways their shutdown tells us all we need to know about the present situation in terms of politics and incentives.

Yet sometimes the rot is too obvious to ignore

X recently added an account location feature that exposed numerous highly visible US political accounts as being located elsewhere. The BBC ran with ‘How X’s new location feature exposed big US politics accounts‘, whilst 404 went with ‘America’s Polarization Has Become the World’s Side Hustle‘, noting:

The ‘psyops’ revealed by X are entirely the fault of the perverse incentives created by social media monetization programs.

It turns out you can fund a reasonable lifestyle in a ‘low income’ country by shit stirring online; and (as Terry Pratchett might put it), “it’s indoor work with no heavy lifting”.

What’s to be done?

We’ve dealt with pollution before. Early industrialists poisoned their workers and the land around their factories. The costs were borne by society whilst they continued to rake in the profits – what economists call an ‘externality‘.

We’re facing exactly the same problem again, only this time the pollution is to our information space rather than our lived environment. But that doesn’t make the toxic effects any less damaging. Our information space shapes our lived environment (and the policies that apply to it), so it’s vital to ensure that everything is kept clean.

Regulate away the poor incentives

We know how and why all this is happening. Outrage drives engagement, and engagement brings in advertising revenue. That flywheel has been amplified by taking a tiny fraction of the ad revenue to drive more outrage.

This is largely happening because social media companies have dodged the (reasonably effective) advertising regulation that applies to more traditional media. But there’s no reason to give them a pass.

Start with political will

This is the hard bit… regulation only happens when lawmakers feel a sense of urgency.

Kids dying from being poisoned gets an immediate response.

But the harms from the pollution of our information space aren’t so obvious. Worse they’re being actively obfuscated by… the pollution of our information space. It’s like the smog from the factory stopping anybody from noticing the kids choking to death.

In many cases one side of the political divide has persuaded itself that the outrage supports their case. Meanwhile their opponents are too in thrall of media power.

There are some glimmers of hope, that I’ll return to in another post; but right now it seems we’re a long way from solving this pollution problem.

Note

[1] Renée’s ‘Invisible Rulers: The People Who Turn Lies into Reality‘ provides an excellent overview of this problem.

Filed under: Uncategorized | Leave a Comment

Background

We build a bunch of stuff for RISC-V using the Dart official Docker image, but the RISC-V images can often arrive some time (days) after the more mainstream images[1]. That means that if we merge a Dependabot PR for an updated image it might well be missing RISC-V, causing the Continuous Delivery (CD) pipeline to break when trying to do a release :(

More testing

The answer is to have an additional test e.g. check_riscv_image.yml. This is triggered by any PR that’s changing a Dockerfile that might go awry because of an incomplete manifest. It then uses docker buildx to inspect the manifest, along with some jq to pick the bits we need out of the json. If we find a riscv64 image in there then all is good; otherwise the test fails and we know not to merge the offending PR (and wait a while longer for a more complete manifest to show up).

Note

[1] This isn’t just a problem for Dart, it happens for all of the official images that include RISC-V (and other less popular architectures). The underlying problem is the Docker folk just don’t have sufficient build infrastructure, and it’s particularly acute when lots of images are being (re)built at once (e.g. because of a new Debian stable release).

Filed under: Dart, howto, technology | Leave a Comment

Tags: CD, CI, Dart, Debian, Docker, GitHub Actions, image, manifest, RISC-V, testing

Don’t huff the fumes

TL;DR

Agentic systems are the latest thing being used to solve IT integration issues, becoming the glue squirted into the gaps between systems. But the use of natural language means that the distinction between ‘data’ and ‘code’ is almost impossible to make, which causes a whole raft of security concerns. This new glue may be powerful, but it gives off fumes that can cause a bunch of problems. Handle with care!

Agents are being used as space filling glue

Agentic AI agents are being put to use filling the gaps between systems in order to get them to integrate. Zack Akil has a post about this “AI Agents are the new 3D Printers“, which I might boil down the the observation that it’s fine to make a disposable prototype out of hot glue, but maybe consider other things if you want a load bearing structure.

Zack’s post inspired me to comment on LinkedIn:

This reminds me of some of the conversations around serverless a few years back.

The analogy I used was ‘space filling glue’, and 3D printing is (approximately) “what if we make things entirely out of space filling glue”.

Serverless functions also make a great (virtual) space filling glue. If you have some apps or services that don’t quite join together then you can squirt some functions into the gap and get a fit that works.

Agents are the new shiny, and so of course people are finding novel ways to use them to fill those annoying gaps between systems. More space filling glue. But once again, you might wish to think twice about building something load bearing entirely out of this stuff.

Glues through the ages

I’m sure there’s historical stuff about tree sap or whatever I could dig into; but there’s no need to go so far back.

My first memory of glue was 1970s adverts for ’10 second bonding’ Superglue; but cyanoacrylate is not ‘space filling’ and relies on perfectly matched surfaces that fit together. I came to discover that epoxy resin, and impact glue and various other forms were better for fixing many things. When my dad first showed me a hot glue gun it seemed like magic, but I came to discover it too had (many) limitations.

Of course another feature of the 70s was the scourge of ‘glue sniffers’ – people getting off their heads by inhaling the toxic solvents used in some glues.

It’s been a similar story with integrating IT systems. At first we had to arrange for the perfect fit, but over the years various forms of ‘middleware‘ have come along to facilitate integration. Before agents, serverless was the latest hotness (or hot glue); which caused me to observe at the time that serverless is great if you have a joining things together problem, but you might not want to construct entire systems from it.

And yet, we still have ‘swivel chair‘ integration; and mostly because it’s been deemed too risky to join systems with the glues at hand. I’ll speculate that agentic approaches don’t magically fix that.

IT’s original sin, repeated, and worse

We chose the Von Neumann architecture over the Harvard architecture because memory was expensive and thus rare; and its use could be better optimised if code and data shared the same space. Arguably this is the original sin of IT security, as many of the issues that beggar us today track back to not properly separating code from data. There have of course been successive attempts to remedy this, with something like Capability Hardware Enhanced RISC Instructions (CHERI) representing the state of the art.

Agentic systems double down on this original sin, turbocharged, and on steroids. Everything is in natural language, so there’s no clear way to separate ‘code’ from ‘data’. Sequences of tokens might be innocuous in isolation, but add a couple together and you get an attack. It seems the only way to tell is to ‘run’ it and find out. Halting problem anybody?

Is our new agentic integration glue ‘better’ than what we had before? For some situations undoubtedly yes. Safer? Hell no, this stuff makes gluing stuff together in an unventilated cupboard with giant open pots of contact adhesive look like the sane option. Don’t huff the fumes.

Filed under: security | Leave a Comment

Tags: agentic, agents, AI, CHERI, glue, integration, middleware, security