Impedance mismatches – in data and education

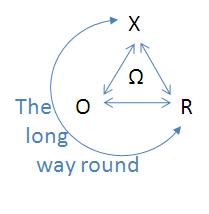

Impedance was one of those things that I really didn’t understand until I did my degree in electronics engineering. For those of you who are interested, Wikipedia is as ever a good resource, though the short version is that matching is all about ensuring efficient transfer of energy from source to load. I can’t recall whether Pete Lacey coined the term when talking about different data representations or if he was simply the first person I heard it from; regardless, it’s a great analogy. There is work involved in getting data between different representations such as relational [R] (as used in common database management systems), object [O] (as used in most popular programming languages) and XML [X] (as used for many web services). This work is often referred to as serialisation (converting to a given format) and deserialisation (converting from a given format), and the effort is often not symmetrical – in computational terms it’s fairly cheap to create XML, and pretty expensive to convert it back to other forms.

This is what led me a few years ago to talk about the X-O-R triangle, and the ‘long way round’ – where data only gets between XML and a relational database via an object representation in some programming language and runtime. The ‘long way round’ involves two lots of impedance mismatch, each with its own cost in terms of CPU cycles (and garbage objects) and potential loss of (meta)information along the way. Whilst organisations continue to use relational databases as their default system of persistence, this is one of the key reasons why a service oriented architecture based on XML web services will struggle to get by.
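To make the ‘long way round’ concrete, here is a minimal sketch in Python using only the standard library. Everything in it is an assumption for illustration: the trade message, its element names, the `Trade` class and the `trades` table are all hypothetical, and a real system would carry far more structure. It shows the two hops (XML to object, object to relational row) and how a piece of metadata can quietly fall off along the way.

```python
import sqlite3
import xml.etree.ElementTree as ET
from dataclasses import dataclass, astuple

# Hypothetical trade message; element and attribute names are invented.
TRADE_XML = """<trade id="T1" desk="rates">
  <notional currency="USD">1000000</notional>
  <maturity>2030-06-30</maturity>
</trade>"""


@dataclass
class Trade:
    # Hypothetical object model; note that it has no field for 'desk'.
    trade_id: str
    notional: float
    currency: str
    maturity: str


def xml_to_object(xml_text: str) -> Trade:
    """First mismatch: deserialise XML [X] into an object [O]."""
    root = ET.fromstring(xml_text)
    notional = root.find("notional")
    return Trade(
        trade_id=root.get("id"),
        notional=float(notional.text),
        currency=notional.get("currency"),
        maturity=root.findtext("maturity"),
    )


def object_to_row(conn: sqlite3.Connection, trade: Trade) -> None:
    """Second mismatch: flatten the object [O] into a relational row [R]."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS trades ("
        "trade_id TEXT PRIMARY KEY, notional REAL, currency TEXT, maturity TEXT)"
    )
    conn.execute("INSERT INTO trades VALUES (?, ?, ?, ?)", astuple(trade))


conn = sqlite3.connect(":memory:")
trade = xml_to_object(TRADE_XML)   # X -> O
object_to_row(conn, trade)         # O -> R

row = conn.execute("SELECT * FROM trades").fetchone()
columns = [c[1] for c in conn.execute("PRAGMA table_info(trades)")]
print(row)
print("desk" in columns)  # the 'desk' attribute never made it to the database
```

The `desk` attribute is present in the XML but has no home in either the object or the table, which is the kind of (meta)information loss described above. Each hop also allocates intermediate objects that exist only to be thrown away.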

Sean is right that we need open data to make the next generation of financial platforms work. I think it’s worth unpacking the various models (and underlying data representations) used in trading derivatives in order to see where the mismatches exist, as these are fundamental to the inefficiency of existing market participants (and probably go a long way towards explaining some of the misalignment of incentives that has landed us in a global financial crisis):

- The risk model. This is what the trader needs to price (and therefore sell) a derivative instrument. Such a model is normally a hybrid of a spreadsheet, which is used to marshal market data and other input variables, and a quantitative calculator, which is used to munge the variables held in the spreadsheet into a price. In simplistic cases the calculator can itself be part of the spreadsheet (and make use of the spreadsheet program’s calculation engine); but this isn’t a simplistic business, so the calculator tends to be an external model written in C++ or a mathematical simulation language. It’s easy to get fooled into thinking that spreadsheets are a simple case of relational data, as both are laid out in rows and columns. The trouble with spreadsheets is that they have no formal schema – the data is only structured in the mind of the spreadsheet author.

- The structural model. This is what the guys in the back office need in order to book, confirm and settle a trade. The structural model is implicit within the risk model, but it needs to be made explicit so that data can be passed around between systems. Over the years the format of these models has followed the fashions of the IT industry, starting with relational, passing through object and most recently adventuring into XML. Each fad has had compelling advantages, but each has ended up suffering from the same fundamental problem: whichever corner of the X-O-R triangle is chosen, it isn’t the language of the risk model (or the person who made it).

- The lifecycle model. Derivative instruments are ultimately a set of contingent cash flows, and therefore can be defined by their lifecycle, and yet this is rarely explicitly modelled. In part this has been because the appropriate tools have only just become available, with WS-CDL looking like the first standards-based approach. One of the key things that an interaction model like this allows is an understanding of transaction costs (which may be large in the tail of a long-lived instrument), which need to be set against any margin associated with the primary cash flows of the trade. Without such a model it’s entirely feasible for a trader to book what looks like a profitable trade (and run off with his bonus) when in fact it saddles the issuing organisation with a ton of long-tail transaction costs.
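The point about long-tail transaction costs is easy to show with a toy calculation. The sketch below is purely illustrative: the `LifecycleEvent` class, the ten-year horizon and every number in it are made up, and a real lifecycle model would be driven by the contingent events of the instrument rather than a flat annual cost.

```python
from dataclasses import dataclass


@dataclass
class LifecycleEvent:
    # Hypothetical lifecycle step: margin earned and cost incurred in one year.
    year: int
    margin: float
    transaction_cost: float


def net_margin(events: list[LifecycleEvent]) -> float:
    """Margin on the primary cash flows minus transaction costs over the whole life."""
    return sum(e.margin - e.transaction_cost for e in events)


# Invented example: all the margin is booked in year 1, but the instrument
# still has to be serviced (confirmed, settled, reset) every year for 10 years.
events = [LifecycleEvent(year=1, margin=50_000.0, transaction_cost=8_000.0)]
events += [LifecycleEvent(year=y, margin=0.0, transaction_cost=8_000.0)
           for y in range(2, 11)]

upfront = events[0].margin - events[0].transaction_cost  # what the trader sees
lifetime = net_margin(events)                            # what the firm ends up with

print(f"year-1 view:   {upfront:,.0f}")
print(f"lifetime view: {lifetime:,.0f}")
```

With these made-up figures the trade looks like a 42,000 profit on day one and a 30,000 loss over its life, which is exactly the gap an explicit lifecycle model is meant to expose.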

Clearly there are potential mismatches between each of these models, and these can be resolved by moving back to front (rather than the way things are done today). If we start with a lifecycle model (in WS-CDL) then this implies a structural model in XML, and that in turn can be used to construct the framework for a risk model. This is where I get onto education, as I think the key issue here is that traders (and most quants) don’t ever have an opportunity to learn enough about data representation (and its tradeoffs) to make an informed decision about which to choose.

I could characterise three generic mechanisms by which knowledge is imparted – education, training and experience. Computer science degree courses are so chock full of other stuff that they barely cover the basics of data representation (in object and relational forms). So… for hardcore XML (or RDF[1]) expertise that leaves us with training (and there’s precious little of that) and experience. This brings me to my other impedance mismatch. Industry (the ‘load’ for education) clearly needs more XML and similar skills, but students (our ‘source’) have no tolerance for extra time on this stuff being wedged into their curriculum; in fact most engineering and computer science courses are struggling to survive as their input gets drained away to easier, softer options. Maybe this is where things like the Web Science Research Initiative (WSRI) can help out, but until that comes to pass… JP, Graham – Help!

Perhaps the real problem here is the owner of the risk calculator that is so closely tied to the risk model – the modern day quant. In the great days of people like Emanuel Derman these guys came from academia and industry with sharp minds and a broad range of skills in their tool bag. Now quants are factory farmed on financial ‘engineering’[2] courses, producing a monoculture of techniques and implementations. If there’s a point of maximum impact for getting data representations onto the agenda then it’s probably these courses, but again the issue of what can be safely dropped rears its head. This is perhaps why Sean is right to focus on statisticians and those employing the semantic web in other disciplines; to slightly misquote Einstein, the thinking that got us into this mess won’t be what gets us out.

[1] Thanks @wozman for the amusing alt definition. For a more serious rundown on the Semantic Web James Kobielus has just posted a good overview.

[2] Engineering here in quotes as I can’t think of any of these courses that are certified by an engineering institution (which is why they are MSc rather than MEng). Yes, I know I’m being an engineering snob – that’s what happens to people who spend their time in the lab (learning about impedance) whilst their fellow students are playing pool.


Chris,

It’s a nice piece.

I think fundamental ambiguity and mismatch in the pragmatics of domain conceptualizations present a bigger problem than semantic and syntactic differences between models.

Vlad

Chris,

I got the term from Mark Luppi when he asked me to write up a paper on the XML Schema to RDBMS impedance mismatch. However, my understanding is that the term has a long history, at least as far back as object to relational mapping discussions in the ’80s.

Pete