TL;DR

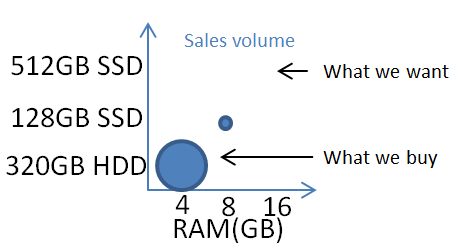

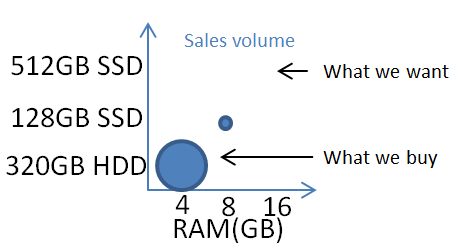

Anybody wanting a high spec laptop that isn’t from Apple is probably getting a low end model with small RAM and HDD and upgrading themselves to big RAM and SSD. This skews the sales data, so the OEMs see a market where nobody buys big RAM and SSD, from which they incorrectly infer that nobody wants big RAM and SSD.

Background

I’ve been complaining for some time that it’s almost impossible to buy the laptop I want – something small, light and with plenty of storage. The 2012 model Lenovo X230 I have sports 16GB RAM and could easily carry 3TB of SSD, so components aren’t the problem. The newer Macbook and Pixel 2 are depressingly close to perfect, but each just misses the mark. I’m not alone in this quest. Pretty much everybody I know in the IT industry finds themselves in what I’ve called the “pinnacle IT clique”. So why are the laptop makers not selling what we want?

I came to a realisation the other day that the vendors have bad data about what we want – data from their own sales.

The Lenovo case study

I spent a long time poring over the Lenovo web sites in the UK and US before buying my X230 (which eventually came off eBay[1]). One of the prime reasons I was after that model was the ability to put 16GB RAM into it.

Lenovo might have had a SKU with 16GB RAM factory installed, but if they did I never saw it offered anywhere that I could buy it. The max RAM on offer was always 8GB. Furthermore, the cost of a factory-fitted upgrade from 4GB to 8GB was more than buying an after-market 8GB DIMM from somewhere like Crucial. Anybody (like me) wanting 16GB RAM would be foolish not to buy the 4GB model, chuck out the factory-fit DIMM and fit RAM from elsewhere. The same logic also applies to large SSDs. Top end parts generally aren’t even offered as factory options, and it’s cheaper to get a standalone 512GB[2] drive than it is to choose the factory 256GB SSD. So you don’t buy an SSD at all; you buy the cheapest HDD on offer, because it’s going in a bin (or sitting in a drawer in case of warranty issues).

The outcome of this is that everybody who wanted an X230 with 16GB RAM and 512GB SSD (a practical spec that was purely hypothetical in the price list) bought one with 4GB RAM and a 320GB HDD (the cheapest model).

Looking at the sales figures, the obvious conclusion is that nobody buys models with large RAM and SSD, so should we be surprised that the next version, the X240, can’t even take 16GB RAM?

The issue here is that the perverse misalignment in pricing between factory options and after-market options has completely skewed what’s sold, breaking any causal relationship between what customers want and what customers buy.
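To make the mechanism concrete, here is a minimal sketch of a buyer who always takes the cheapest route to the spec they want. Every price, the `FACTORY` and `AFTERMARKET` tables, the mix of buyers and the `purchased_config` helper are invented for illustration; none of them are real Lenovo or retail figures.

```python
from collections import Counter

# Hypothetical factory price list: (ram_gb, storage) -> price. Numbers are invented.
BASE = (4, "320GB HDD")
FACTORY = {BASE: 800, (8, "256GB SSD"): 1250}

# Hypothetical after-market cost of upgrading the base model to a given spec.
AFTERMARKET = {(8, "256GB SSD"): 250, (16, "512GB SSD"): 450}


def purchased_config(wanted):
    """Return the factory config a cost-minimising buyer actually orders."""
    if wanted == BASE:
        return BASE
    diy_total = FACTORY[BASE] + AFTERMARKET[wanted]
    factory_total = FACTORY.get(wanted)  # None when the spec is not offered at all
    if factory_total is not None and factory_total <= diy_total:
        return wanted
    return BASE  # buy the cheapest model and upgrade it at home


# An assumed population: half the buyers want more than the base spec.
wants = [(16, "512GB SSD")] * 3 + [(8, "256GB SSD")] * 2 + [BASE] * 5
sales = Counter(purchased_config(w) for w in wants)
print(sales)
```

With these made-up numbers every sale is recorded against the base model, even though half of the buyers wanted something bigger. That is the picture the OEM's sales data would paint.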

Apple has less bad data

Mac laptops have become almost impossible to upgrade at home so even though the factory pricing for larger RAM and SSD can be heinous there’s really no choice.

It’s still entirely credible that Apple are looking at their sales data and seeing that the only customers that want 16GB RAM are those that buy 13″ Macbook Pros – because that’s the only laptop model that they sell with 16GB RAM.

I’d actually be happy to pay the factory premium for a Macbook (the new small one) with 16GB RAM and 1-2TB SSD, but that simply isn’t an option.

Intel’s share of the blame

I’d also note that I’m hearing increasing noise from people who want 32GB RAM in their laptops, which is an Intel problem rather than an OEM problem because all of the laptop chipsets that Intel makes max out at 16GB.

Of course it’s entirely likely that Intel are basing their designs on poor quality sales data coming from the OEMs. It’s likely that Intel sees essentially no market for 16GB laptops, so how could there possibly be a need for 32GB?

Intel pushing their Ultrabook spec as the answer to the Macbook Air for the non Apple OEMs has also distorted the market. Apple still has the lead on both form factor and price whilst the others struggle to keep up. It’s become impossible to buy the nice cheap 11.6″ laptops that were around a few years ago[3], and the market is flooded with stupid convertible tablet form factors where nobody seems to have actually figured out what people want.

Conclusion

If Intel and the OEMs it supplies are using laptop sales figures to determine what spec people want then they’re getting a very distorted view of the market, a view twisted by ridiculous differences between factory option pricing for RAM and SSD versus market pricing for the same parts. Only by zooming back a little and looking at the broader supply chain (or actually talking to customers) can it be seen that there’s a difference between what people want, what people buy and what vendors sell. Maybe I do live amongst a “pinnacle IT clique” of people who want small and light laptops with big RAM and SSD. Maybe that market is small (even if the components are readily available). But I’m pretty sure that the market is much bigger than the vendors think it is, because they’re looking at bad data. If the Observation in your OODA loop is bad then the Orientation to the market will be bad, you’ll make a bad Decision, and carry out bad Actions.

Update

20 Aug 2015 – it’s good to see the Dell Project Sputnik team engaging with the Docker core team on this Twitter thread. I really liked the original XPS13 I tried out back in 2012, but that was just before I discovered that 8GB RAM really wasn’t enough, and that limit has been one of the reasons keeping me away from Sputnik. There’s some further objection to (mini) DisplayPort and the need to carry dongles, and proprietary charging ports, but I reckon both of those things will be sorted out by USB-C.

Notes

[1] Though I so nearly bought one online in the US during the 2012 Black Friday sale.

[2] I’ll run with 512GB SSD for illustration as that’s what I put into my X230 a few years back, though with 1TB mSATA and 2TB 2.5″ SSDs now readily available it’s fair to conclude that the want today is 2x or even 4x. I’ve personally found that (just like 16GB RAM) 1TB SSD is about right for a laptop carrying a modest media library and running a bunch of VMs.

[3] That form factor is now dominated by low end Chromebooks.

The DNS resolver for a VPC is always at the +2 address, so if the VPC is 172.31.0.0/16 then the DNS server will be at 172.31.0.2. Amazon and the major OS distros do a good job of folding that knowledge into VM images, so pretty much everything just works with that DNS, which will resolve any private zones in Route 53 and also resolve names for public resources on the Internet (much like an ISP’s DNS does for home/office connections).
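The +2 rule is easy to check with a few lines of Python. This is only a sketch of the arithmetic, using the standard library's `ipaddress` module; the `vpc_dns_resolver` helper is a name made up here, not part of any AWS tooling.

```python
import ipaddress


def vpc_dns_resolver(vpc_cidr: str) -> ipaddress.IPv4Address:
    """Return the base-plus-two address of an IPv4 VPC CIDR block."""
    network = ipaddress.IPv4Network(vpc_cidr)  # raises ValueError if host bits are set
    return network.network_address + 2


print(vpc_dns_resolver("172.31.0.0/16"))  # 172.31.0.2
```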
