Zurich to Munich by Bus

TL;DR

If you happen to be in Zurich, and you want to get to Munich (or vice versa), then it turns out that the Inter City Bus (ICB) is probably the least worst option.

Background

I was in Zurich last week speaking at the Open Cloud Forum hosted by UBS, where it was my great honour to share a stage with Mark Shuttleworth. I’d originally planned to fly back to London City straight after the event, but a customer meeting in Munich cropped up.

Train

The first thing I checked was the train schedule, and what it revealed was not what I expected. Rather than some shiny high speed service that would whisk me from city to city in no time I was presented with two awful choices:

- Four different trains (== 3 changes) shuffling from one place to another taking a grand total of around 4h45 (assuming everything worked, which is usually a safe assumption for Swiss trains).

- Bouncing in and out of Stuttgart on high speed trains for a journey taking 5h40.

Plane

There are fairly frequent direct flights from Zurich to Munich, which take about an hour; but they’re not cheap (~£200) and then there’s the whole palaver of get to the airport, security, lounge, gate, boarding, arrivals, get to the city. A one hour flight was going to be a 4-5 hour journey, and that time wasn’t going to be at all productive.

Automobile

It crossed my mind that I could probably just get an Uber or something like that, but the complexities of crossing borders put me off.

Bus

The train schedule actually showed the bus as an option. I’m guessing this particular route is something of a bandaid over what would otherwise be a clockwork machine for getting people around the continent.

Fare was advertised as ‘from €19.90’, which is in fact what I ended up paying. The following day a colleague commented that he’d paid more for a taxi from another hotel in the city.

Getting to the bus was easy, as the bus station is right by Zurich HB. I expected a bustling place, but for the whole time I was there the only bus present was the one I was getting.

The seats were spacious and comfortable, offering about as much space as extra legroom seats on flights.

Although the booking process offered me a seat reservation it wasn’t shown anywhere on my ticket. The first seat I chose turned out to be reserved by somebody who could see their number, and it seems I wasn’t the only one playing musical chairs.

Free WiFi was offered, but didn’t work for me, which didn’t matter as my various mobile devices all worked perfectly.

Overhead space for carry on was minimal, and I regretted handing over my bag to go in the hold given that once boarding was complete the bus was less than half full – so I could easily have just dropped it on the empty seat beside me; though retrieving it on arrival cost me mere seconds.

Looking at the other passengers my read was that there were a fair number of business travellers (~60%), some tourists (~30%) and backpackers (~10%).

Punctuality was about what I expected – the trip scheduled for 3h45 took 4h30. We hit bad traffic on the motorway outside of Zurich, and there were lots of roadworks along the way.

The terminus was right by the S-Bahn station, so getting to my hotel was a breeze – I think 3 trains that I could have caught whisked through the station in the time it took me to figure out what sort of ticket I needed from the machine.

Conclusion

Bus wouldn’t be my first choice for this sort of travel, but it was comfortable, inexpensive, productive and punctuality didn’t break my expectations.

Filed under: travel

Tags: bus, Munich, travel, Zurich

Accelerate

TL;DR

Accelerate is now my top book recommendation for people looking for practical guidance on how to do DevOps. It’s a quick read, actionable, and data driven.

Background

I’ve previously recommended the following books for DevOps:

- The Phoenix Project – Gene Kim’s respin of The Goal is an approachable tale of how manufacturing practices can be applied to IT to get the ‘three DevOps ways’ of flow, feedback and continuous learning by experimentation. It’s very accessible, but also leaves much to the reader’s interpretation.

- The DevOps Handbook – is much more of a practitioner’s guide, covering what to do (and what not to do) with copious case studies to illustrate things that have worked well for others.

- The SRE Book – explains how Google do DevOps, which they call Site Reliability Engineering (SRE). The SRE prescription seems to have worked in many places beyond Google (usually at the hands of Xooglers), so if you’re happy to follow a strict prescription this is a known working approach[1].

I’ve also been a big fan of the annual State of DevOps Reports emerging from DevOps Research and Assessment (DORA) that have been sponsored by Puppet Labs, as they took a very data rich approach to the impact and potential of DevOps practices.

Bringing it all together

Accelerate is another practitioner’s guide like The DevOps Handbook, but it’s much shorter, and replaces case studies with analysis of the data that fueled the State of DevOps Reports. It’s more suitable as a senior leader’s guide – explaining the why and what, whilst the DevOps Handbook is better fitted to mid level managers who want to know how.

If you’ve been fortunate enough to hear Nicole, Jez and Gene speak at conferences over the past few years you won’t find anything groundbreaking in Accelerate – it’s very much the almanac of what they’ve been saying for some time; but it spares you having to synthesise the guidance for yourself as it’s all clear, concise and consistent in one place.

Roughly half of the book is spent explaining their homework on the data that drove the process. Showing their working is, I think, necessary to the credibility of what’s being presented, but it need not be read and understood in detail if you just want to get on with it.

Conclusion

If you’re going to read one book about DevOps this is the one.

Update

Jez and Nicole were interviewed on the a16z Podcast: Feedback Loops — Company Culture, Change, and DevOps, which provides a great overview of the Accelerate material.

Note

[1] It’s also worth noting that Google have worked with some of their cloud customers to create Customer Reliability Engineering (CRE), which breaks through the normal shared responsibility line for a cloud service provider.

Filed under: review

Tags: Accelerate, book, DevOps, review

Why?

Everything you access on the Internet starts with a Domain Name System (DNS) query to turn a name like google.com into an IP address like 216.58.218.14. Typically the DNS server that provides that answer is run by your Internet Service Provider (ISP) but you might also use alternative DNS servers like Google (8.8.8.8). Either way regular DNS isn’t encrypted – it’s just plain text over UDP Port 53, which means that anybody along the way can snoop on or interfere with your DNS query and response (and it’s by no means unheard of for unscrupulous ISPs to do just that).

Cloudflare recently launched their 1.1.1.1 DNS service, which is fast (due to their large network of local data centres plus some clever engineering) and offers some good privacy features. One of the most important capabilities on offer is DNS over TLS, where DNS queries are sent over an encrypted connection, but you don’t get that by simply changing the DNS settings on your device or your home router. To take advantage of DNS over TLS you need your own (local) DNS server that can answer queries in a place where they won’t be snooped or altered; and to do that there are essentially two approaches:

- Run a DNS server on every machine that you use. This is perhaps the only workable approach for devices that leave the home like laptops, mobiles and tablets, but it isn’t the topic of this Howto – maybe another day.

- Run a DNS server on your home network that encrypts queries that leave the home. I already wrote about doing this with OpenWRT devices, but that assumes you’re able and willing to find routers that run OpenWRT and re-flash firmware (which usually invalidates any warranty). Since many people have a Raspberry Pi, and even if you don’t have one they’re inexpensive to buy and run, this guide is for using a Pi to be the DNS server.

Prerequisites

This guide is based on the latest version of Raspbian – Stretch. It should work equally well with the full desktop version or the lite minimal image version.

If you’re new to Raspberry Pi then follow the stock guides on downloading Raspbian and flashing it onto an SD card.

If you’re already running Raspbian then you can try bringing it up to date with:

sudo apt-get update && sudo apt-get upgrade -y

though it may actually be faster to download a fresh image and reflash your SD card.

Installing Unbound and DNS utils

Unbound is a caching DNS server that’s capable of securing the connection from the Pi to 1.1.1.1. Other options are available. This guide also uses the tool dig for some testing, which is part of the DNS utils package.

First ensure that Raspbian has up to date package references:

sudo apt-get update

Then install Unbound and DNS utils:

sudo apt-get install -y unbound dnsutils

At the time of writing this installs Unbound v1.6.0, which is a point release behind the latest v1.7.0, but good enough for the task at hand. Verify which version of Unbound was installed using:

unbound -h

which will show something like:

usage: unbound [options]
start unbound daemon DNS resolver.
-h this help
-c file config file to read instead of /etc/unbound/unbound.conf
file format is described in unbound.conf(5).
-d do not fork into the background.
-v verbose (more times to increase verbosity)
Version 1.6.0
linked libs: libevent 2.0.21-stable (it uses epoll), OpenSSL 1.1.0f 25 May 2017
linked modules: dns64 python validator iterator
BSD licensed, see LICENSE in source package for details.
Report bugs to [email protected]

At this stage Unbound will already be running, and the installer will have taken care of reconfiguring Raspbian to make use of it; but it’s not yet set up to provide service to other machines on the network, or to use 1.1.1.1.
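If you only want the version number from that help text, something like the following can pull it out. This is a minimal sketch that assumes the output contains a "Version x.y.z" line as in the sample above; the function name unbound_version is made up for illustration and is not part of Unbound.

```shell
# Hypothetical helper: reads `unbound -h` output on stdin and prints the
# version number from the first line containing "Version x.y.z".
unbound_version() {
    sed -n 's/^.*Version \([0-9][0-9.]*\).*$/\1/p' | head -n 1
}

# Usage on the Pi (append 2>&1 to `unbound -h` if your build prints help on stderr):
#   unbound -h | unbound_version
```
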

Configuring Unbound

The Raspbian package for unbound has a very simple configuration file at /etc/unbound/unbound.conf that includes any file placed into /etc/unbound/unbound.conf.d, so we need to add some config there.

First change directory and take a look at what’s already there:

cd /etc/unbound/unbound.conf.d
ls

There should be two files: qname-minimisation.conf and root-auto-trust-anchor-file.conf – we don’t need the former as it will be part of the config we introduce, so remove it with:

sudo rm qname-minimisation.conf

Create an Unbound server configuration file:

sudo bash -c 'cat >> /etc/unbound/unbound.conf.d/unbound_srv.conf \
<<UNBOUND_SERVER_CONF
server:
tls-cert-bundle: /etc/ssl/certs/ca-certificates.crt
qname-minimisation: yes
do-tcp: yes
prefetch: yes
rrset-roundrobin: yes
use-caps-for-id: yes
do-ip6: no
interface: 0.0.0.0
access-control: 0.0.0.0/0 allow
UNBOUND_SERVER_CONF'

It’s necessary to use ‘sudo bash’ and quotes around the content here as ‘sudo cat’ doesn’t work with file redirection.

These settings are mostly lifted from ‘What is DNS Privacy and how to set it up for OpenWRT‘ by Torsten Grote, but it’s worth taking a look at each in turn to see what they do (based on the unbound.conf docs):

- qname-minimisation: yes – Sends the minimum amount of information to upstream servers to enhance privacy.

- do-tcp: yes – This is the default, but better safe than sorry – DNS over TLS needs a TCP connection (rather than UDP that’s normally used for DNS).

- prefetch: yes – Makes sure that items in the cache are refreshed before they expire so that the network/latency overhead is taken before a query needs an answer.

- rrset-roundrobin: yes – Rotates the order of Round Robin Set (RRSet) responses according to a random number based on the query ID.

- use-caps-for-id: yes – Use 0x20-encoded random bits in the query to foil spoof attempts. This perturbs the lowercase and uppercase of query names sent to authority servers and checks if the reply still has the correct casing. Disabled by default. This feature is an experimental implementation of draft dns-0x20.

- do-ip6: no – Turns off IPv6 support – obviously if you want IPv6 then drop this line.

- interface: 0.0.0.0 – Makes Unbound listen on all IPs configured for the Pi rather than just localhost (127.0.0.1) so that other machines on the local network can access DNS on the Pi.

- access-control: 0.0.0.0/0 allow – Tells Unbound to allow queries from any IP (this could/should be altered to be the CIDR for your home network).
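As the last bullet says, allowing 0.0.0.0/0 is broader than a home network needs. Here is a rough sketch of generating tighter lines to use in its place. The 192.168.1.0/24 network is only an example value (substitute your own CIDR), and the helper name acl_lines is hypothetical.

```shell
# Hypothetical helper: print access-control lines that allow localhost
# plus a single home network CIDR passed as the first argument.
acl_lines() {
    printf 'access-control: 127.0.0.0/8 allow\n'
    printf 'access-control: %s allow\n' "$1"
}

# 192.168.1.0/24 is an assumed example network, not taken from the guide.
acl_lines 192.168.1.0/24
```

The two lines it prints would take the place of the access-control: 0.0.0.0/0 allow line in the server config.
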

Next configure Unbound to use Cloudflare’s DNS servers:

sudo bash -c 'cat >> /etc/unbound/unbound.conf.d/unbound_ext.conf \
<<UNBOUND_FORWARD_CONF
forward-zone:
name: "."
forward-addr: 1.1.1.1@853#one.one.one.one
forward-addr: 1.0.0.1@853#one.one.one.one
forward-addr: 9.9.9.9@853#dns.quad9.net
forward-addr: 149.112.112.112@853#dns.quad9.net
forward-tls-upstream: yes
UNBOUND_FORWARD_CONF'

There are two crucial details here:

- @853 at the end of the primary and secondary server IPs tells unbound to connect to Cloudflare using port 853, which is the secured end point for the service.

- forward-tls-upstream: yes – is the instruction to use DNS over TLS, in this case for all queries (name: “.”). Unbound also accepts forward-ssl-upstream as an alternate spelling of the same option.
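Each forward-addr value packs three things into one string: the server IP, the port after @, and a name after #. Going by the unbound.conf docs, that name is what the server's TLS certificate is checked against. The sketch below simply splits a value into those parts to make the layout visible; parse_forward_addr is a made-up helper, not an Unbound tool.

```shell
# Hypothetical helper: split a forward-addr value of the form IP@PORT#NAME
# into its three parts using plain shell parameter expansion.
parse_forward_addr() {
    addr=$1
    name=${addr#*#}      # everything after the '#'
    rest=${addr%%#*}     # everything before the '#'
    port=${rest#*@}      # everything after the '@'
    ip=${rest%%@*}       # everything before the '@'
    printf 'ip=%s port=%s tls-name=%s\n' "$ip" "$port" "$name"
}

parse_forward_addr '1.1.1.1@853#one.one.one.one'
```
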

Restart Unbound to pick up the config changes:

sudo service unbound restart

Testing

First check that the Unbound server is listening on port 53:

netstat -an | grep 53

Should return these two lines (not necessarily together):

tcp 0 0 0.0.0.0:53 0.0.0.0:* LISTEN
udp 0 0 0.0.0.0:53 0.0.0.0:*

Then try looking up google.com

dig google.com

The output should end something like:

;; ANSWER SECTION:
google.com. 169 IN A 172.217.4.174

;; Query time: 3867 msec
;; SERVER: 127.0.0.1#53(127.0.0.1)
;; WHEN: Wed Apr 04 14:46:09 UTC 2018
;; MSG SIZE rcvd: 55

Note that the Query time is pretty huge when the cache is cold[1]. Try again and it should be much quicker.

dig google.com

;; ANSWER SECTION:
google.com. 169 IN A 172.217.4.174

;; Query time: 0 msec
;; SERVER: 127.0.0.1#53(127.0.0.1)
;; WHEN: Wed Apr 04 14:46:09 UTC 2018
;; MSG SIZE rcvd: 55

Using it

Here’s where you’re somewhat on your own – you have a Pi working as a DNS server, but you now need to reconfigure your home network to make use of it. There are two essential elements to this:

- Make sure that the Pi has a known IP address – so set it up with a static IP, or configure DHCP in your router (or whatever else is being used for DHCP) to have a reservation for the Pi.

- Configure everything that connects to the network to use the Pi as a DNS server, which will usually be achieved by putting the (known from the step above) IP of the Pi at the top of the list of DNS servers passed out by DHCP – so again this is likely to be a router configuration thing.
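Once clients are pointed at the Pi, it's worth checking from another machine that the Pi actually answers, and how quickly. The sketch below pulls the query time out of dig's output; the helper name query_time_ms and the 192.168.1.2 address are assumptions for illustration only.

```shell
# Hypothetical helper: read dig output on stdin and print the query time in ms.
query_time_ms() {
    sed -n 's/^;; Query time: \([0-9][0-9]*\) msec.*$/\1/p'
}

# Usage from any client, assuming the Pi was given 192.168.1.2 (example address):
#   dig @192.168.1.2 google.com | query_time_ms
```

Running it twice for the same name should show the second lookup coming back much faster once the Pi's cache is warm.
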

Updates

10 Apr 2018 – Thanks to comments from Quentin and TJ I’ve added the access-control line to the server config. Also check out Quentin’s repo (and Docker Hub) for putting Unbound into a Docker container.

4 Jun 2018 – Modified the config to use #cloudflare-dns.com per comment from Daniel Aleksandersen (see this thread for background).

20 Jul 2018 – I was just trying to use the Pi that I first tested this on, and it was stubbornly refusing to work. The issue turned out to be time. The Pi hadn’t got an NTP sync when it came up, and was unable to establish a trusted connection with Cloudflare when the clock was too far off.

27 Apr 2021 – Updated configs for correct name validation.

Note

[1] Almost 4 seconds is ridiculous, but that’s what I’m getting with a Pi on a WiFi link to a US cable connection. From my home network it’s more like 160ms. I suspect that Spectrum might be tarpitting TCP to 1.1.1.1

Filed under: howto, networking, Raspberry Pi

Tags: 1.1.1.1, CloudFlare, DNS, privacy, Raspberry Pi, tls, Unbound

Using 1.1.1.1

TL;DR

One of the best features of Cloudflare’s new 1.1.1.1 DNS service is the privacy provided by DNS over TLS, but some setup is required to make use of it. I put Unbound onto the OpenWRT routers I use as DNS servers for my home network so that I could use it.

Background

Yesterday Cloudflare launched its public DNS service 1.1.1.1. It’s set up to be amazingly fast, but has some great privacy features too – Nick Sullivan tweeted some of the highlights:

The 1.1.1.1 resolver also implements the latest privacy-enhancing standards such as DNS-over-TLS, DNS-over-HTTPS, QNAME minimization, and it removes the privacy-unfriendly EDNS Client Subnet extension. We’re also working on new standards to fix issues like dnscookie.com

The thing that I’m most interested in is DNS over TLS, as that prevents queries from being snooped, blocked or otherwise tinkered with by ISPs and anybody else along the path; but to use that feature isn’t a trivial case of putting 1.1.1.1 into DNS config. So I spent a good chunk of yesterday upgrading the DNS setup on my home network.

Unbound

I’ve written before about my BIND on OpenWRT setup, which takes care of the (somewhat complex) needs I have for DNS whilst running on kit that would be powered on anyway and that reliably comes up after (all too frequent) power outages. Whilst it’s probably possible to configure BIND to forward to 1.1.1.1 over TLS the means to do that weren’t obvious; meanwhile I found a decent howto guide for Unbound in minutes – ‘What is DNS Privacy and how to set it up for OpenWRT‘ – thanks Torsten Grote.

Before I got started both of the routers needed to be upgraded to the latest version of OpenWRT, as although Unbound is available for v15.05.1 ‘Chaos Calmer’ it’s not a new enough version to support TLS – I needed OpenWRT 17.01.4, which has Unbound v1.6.8. The first upgrade was a little fraught as despite doing ‘keep config’ the WRTNode in my garage decided to reset back to defaults (including dropping off my 10. network onto 192.168.1.1) – so I had to add a NIC on another subnet to one of my VMs to rope it back in. The upgrades also ditched the existing BIND setup, but that was somewhat to be expected, and I had it all under version control in git anyway.

My initial plan was to put Unbound in front of BIND, but after many wasted hours I gave up on that and went for BIND in front of Unbound. In either case Unbound is taking care of doing DNS over TLS to 1.1.1.1 for all the stuff off my network, and BIND is authoritative for the things on my network. I’m sure it would be better for Unbound to be up front, but I just couldn’t get it to work with one of my BIND zones – every single A record with a 10. address was returning an authority list but no answer. I even tried moving that zone to Knot, which behaved the same way.

Here are the config files I ended up with for Unbound:

do-tcp: yes
prefetch: yes
qname-minimisation: yes
rrset-roundrobin: yes
use-caps-for-id: yes
do-ip6: no
do-not-query-localhost: no #leftover from using Unbound in front of BIND
port: 2053

forward-zone:
name: "."
forward-addr: 1.1.1.1@853#cloudflare-dns.com
forward-addr: 1.0.0.1@853#cloudflare-dns.com
forward-addr: 9.9.9.9@853#dns.quad9.net
forward-addr: 149.112.112.112@853#dns.quad9.net
forward-ssl-upstream: yes

and here’s the start of my BIND named.conf (with the zone config omitted as that’s particular to my network and yours will be different anyway):

options {
    directory "/tmp";
    listen-on-v6 { none; };
    forwarders {
        127.0.0.1 port 2053;
    };
    auth-nxdomain no; # conform to RFC1035
    notify yes; # notify slave server(s)
};
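With BIND on port 53 forwarding to Unbound on port 2053, each layer can be queried on its own, which helps when working out which one is misbehaving. This is a sketch rather than part of my actual setup: it assumes dig is installed on the router and that both servers answer on 127.0.0.1, and the function names are made up.

```shell
# Hypothetical helpers for querying each layer directly with dig.
# Unbound listens on the port set by 'port: 2053' in its config.
query_unbound() { dig @127.0.0.1 -p 2053 "$1" +short; }
# BIND answers on the default DNS port.
query_bind()    { dig @127.0.0.1 -p 53 "$1" +short; }

# Usage on the router:
#   query_unbound example.com
#   query_bind example.com
```
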

Could I have just used BIND?

Probably, but it fell into ‘life’s too short’, and I expect Unbound works better as a caching server anyway.

Could I have just used Unbound?

If my DNS needs were just a little simpler then yes. Having started out without the ability to do authoritative DNS Unbound has become quite capable in this area since I last looked at it; but it doesn’t yet do CNAMEs how I’d like them, and it’s a definite no for my SSH over DNS tunnelling setup (or at least being able to test that at home).

Could I have just used Knot?

I tried the Knot ‘tiny proxy’ but couldn’t get it to work. The 2.3 version of Knot packaged for OpenWRT is pretty ancient, and although this is the DNS server that Cloudflare use as the basis for 1.1.1.1 it’s short on howtos etc. beyond the basic online docs.

Conclusion

This is the DNS setup I’ve been wanting for a long while. I might lose a little speed with the TLS connection (and the TCP underneath it), but I gain speed back with Cloudflare being nearby, and Unbound caching on my network; I also gain privacy. Plain old DNS over UDP has been one of the most glaring privacy issues on the Internet, and I shudder to think of how that’s been exploited.

Filed under: howto, networking

Tags: 1.1.1.1, Bind, CloudFlare, DNS, OpenWRT, privacy, Unbound

T-Shirt Sizes and the Copycloud

TL;DR

T-Shirt sizes are frequently used to create the VM types and cost structure for private clouds, but if the sizing isn’t informed by data this can lead to stranded resources and inefficient capacity management. It’s the antithesis of dynamic capacity management where every VM is sized according to the resources it actually consumes, ensuring that as much workload as possible fits onto the minimal physical footprint.

Background

A little while ago I wrote about Virtual Machine Capacity Management, and I won’t repeat the stuff about T-shirt sizing and fits. That post was aimed at the issues with public clouds. This post is about what happens if the idea of T-Shirt sizing is applied to private environments.

Copying T-shirt sizes is one of the ways that private clouds pretend that they’re like public clouds, but it’s a move that throws away the inherent flexibility that’s available to fit allocations to employment and make best use of the physical capacity. It’s worth noting that the problem gets exacerbated by the fact that those private clouds generally lack T-shirt ‘fits’ found in the different instance type families of public clouds. A further complicating factor is where T-shirt sizes are arbitrary and often aligned to simple units rather than being based on practical sizing data.

A look at the potential issue

If we size on RAM and say that Medium is 1GB and Large is 2GB then any app running (say) a 1GB Java VM is going to need a Large even though in practice it will be using something like 1.25GB RAM (1GB for the Java VM + a little overhead for the OS and embedded tools). In which case every VM is swallowing 0.75GB more than it needs – effectively we could do buy 5 get 3 free, since the 10GB allocated to five Larges would hold eight right-sized 1.25GB VMs.
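The arithmetic behind that example can be checked in a few lines. This is just a sketch of the sums using the figures from the paragraph above, converted to MB so that the shell can stay in integers.

```shell
# Sizes in MB to keep the arithmetic in integers.
large_mb=2048        # Large T-shirt size (2GB)
used_mb=1280         # 1GB Java VM plus overhead (1.25GB)

# Memory allocated to each VM but never used.
wasted_mb=$(( large_mb - used_mb ))
# How many right-sized VMs fit in the memory allocated to five Larges.
fit_in_five=$(( 5 * large_mb / used_mb ))

echo "wasted per VM: ${wasted_mb}MB"
echo "right-sized VMs in the space of 5 Larges: ${fit_in_five}"
```
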

Could a rogue app eat all my resources?

The ‘rogue app’ thing is nothing but fear, uncertainty and doubt (FUD) to justify not using dynamic capacity management. Such apps would be showing up now as badly performing (due to exhausting the CPU or RAM allocations that constrain them), so if there were potential rogues in a given estate they’d be obvious already. Even if we do accept that there might be a population of rogues, if we take the example above we’d have to have more than 3/8 of the VMs being rogue to ruin the overall outcome, and that’s a frankly ridiculous proposition.

Showing a better way

The way ahead here is to show the savings, and this can be done using the ‘watch and see’ mode present in most dynamic capacity management platforms. This allows for the capture of data to model the optimum allocation and associated savings – so that’s the way to put a $ figure onto how arbitrary T-shirt sizing steals from the available capacity pool.

T-Shirts can be made to fit better

If T-Shirts still look desirable to simplify a billing/cost structure then the dynamic capacity management data can be used to determine a set of best (or at least better) fit sizes versus unit based. So returning to the example above Medium might be 1.25GB rather than 1GB, and then every VM running a 1GB Java heap can fit in a Medium.
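To make the better-fit idea concrete, here's a sketch that picks the smallest size covering a VM's observed usage. It only knows the two sizes from the example (a data-informed 1.25GB Medium and a 2GB Large); the function name pick_size is hypothetical and isn't from any capacity management product.

```shell
# Hypothetical helper: pick the smallest T-shirt size (limits in MB) that
# covers the observed usage passed as the first argument.
pick_size() {
    if [ "$1" -le 1280 ]; then
        echo Medium          # data-informed Medium of 1.25GB
    elif [ "$1" -le 2048 ]; then
        echo Large           # Large stays at 2GB
    else
        echo "no fit"
    fi
}

pick_size 1280   # the 1GB Java VM plus overhead
```
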

So why do public clouds stick to standard sizes?

Not all public clouds force arbitrary sizing – Google Compute Engine has custom machine types. It’s also likely that the sizes and fits elsewhere are based on extensive usage data to provide VMs that mostly fit most of the time.

That said, one of the early issues with public cloud was ‘noisy neighbour’ and so as IaaS became more sophisticated the instance types became about carving up a chunk of physical memory so that it was evenly spread across the available CPU cores (or at the finest grain hyperthreads).

Functions as a Service (FaaS) (aka ‘Serverless’) changes the game by charging for usage rather than allocation, but it achieves that by taking control of the capacity management bin packing problem. Containers as a Service models have so far mostly shown through the cost structure of underlying VMs, but as they get finer grained it’s possible that a model that carves between allocation and usage might emerge.

Conclusion

T-Shirt sizes are a blunt instrument versus the surgical precision of good quality dynamic capacity management tools. At their worst they can lead to substantial stranded capacity and corresponding wasted resources. There can be a place for them to simplify billing, but even then finer grained capacity management leads to finer grained billing and savings.

Filed under: cloud

Tags: capacity, sizing, T-shirt, VM

My MBA

TL;DR

I started out doing an MBA as it felt like a necessary step in pushing my career forward. The most important part was getting my head around strategy, and by the time that was done the journey had become more important than the destination.

The motivation

I’d not been working at Credit Suisse[1] for long when I saw a memo announcing the Managing Director selection committee. It had little mini bios for each of the committee members – the great and the good of the company, and as I read through them it was MBA, MBA, MBA, PhD in Economics, MBA… If my memory serves me well there were two people who had neither an MBA nor a PhD in Economics (and a couple who had both). I took it as a clear signal for what it took to get along in that environment. Much as I love economics from my time when I took it as a high school option I didn’t have the stomach for 9 years of study to get a (part time) PhD, so an MBA was the obvious choice.

Years later…

I found myself in San Francisco for the first time. I’d reached out to John Merrells, as at the time he was one of the few people I knew in the area. John had double booked himself for the evening we’d planned to get together, so he invited me along to a CTO dinner with the promise of meeting some interesting people. I barely saw John that evening, and instead found myself at a table with Internet Archive founder Brewster Kahle and future Siri creator Tom Gruber. At some stage the conversation turned to how MBAs were destroying corporate America, and there came a point where I mentioned that I’d done an MBA. Brewster rounded on me asking:

Why would a smart guy like you waste your time doing THAT?

The best response I could think of at the time was:

Know your enemy.

Picking a course

A career break to do a full time MBA pretty much never crossed my mind. I’d only just left the Navy, just left the startup that I’d joined after the Navy, and my wife was pregnant. I had to put some miles on the clock in my new IT career, and keep earning. I was however spending three evenings a week away from home in London, and I had 4 longish train rides every week, which presented some good chunks of ‘spare’ time for study.

The Open University course was the first that I looked at, and although I did my due diligence in looking at alternatives it was the clear winner. It was inexpensive, accessible, and (at the time) the only distance learning course with triple accreditation. It was only later that I properly came to appreciate just how forward thinking much of the syllabus was – pretty much the opposite of the backward looking formulaic drivel that the San Francisco CTOs complained about.

Foundation

My first year was spent on a ‘foundations of senior management’ module that introduced marketing, finance, HR and change management. A key component of the course was to contextualise theory from the reading materials in the everyday practice of the students; so many of my Tutor Marked Assessments (TMAs) were about applying course concepts to the job I was in.

In retrospect I found this course interesting (especially the stuff on marketing) but not particularly challenging, but the hard part came next.

Learning to think strategically is hard

The next module was simply called Strategy, and was the only compulsory module after the foundation. It was also by far the most important part of the course, as doing it (and especially the residential school component of it) literally made me think differently, and it was a tough slog to do that.

We spent a good deal of time looking at stuff from Porter and Mintzberg and Drucker, but the point wasn’t to latch onto a simple tool and beat every problem down with it. The point was to critically evaluate all of the tools at hand, use them in context when they were useful, and see the bigger picture. If only we’d had Wardley maps to add to the repertoire.

One of the things that we spent a lot of time on was the shaping of organisation culture. My preferred definition of culture, ‘the way we do things around here’, comes from that course, and the TMA I’m most proud of from the whole course was ‘Can Mack ‘the knife’ carve CSFB a new culture’ where I looked at the methods, progress and risks involved in John Mack’s attempts to change the organisation I worked for[2].

Options

The final half of the course was three six month long optional modules. I picked finance, technology management and knowledge management. The first two were frankly lazy choices given that I was a technology manager working in financial services, but I still learned a good deal in both. Knowledge management was more of a stretch, and made me think harder about many things.

Along the way, the journey became more important than the destination

I’d embarked on my MBA for the certificate at the end, but as I completed the course it really didn’t matter – I didn’t bother going to a graduation ceremony.

From a learning perspective the crucial part had been getting half way – completing the strategy module.

From a career perspective just doing the course had pushed me into a new place. I’m forever grateful to Ian Haynes for his support throughout the process. He signed off on CSFB paying the course fees, and allowing me time off for residential schools[3]. More importantly though, he put opportunity in my way. When our investment bankers asked him to join a roadshow with London venture capital (VC) firms his answer was, ‘I’m too busy – take Chris instead’. This wasn’t a simple delegation – I was three layers down the management/title structure. Through the roadshow and various follow ups I got to know the General Partners at most of the top London VCs, our own tech investment banking team, and a large number of startup founders.

The thing that you miss out on by not going to a ‘good school’

Since finishing my MBA I’ve got to spend time at some of the world’s top business schools – LBS, Judge, Said, Dartmouth, and got to meet the people who studied there and talk about their experiences. Without fail they talk about ‘the network’ – the people they met on the course and the alumni who continue to support each other. The cost in time and treasure is largely about joining an exclusive club. Of course the Open University has its own network and alumni, but it’s not the same.

That said – the opportunity cost of going to a top school (especially if it’s full time) is not to be underestimated. Perhaps it’s a shame that people generally only get to learn about discounted cash flow analysis once they’re on the course.

Looking back, the one thing I’d change would be starting earlier. I had time on my hands whilst at sea in the Navy that could have been spent on study that was instead frittered away on NetHack ascensions.

Did ‘know your enemy’ help?

Absolutely yes.

I’ve spent pretty much the whole of my career as the ‘techie’, often treated with scorn by ‘the business’ – whether that’s Executive Branch officers in the Navy, or traders and bankers in financial services etc. The MBA gave me the tools and knowledge to not be bamboozled in conversations about strategy or finance or marketing or change; and that’s been incredibly helpful.

Why am I writing about this now?

I didn’t start blogging until a few years after I’d completed my MBA, so it’s not something that I’ve written about in the past (other than the occasional comment on other people’s posts). My post on Being an Engineer and a Leader made me think about how I’ve developed myself more broadly, and the MBA journey was a crucial part of that.

Conclusion

If you want to level up your career then a part time MBA is a great way to equip yourself with a broad set of tools useful to a wide variety of business contexts. Even if you don’t ultimately choose to change track or break through a ceiling, the knowledge gained can lead to a more interesting, well rounded and fulfilling career.

Notes

[1] Then Credit Suisse First Boston (CSFB).

[2] To misquote Scooby Doo – he would have gotten away with it if it wasn’t for those damn Swiss. Most strong cultures in company case studies come from founders (HP, Apple etc.) but Mack had nearly pulled off a culture change at CSFB when the board pulled the plug on him for suggesting a merger with Deutsche Bank. He’d even figured out a way to deal with the high roller ‘cowboys’ I fretted would take off once the good times returned to banking with the creation of the Alternative Assets Group (essentially a hedge fund within the bank where the ‘cowboys’ would get to play rodeo without disrupting the rest of the organisation).

[3] I think the bank got a good deal – for the same cost in money and time as sending me on a week long IT course each year they got a lot more effort and learning.

Filed under: culture

Tags: MBA, strategy

Being an Engineer and a Leader

TL;DR

Leadership and management are distinct but interconnected disciplines, and for various reasons engineers can struggle with both. My military background means that I’ve been fortunate enough to go through a few passes of structured leadership training. Some of that has been very helpful, some not so much. Engineers want to fix things, but working with other humans to make that happen isn’t a simple technical skill as it requires careful understanding of context and communications – something that situational leadership provides a model for.

Background

A couple of things have had me thinking about leadership in the past week. First there was Richard Kasperowski’s excellent ‘Core Protocols for Psychological Safety'[1] workshop at QCon London last week. He asked attendees to think about the best team we’ve ever worked in, and how that felt. Then there was Ian Miell’s interview on the SimpleLeadership podcast that touched on many of the challenges involved in culture change (that goes with any DevOps transformation).

My early challenge

I joined the Royal Navy straight from school, going to officer boot camp at Britannia Royal Naval College (BRNC) Dartmouth. I did not thrive there, and ran some risk of being booted out. On reflection the heart of the problem was that I was surrounded by stuff that was broken by design, and the engineer in me just wanted to fix everything and make it better.

A picture taken by my mum following my passing out parade at Dartmouth

I was perceived as a whiner, and whiners don’t make good leaders. The staff therefore had concerns about my leadership abilities[2].

One of Amazon’s ‘Leadership Principles’[3] appears to be expressly designed to deal with this:

Have Backbone; Disagree and Commit

Leaders are obligated to respectfully challenge decisions when they disagree, even when doing so is uncomfortable or exhausting. Leaders have conviction and are tenacious. They do not compromise for the sake of social cohesion. Once a decision is determined, they commit wholly.

Basically – don’t whine once the decision has been taken – you’ll just be undermining the people trying to get stuff done.

My opportunity for redemption

Because I was so bad at leadering the Navy sent me for additional training on the Petty Officers Leadership Course (POLC) at HMS Royal Arthur (then a standalone training establishment in Corsham, Wiltshire). Dartmouth had spent a few days teaching ‘action centred leadership’ (aka ‘briefing by bollocking’) – it was all very shouty and reliant on position power (which of course officers have). POLC was four weeks of ‘situational leadership’, where we learned to adapt our approach to the context at hand. Shouty was fine if there actually was a fire, but probably not appropriate most of the rest of the time. I learned two very important lessons there:

- Empowerment – seeing just how capable senior rates (Navy terminology for NCOs) were, it was clear that in my future job as their boss I mostly had to get out of their way.

- Diversity – the course group at POLC was pretty much as diverse a group as possible in the early 90s Royal Navy. We had people from every branch, of every age, and at almost every level[4]. It was here that my best team ever experience referenced above happened, which in some ways is strange as we formed and reformed different teams for different tasks across the four week course. One thing that made it work was that we each left our rank badges outside the gate, as we were all trainees together. Over the duration of the course the diversity of backgrounds and experience(s) really helped to allow us as a group to solve problems together with a variety of approaches.

Putting things in practice

Years after POLC, when I made it to my first front line job, an important part of my intro speech to new joiners was, ‘my only purpose here is to make you as good as you can be’. This was very powerful: my department pretty much ran itself, I provided top cover and dealt with the exceptions, and my boss was able to concentrate on his secondary duties and getting promoted. Pretty much everybody was happy and productive, and along with Eisenhower’s prioritising work technique[5] I had an easy time (which became clear when it came to comparing notes with my peer group who in some cases tried to do everything themselves).

Influence and the (almost) individual contributor

It turns out that first front line job at sea with a department of 42 people was the zenith for my span of control in any traditional hierarchical management context. I’ve continued to be a manager since then, but typically with only a handful of direct reports and little to no hierarchical depth. In that context (which is pretty typical for modern technology organisations) it turns out that leadership can be much more important than management. Such leadership is about articulating a vision (for an outcome to be achieved) and providing the means to get there (which more often than not is about removing obstacles rather than paving roads).

This is where engineers can show their strength, because the vision has to be achievable, and engineers are experts in understanding the art of the possible. From that understanding the means to getting there (whether that’s paving roads or removing obstacles) can be refined into actionable steps towards achieving outcomes. The Amazon technique of working backwards codifies one very successful way of doing this.

The point here is that leaders don’t need the position power of managers to get things done, and that situational leadership presents a better model for understanding how that works (versus action centred leadership).

Conclusion

Engineers typically have an inherent desire to fix things, to make things better, and early on in their careers this can easily come across badly. An effective leader understands the context that they’re operating in, and the appropriate communications to use in that context, and situational leadership gives us a model for that. Applying the techniques of situational leadership provides a means to effective influence in modern organisational structures.

I was very lucky to be sent on Petty Officers Leadership Course – it was a career defining four weeks.

Notes

[1] Google have found that psychological safety is the number one determinant of high performing teams, so it’s a super important topic, which is why I wanted to learn more about the core protocols. This is also a good place to shout out to Modern Agile and its principles, and also Matt Sakaguchi’s talk ‘What Google Learned about Creating Effective Teams’.

[2] In retrospect things would have been much easier if somebody (like my Divisional Officer) had just sat me down and said ‘this is a game, here are the rules, now you know what to do to win’, but it seems that one of those rules is nobody talks about the rules – that would be cheating.

[3] At this point it’s essential to mention Bryan Cantrill’s must watch Monktoberfest 2017 presentation ‘Principles of Technology Leadership’, where he picks apart Amazon’s (and others’) ‘principles’.

[4] Whilst ‘Petty Officer’ is there in the course title, attendees ranged from Leading Hands to Acting Charge Chiefs (who needed to complete the course to be eligible for promotion to Warrant Officer).

[5] When I first heard about this technique it was ascribed to Mountbatten (I’m unclear whether it was meant to be Louis Mountbatten, 1st Earl Mountbatten of Burma or his father Prince Louis of Battenberg) – the (almost certainly apocryphal) story being that as a young officer posted to a faraway place he’d diligently reply to all his correspondence, which took an enormous amount of time. On one occasion the monthly mail bag was stolen by bandits, leading to much worry about missed returns. Later it transpired that very few of those missed returns were chased up – showing that almost all of the correspondence was unnecessary busy work that could be safely ignored. It’s interesting to note here that POLC was created at the behest of Lord Louis Mountbatten and Prince Philip was one of the first instructors.

Filed under: CTO

Tags: engineering, leadership, psychological safety

Gemini – one week on

It’s been a week since I wrote about my first impressions, so here’s an update. Nothing about those first impressions has changed, but I’ve learned and tried a few more things.

Planet App Launcher

There’s a Planet button to the left of the space bar that brings up a customisable app launcher, and it’s pretty neat. The main thing I use it for is forcing portrait mode, but sometimes it’s also handy for apps.

RDP

I already wrote about how good the Gemini is for SSH, but it’s also great for Windows remote desktops (over SSH tunnels), because there’s no need to pop up a virtual keyboard over half the screen (and RDP definitely is a landscape mode app).

It’s a talking point

I was using my Gemini a fair bit during the couple of days that I spent at QCon London this week, and whilst some people studiously ignored it, and others had it pushed in their face because I’m so keen to show it off, there was a fair bit of ‘what’s that?’.

It does a good job of replacing my laptop

My laptop came out of my bag once during QCon (for me to attack something that I knew would need a desktop browser and my password manager) – in a pinch I could have used RDP for that, but since I did have my laptop with me…

The lesson here is that I’ll likely carry my laptop less.

The battery lasts well enough

I still don’t feel like I’ve pushed the envelope here, but the Gemini has been with me for some long days, and made it through.

X25

Apparently all production Geminis were supposed to be made with the X27 System on Chip (SoC), but it seems the first 1000 slipped through with the X25. I’m in the not really bothered camp on this, whilst I get the impression that some owners are fuming and feeling betrayed. For me this will just be something that clearly identifies my Gemini as one of the first. At some stage in the future this might bite me as software assumes X27ness, but at that stage I might be in need of a newer/better one anyway.

I’d love a 7″ Gemini

The Gemini hasn’t displaced my much loved (and now very long in the tooth) Nexus 7 2013 (LTE) from my life, and one reason for that is screen size. A Gemini with more screen and a slightly less cramped keyboard (like my old Sharp PC 3100) would be a thing of beauty.

I’ve still not tried dual boot to Linux

and that probably won’t change until things mature a little (or I find a desperate need).

Filed under: Gemini

Tags: Gemini, RDP, X25

Last night we celebrated the 10th anniversary of CloudCamp London by celebrating the 40th anniversary of The Hitchhiker’s Guide to the Galaxy (HHGTG). It was a lot of fun – probably the best CloudCamp ever.

I can’t say that I was there from the beginning, as I sadly missed the first CloudCamp London due to other commitments. I have been a regular attendee since the second CloudCamp, and at some stage along the way it seems that I’ve been pulled into the circle of Chris Purrington, Simon Wardley and Joe Baguley to be treated as one of the organisers. The truth is that Chris Purrington has always been the real organiser, and Simon, Joe and I just get to fool around front of house to some degree. I should also shout out to Alexis Richardson, who was one of the original CloudCamp London instigators, but at some stage found better uses for his time (and developed a profound dislike for pizza).

CloudCamp has been amazing. Simon taught us all about mapping. Kuan Hon taught us all about General Data Protection Regulation (GDPR) long before it was cool. It was where I got to meet Werner Vogels for the first time (when he just casually walked in with some of my colleagues). It’s where I first met Joe Weinman and many others who’ve ended up leading the way in our industry. It’s been and I hope will continue to be a vibrant community of people making stuff happen in cloud and the broader IT industry. Meetups have exploded onto the scene in the past ten years giving people lots of choice on how they can spend their after work time, so it’s great that the CloudCamp London community has held together, kept showing up, kept asking interesting questions, and kept on having fun.

Simon asked us to vote last night to rename CloudCamp to ServerlessCamp, as (in his view at least) that’s the future. With one exception we all chose to keep the broader brand that represents the broad church of views and interests in the community.

Here’s my presentation from last night, which in line with the theme was a celebration of Douglas Adams’s genius:

In keeping with the theme I wore my dressing gown and made sure to have a towel:

It’s been a great first decade for CloudCamp London – here’s to the next one.

Filed under: cloud, presentation

Tags: cloud, cloudcamp, community, HHGTG

Gemini first impressions

TL;DR

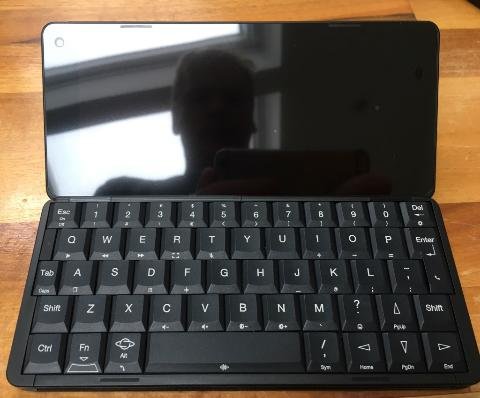

The Planet Computers Gemini is a 6″ Android (and Linux) clamshell device with a keyboard by the same designer who did the Psion Series 5. The keyboard enables on the move productivity with things like SSH that just isn’t possible with just a touch screen.

Background

I was lucky enough to hear about the Gemini very shortly after its crowd funding launch on IndieGoGo, and placed order 35 for the WiFi+LTE version.

Unboxing

The box is pretty nice, though (not shown) mine had split on one corner in transit. Everything seemed fine inside though.

The keyboardy welcome message is neat (even if it’s superfluous).

It’s supplied with a charger and USB A-C cable that has to be plugged into the left hand side to provide power. Looking at the charger specs I’d expect it to be quicker than a standard 5V USB charger.

The device itself came shrouded in a cover where the adhesive was just a little too sticky. Look very closely and it’s possible to see some spots on the screen (protector?) where some specks of dust appear to be trapped.

Setup was easy, and in line with other Android devices I’ve got for myself and family members over the last few years. It just worked out of the box.

The keyboard

I’m using the Gemini to write this. It works best when placed on a flat surface, but it’s workable when held.

As per Andrew Orlowski’s review at The Register the main issue is the space bar, which needs to be hit dead centre to work properly. I’m also just a tiny bit thrown off by the seemingly too high placement of the full stop on its key, but even as I type this I get the urge to go faster, and the whole thing has a lot more speed and accuracy than even the best virtual keyboards I’ve used. The addition of buzz feedback to the natural mechanical feedback is also very helpful in letting me know when I’ve hit a key properly.

The use of the function key to provide three things on many buttons works well, and my only complaint there might be that @ deserves better than to be a function rather than a shift.

One crucial thing is the availability of cursor keys, which makes precise navigation of text work in a way that just isn’t possible with just a touch screen. It’s also worth mentioning how natural the interplay between touch actions and keyboard actions is. I’ve had touch enabled Windows laptops for years, and approximately never use the touch screens, but they have Trackpoints and Trackpads that move a mouse pointer around; Android is of course more naturally designed for touch.

Apps in landscape

It’s clear that whilst Android devices have pivoted between portrait and landscape since forever nobody tests their app in landscape. Buttons are often half obscured at the bottom of the screen (Feedly) or selection areas get in the way of vital output (Authy). Even when things do work landscape is often just an inefficient use of screen real estate, which would be why people don’t use/test it.

It works fine in WordPress though :)

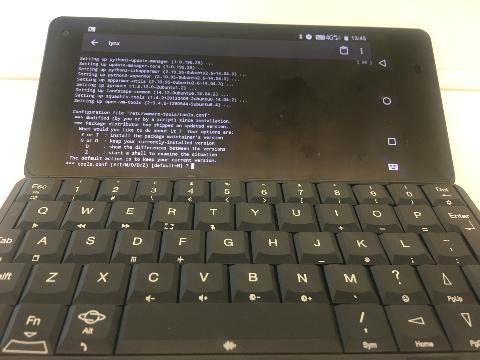

It’s great for SSH

I’ve used Connectbot with my Android devices for some time, pairing it up with Hackers Keyboard, but the Gemini keyboard is a world better for driving a command line interface. This feels like what the Gemini was born to do.

Linux

I’ve not yet had the chance to try any of the supported Linux distros, and Android offers almost everything I want. I’d expect Linux to be useful for security testing and coding whilst offline, but that’s more of a break glass in case of emergency thing, and I’d be surprised if it became a daily drive thing for me.

I haven’t even tried to use it as a phone

The Gemini can be used as a phone, but that’s not what I bought it for, and the SIM I have in it has a data only plan.

Size

I noted with some frustration that the Gemini is just fractionally too long for the in flight device restrictions that were imposed on some routes (and threatened for many more) last year. It’s a shame, because in a pinch I think I could get through a week or two with just the Gemini (and no laptop) in order to avoid checking bags with devices in.

More prosaically it does (kind of) fit in a jeans pocket, though not comfortably, and not with the other phone(s) I’m still obliged to cart around. It’s more of a jacket pocket thing, so in day to day use I’m more likely to substitute it for my (now somewhat venerable) Nexus 7 (2013) tablet than my Android phone (and in practice I can see myself using the Gemini for productivity stuff whilst watching TV/movies/Netflix on the Nexus).

Performance

I haven’t noticed performance, which means it’s (more than) good enough. @PJBenedicto asked on Twitter ‘How’s the operating temperature after prolonged use?’ and that’s also something I’ve not noticed – it runs cool (though I haven’t been watching movies on it – yet).

Conclusion

I had high expectations of the Gemini and it hasn’t disappointed. It’s great to have something so small with a useful keyboard, and I can see it transforming some aspects of my on the move productivity.

Updates

3 Mar 2018 – I think I’ve now found the first thing that Planet Computers messed up badly and will have to spend some time fixing in future hardware – noise isolation on the headphone port. Shortly after pressing publish on this post I downloaded some Netflix episodes and tried watching, and the noise isolation on the headphone port is just awful. It’s not really noticeable when watching something, but with the headphones plugged in with nothing playing you can listen to the Gemini crunching numbers as you move around the UI, and that’s not a good thing.

Filed under: Gemini, review

Tags: android, Gemini, keyboard, Planet Computing, review