Originally posted internally 15 Jul 2016:

This is something I’ve been meaning to do since TechCom, so it’s a little overdue. My intention going forward is to have a monthly cadence.

Since I have some involvement in build, sell, deliver I’ll organise along those lines:

Build

‘Modern Platform’ is due before the Technical Design Council (TDC) next week, which will be the culmination of months of work with our partners. The intention is to have a basis for all of our offerings (cloud, workplace, big data and analytics, and the platform itself) that can start small (and cheap) and scale well. Our original target was to be able to do a 100 Virtual Machine (VM) minimum footprint at $1200 per VM. We’ve not quite hit the low end on scale – it’s 150 VMs – but we’ve exceeded the price objective, as they come in at $800/VM (so arguably the target is hit – it just comes with spare capacity). This is going to get us into smaller accounts that we couldn’t reach before, but also allows us to start small and scale up in larger opportunities.
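The ‘arguably the target is hit’ point can be checked with the figures quoted above. This is just a quick sketch of the arithmetic, and the variable names are mine rather than anything from the programme:

```python
# Figures quoted in the paragraph above; the names are illustrative only.
target_vms, target_price = 100, 1200   # original target: 100 VMs at $1200/VM
actual_vms, actual_price = 150, 800    # achieved: 150 VMs at $800/VM

target_entry_cost = target_vms * target_price
actual_entry_cost = actual_vms * actual_price

print(f"Target minimum footprint: ${target_entry_cost:,}")
print(f"Actual minimum footprint: ${actual_entry_cost:,}")
print(f"Extra VMs for that entry cost: {actual_vms - target_vms}")
```

The entry cost works out the same either way, which is why the larger minimum footprint reads as spare capacity rather than a missed target.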

Some good progress has been made in our open source efforts around our offerings with the release of AWS and Azure adapters for Agility and also a Terraform plugin. Coming soon will be adapters for Kubernetes and AWS CloudFormation. Open Sourcing these things allows our customers to engage without having to ask permission, and creates an opportunity for co-creation with a community of customers forming around our offerings.

Sell

The first version of our Key Transformation Shift (KTS) documents (aka Digital Shifts) happened at the end of last year. Since TechCom the team has been busy revising all of the documents with the main emphasis being on highlighting the interlock between each shift, and also the interlock to cyber. The version 2 documents are now in the final stages of review before publication, and there will be a big marketing push around the Digital Shifts starting in September. Along the way there will be Town Halls for each of the shifts. The Integrated Digital Services Management (IDSM) Town Hall happened already, but if you missed it then catch up with the recording on YouTube.

Over the past few weeks I’ve been pulled into a number of pursuits, ranging from a services company in Helsinki to a comms provider in London. They’re all very interesting, and each presents its own challenges. The biggest issue however seems to be (as SJ put it) ‘if only CSC knew what CSC knows’. The digital shifts and offerings are all great, but sometimes our sales force needs stuff that’s easier to digest for customers, and we can all help make that easier to come by.

Deliver

The big news in delivery is the roll out of the new GIS organisational structure and operating model (to align with build, sell, deliver). In particular the Offering Delivery Function (ODF) is taking shape under JH’s leadership. The formation of the Operations Engineering (OE) group has been keeping PF and me busy, but the new OE lead will be starting next month. OE will bring together automation skills from the Automation Centre of Excellence (ACoE) and data skills from Governance Analytics Metrics and Business Intelligence (GAMBI) along with a team of data scientists that we’re recruiting to replace the services we’ve been getting from CKM Advisors with an organic capability.

As we shift our delivery to a more infrastructure as code based model it means that ops people need to get (or sharpen up) dev skills – using source code management, configuration management and continuous integration tools. To that end we’ve created the Infrastructure as Code boot camp, which is an instructor facilitated workshop aimed at POD staff (and GIS managers). I’ve personally been getting around the UK PODs, though I was very pleased to see DE and MH running a second generation course in Chorley and then CN volunteering to run a third generation course – that’s how it’s supposed to work so that we can get scale across the organisation. Meanwhile CK has been busy getting around US PODs, and is now part way through a tour around APAC visiting the PODs there. If you’re in India watch out for him at a POD near you next week.

Retrospective

Sadly I didn’t get into a regular cadence for these newsletters as other commitments got in the way of writing time.

I ended up taking on the x86 and Distributed Compute P&L as Offering General Manager, which allowed me to shepherd Modern Platform through the Platform Lifecycle Management (PLM) process to early release. It was very gratifying to see VMware CEO Pat Gelsinger talking about one of the first Modern Platform deployments in his Dell EMC World keynote last week; and the ‘turnkey on day 1’ mantra has become a big part of what I’m working on between build and deliver in the new organisation.

The Agility adapters for Kubernetes and Cloud Formation were released, and I noted an improvement in the quality of README.md documents where I wasn’t having to do a pull request against every one before we switched the repo from private to public.

The second set of digital shifts papers was published, with much better integration between each of them, and a cyber/security thread running through them all. They’re now undergoing another revision to align with the new DXC Technology offering families, and with a bunch of new in job CTOs holding the pen.

We replaced the offering delivery function (ODF) with offering delivery and transformation (OD&T) as we became DXC Technology, but the general direction remains the same. Similarly operations engineering (OE) became operations engineering and excellence (OE&E).

I ran the last infrastructure as code boot camp in the old workshop format in the Vilnius POD in the final week of CSC, and tried the new Katacoda based approach on attendees there. The whole workshop has now been migrated to Katacoda to improve reach and scale, and I hope to have everybody in delivery (and elsewhere in the company) complete basic courses in collaborative source control, config management and CI/CD (managers included – the EVP for Global Delivery made his first pull request whilst running through a Katacoda scenario a few days ago).

Filed under: DXC blogs | Leave a Comment

Tags: build, deliver, digital, modern platform, sell

Originally posted internally 26 May 2016:

Last week I called in on Brad Meiseles, the senior director of engineering responsible for VIC. It’s a product I’ve been watching since the earliest rumblings around what Project Bonneville did with the VMfork technology that had originally been envisaged as something for quicker launching virtual desktop infrastructure (VDI).

VIC has taken a while to hatch, as it’s a complete rewrite of what was done with Bonneville, but it might be one of the most important things to emerge from VMware this year. Containers are a big threat to VMware and all of the vSphere (and associated management) licenses they sell, but VIC gives VMware the opportunity to set up an enterprise toll gate to containers because it gives the security of VMs with hardware trust anchors at the same time as giving the quick launch, low footprint and packaging ecosystem of containers.

There’s just one problem. The underlying technology that VIC uses for its quick launching magic comes with VMfork in vSphere 6, and most of the world is still running vSphere 5.5. Furthermore containers have swept through the industry so fast because most people already have a Linux that can run them, so they haven’t had to wait for the hardware refresh cycles that went along with most ESX/vSphere deployments/upgrades. I’m hopeful that VIC will be released with vSphere 5.5 compatibility; after all quick launch matters a great deal in dev/test environments for quick cycle times, but it’s less of an issue in production, and VIC is very much aimed at being the secure production destination for containers that get developed elsewhere.

Retrospective

VIC hasn’t become as big a part of the enterprise containers conversation as I expected it to be. I think this is down to companies taking a bimodal approach (or preferably pioneers, settlers, town planners [PST]), and so there’s little mixing of existing VM environments with new container environments (which generally tend to be using Kubernetes, quite often under the auspices of Red Hat’s OpenShift).

Original Comments

VK

It is an interesting update. Would like to know if they will extend the support to other Docker ecosystem components/options, like Swarm or kube, networking, volume mapping options.

This is more a question. IMO, a container solution needs an ecosystem of components for its POV, scale and future roadmap. Support for Docker alone is a limited option. What do you think? Cattle needs a helmsman as Kubernetes would say!

CS

Since they’re doing an implementation of the Docker APIs it will work with many other parts of the ecosystem.

It’s explicitly intended to work with Kubernetes, Swarm etc., and if you look at VMware’s broader strategy around cloud native applications (including things wearing the Photon badge) then there’s explicit support for multiple orchestration and scheduling systems.

Things get a little more tricky with aspects like networking. Firstly VMware is trying to position products like NSX as offering better capability than networking based on a host Linux kernel, and secondly any networking that assumes multiple containers sharing the same kernel (which is most Docker networking right now) will run into trouble in an environment where each container has its own kernel.

VK

Thanks.

Anything that works with kube is and should be good (read bias).

NSX is good and rightly complex and solves more than container networking. It addresses the entire enterprise L3 overlay architecture and so on.

but…It is imperative for VMware to share the licensing model easy & upfront, if we are to solution with them mixing with opensource.

Please correct me if I am in isolation saying this. The whole solutioning gets tedious if the licensing info is not available- simple and easy. I call this the Oracle-fuss.

Asking for ‘deal size’, ‘we can work it out’ responses. To circle back to your main post, Project Lightwave from VMware is supposed to address the LDAP requirement of Photon and, in turn, their container solution.

If VIC -vSphere level REST support is published, it will Enroute Agility container support

Filed under: Docker, DXC blogs | 1 Comment

Tags: Docker, VIC, VMware, vSphere

The DXC Blogs – DevOps in GIS

Originally posted internally 12 Feb 2016, this was the first of what became a series of posts where I took an email reply to a broader audience. Global Infrastructure Services (GIS) was the half of CSC that I worked in before the creation of the Global Delivery Organisation (GDO) in DXC Technology:

JP Morgenthal sent out a note asking a bunch of people for their views on DevOps at DXC Technology. Here’s my reply to him:

In my IPexpo presentation some 16 months ago ‘What is DevOps, and why should infrastructure operations care?‘ I started out by saying ‘DevOps is an artefact of design for operations’, and highlighting the need for culture change (and only now do I see the typo in that deck).

With that in mind I’d say that GIS is at the start of the journey. We have a plan to redesign the organisation for operations, and we have the intent to change our culture (the way we do things around here), but activity on the ground is only just moving from planning to execution.

In my first sit down with Steve Hilton his list of priorities was: automation, automation, automation. Breaking this down a little:

1/ Operational Data Mining (ODM), which is the process by which we’re taking the data exhaust from the IT Service Management (and ancillary) systems that we look after and applying big data analysis tools to get insight into experiments that we should run to improve our operations across people, process and tools. It’s early days, but I’d say that so far we’ve been most successful in the people and process areas because we’ve found it hard to get out of our own way when it comes to tool deployment. This is a salutary reminder that DevOps is not (just) about a bunch of tools, because we’ve been able to have real impact without any tool changes (e.g. changing shift patterns to avoid a start of day overload has greatly improved SLA conformance without requiring any additional staff [or any other changes]).

2/ Becoming more responsive – this is the part where the Automation 1.5 programme kicks in, and the tools start to be meaningful. Some of it’s about streamlining how we do ITSM, and I’d note that there’s still a bunch of ITIL happening there. The rest is about ‘every good systems administrator should replace themselves with a script’, and providing the framework to create, curate, share and deploy those scripts. The SLAM.IO team in KH’s group have made great progress on wrapping triage scripts (that use Ansible) so that operators don’t have to yak shave their way through the same command line interactions every time they look at a box. This is a precursor to API driven integration, where the triage gets done before a human even sees the ticket.
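To give a flavour of what ‘wrapping a triage script’ can look like, here’s a minimal sketch. It is not the SLAM.IO implementation. It assumes the `ansible` command line tool is installed, uses the standard `setup` module (which gathers facts about a host) as a stand-in for a real triage playbook, and the host and inventory names are made up:

```python
import shlex
import subprocess


def build_triage_command(host, module="setup", inventory=None):
    """Build an Ansible ad-hoc command for a first look at a host."""
    cmd = ["ansible", host, "-m", module]
    if inventory:
        cmd += ["-i", inventory]
    return cmd


def run_triage(host, dry_run=True, **kwargs):
    """Return the command line (dry run) or the command's output."""
    cmd = build_triage_command(host, **kwargs)
    if dry_run:
        # Show what would be run, without touching the host.
        return shlex.join(cmd)
    result = subprocess.run(cmd, capture_output=True, text=True, check=False)
    return result.stdout


# Hypothetical host and inventory file, for illustration only.
print(run_triage("web01.example.com", inventory="hosts.ini"))
```

The operator (or, later, an API call triggered by the ticket) only has to supply the host; the command line details stay inside the wrapper.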

3/ Becoming more proactive – once we step beyond incidents and problems we’re looking at deliberate change, and making that a push button repeatable operation rather than manually ploughing through runbooks. Agility takes centre stage here from a tooling perspective, but we also have Hanlon to help us deal with bare metal (before it becomes a cloud that Agility can work with) along with the output from DB’s Automation 2.0 team (which is in the process of being partly open sourced).

Upskilling our organisation will be a key part of the culture change, which is why I’ve been writing about the ‘Four Pillars of Modern Infrastructure‘ and encouraging people to learn Git/GitHub/Ansible/Docker and AWS. I’m now working with CK and GS on a DevOps bootcamp workshop that we’ll start delivering to the PODs next month. This follows on from work by GR, DE and others before I arrived.

We also need to be more data driven. ODM is a part of that, but more broadly I’m challenging people to ask ‘What would Google do?‘.

It’s also important to recognise that the shift to infrastructure as code doesn’t end with the code. Our code must be supported by great documentation, samples and examples to be truly effective, which is why I’ve been very clear about what I value.

This is of course a very high level view. You’re going to find some great examples of agile development and CI in the development centric parts of GIS, and I’ll leave it to the individual leaders in those areas to explain in more detail.

Retrospective

This was the first time I used ‘Design for Operations’ in the context of a relatively wide internal audience, and I’m super pleased that it’s since become a common part of how we talk internally and externally about what we do and how we’re changing. The post came shortly before the publication of The DevOps Handbook, so although I was familiar with the ‘3 DevOps ways’ of ‘Flow, Feedback and Continuous Learning by Experimentation’ from The Phoenix Project I didn’t call them out specifically. As I was later setting up the Operations Engineering (OE) group I took an approach of ‘All in on ODM’, making continuous learning by experimentation applied to operation constraints the tip of our change spear; and I generally think that improvements to flow and feedback come naturally from that. Automation 1.5 was a Death Star that we stopped building, and Automation 2.0 became part of the overall OE direction. 15 months later, and we now have a delivery organisation that’s no longer divided into Dev and Ops, so with respect to Conway’s Law things are now pointed in the right direction. I’ve also found some awesome (DevOps) engineers in our Newcastle Digital Transformation Centre who have a can do attitude, and the skills to make stuff happen.

Original Comments:

MN

This is exactly the type of aspirational view we need for the digital enterprise.

GIS solutions have to provide the foundation for these capabilities in order to elevate the value chain.

Many parts of the traditional role of GIS (equipment integration) are approaching utility if not already there. If you look at AWS alone, they are attacking some very mature and integrated business models with their offerings, following plans that look very much like this evolution.

Very well put Chris.

MH

I blogged about Automation a while back: ‘Manual tasks of today should be the Automated tasks of tomorrow’ (link lost to the demise of C3). A couple of interesting takes on it.

Filed under: DXC blogs | 2 Comments

Tags: DevOps

The DXC Blogs – Unikernels

Originally published internally 26 Jan 2016:

Last week Docker Inc acquired Cambridge based Unikernel Systems Ltd, which has got a lot of people asking ‘what’s a unikernel?’, a question that’s well covered in the linked piece. Going back a few years I covered the launch of Mirage OS, which is the basis for what Unikernel Systems do – they’ve since interfaced it with the Docker API so that unikernels could be managed as if they’re containers.

The acquisition caused Joyent’s CTO Bryan Cantrill to write that Unikernels are unfit for production, where he restates some points that he made when I interviewed him at QCon SF in November. Bryan makes a good point about debugging, but I think there are cases where Unikernels don’t really need to be debugged (and Bryan pretty much made the point when talking about ‘correct software’ when we spoke), which is the essence of ‘Refereeing the Unikernels Slamdown‘.

It’s worth noting that DXC Technology has a (very specialised) dog in this fight with our open source Hanlon project. It’s not actually a unikernel (as it works with a regular Linux kernel), but it might be argued that it’s on the unikernel spectrum (and for further exploration of that space take a look at some of the presentations from OperatingSystems.io on topics like rump kernels).

Retrospective

Unikernels haven’t taken over the world, but they’re usefully doing the ‘correct software’ job in things like Docker for Mac. The recent release of LinuxKit also shows that Docker Inc is investing in other places along the ‘unikernel spectrum’ that I referred to, making it easy to build stripped down containers that sit on top of Linux, but aren’t strictly unikernels.

Original Comments

MH

There are a number of slides and videos around Unikernels from Docker at SCALE-14 (Linux Meetup), just posted at Recap: Docker at SCALE 14x | Docker Blog

TM

In my view, Chris, the Hanlon-Microkernel project is an example of a Docker container that we deploy (and run) dynamically during the process of iPXE-booting a perfectly normal (albeit small) Linux kernel. To provide a bit more detail, we use the RancherOS Linux distribution (a Docker-capable Linux kernel that has a total size, for both the kernel image and its RAM disk, of approximately 22MB) as our iPXE-boot kernel and dynamically inject the Hanlon-Microkernel Docker container image into that Linux kernel at boot using a cloud-config that is supplied by the Hanlon server.

A Unikernel (from my understanding), is really just a Linux kernel that has been stripped down to the minimal packages and services that are necessary to run a single application. In my mind, that is quite different from the approach that is taken by RancherOS (or TinyCore Linux) where a “regular” Linux distribution is compressed to boot quickly (and often run in memory). In those operating systems you typically have all of the same processes available to you (including standard Linux commands and even services like SSH), giving you much greater access to the system if you need to debug something that has gone wrong in that system. I guess you could make the argument that it’s in the “unikernel spectrum”, but I tend to think of the approaches taken by Unikernels as being quite different from the approaches we’ve taken for years now to make small kernels (which are typically intended to run multiple services, not just one service). Just my 0.02 (in your favorite local currency)…

MN

Cantrill was belaboring the use case with points that would fit in the early days of VMware. It evolved though. As will Unikernel.

Thing is, it’s in the toolbag now.

NB

‘Unikernels will send us back to the DOS era’ – DTrace guru Bryan Cantrill speaks out • The Register

MN

I enjoyed the 4th paragraph quotes.

CS

Some great stuff from Brendan Gregg on Unikernel Profiling

Filed under: Docker, DXC blogs | Leave a Comment

Tags: Unikernels

Originally posted internally 12 Jan 2016:

What Would Google Do? It’s a good generic question when considering any problem in the IT space.

Often the answer is pretty obvious, where Google’s already doing something (and better still if it’s published the hows, whats and whys). Other times there’s a shape of an answer, where Google can be seen to be doing something, but it’s less clear how they’re doing it.

There is also a generic answer. Google is a data driven organisation (arguably often to the point of damaging itself and its users), so the answer to all questions is driven by data. If there’s no data then the first job is to get the data – making the mechanism to source the data if need be.

The alternative to WWGD is the HiPPO – the Highest Paid Person’s Opinion. There are a few problems with HiPPOs, which is why they’re best consigned to 60’s fictional characters like Don Draper rather than the decision making processes of modern organisations:

- HiPPOs are expensive

- HiPPOs are a bottleneck to decision making

- HiPPOs are subject to all kinds of human frailties that might misalign their opinions with the realities of the world around them, not least the ‘tyranny of expertise’

Google have a saying for dealing with this, ‘don’t bring an opinion to a data fight’.

Retrospective

As we built out operational data mining (ODM) and built the operations engineering team (OE, now OE&E) to support that there was a palpable shift in the culture of the organisation from being opinion driven to data driven. This has been empowering for front line staff, and generally made DXC Technology less political and hence a nicer place to work. As we set about building OE there were two aspects of Google practice that we borrowed from heavily. The first was Site Reliability Engineering (SRE) and the second was Google’s ‘data bazaar’ Goods.

Original comments

NB:

HiPPO is the road to irrelevance.

‘The best ideas win, independent of titles: In a social business, ideas and information flow horizontally, vertically, from the bottom and from the top; throughout the business. Ideas are like sounds, and they should be heard through the seams of the social fabric. In the absence of sound, ideas die. The most damaging syndrome is the HIPPO (highest paid person’s opinion) syndrome, whereby all the decisions are ultimately dictated by the biggest title. The best ideas must win. That’s the biggest benefit of being social.’

Recognizing Good Ideas (link broken by demise of C3, referenced HBR’s ‘Innovation Isn’t an Idea Problem‘)

Re-examine how you tackle tough problems, and make important decisions. “Decision Making By Hippo” that is, following the lead of the most highly paid person simply because they are in that position, is a very bad idea. Instead, the intelligence and capability of all the organization members can, and should, be tapped. – Andrew McAfee

If only CSC knew what CSC knows (link broken by demise of C3, referenced HP’s former CEO Lew Platt, “If only HP knew what HP knows, we would be three times more productive.”)

LEF paper ‘Energizing and Engaging Employees – Social media as a source of management innovation‘ (page 35)

Filed under: DXC blogs | 1 Comment

Tags: data science, google, HiPPO

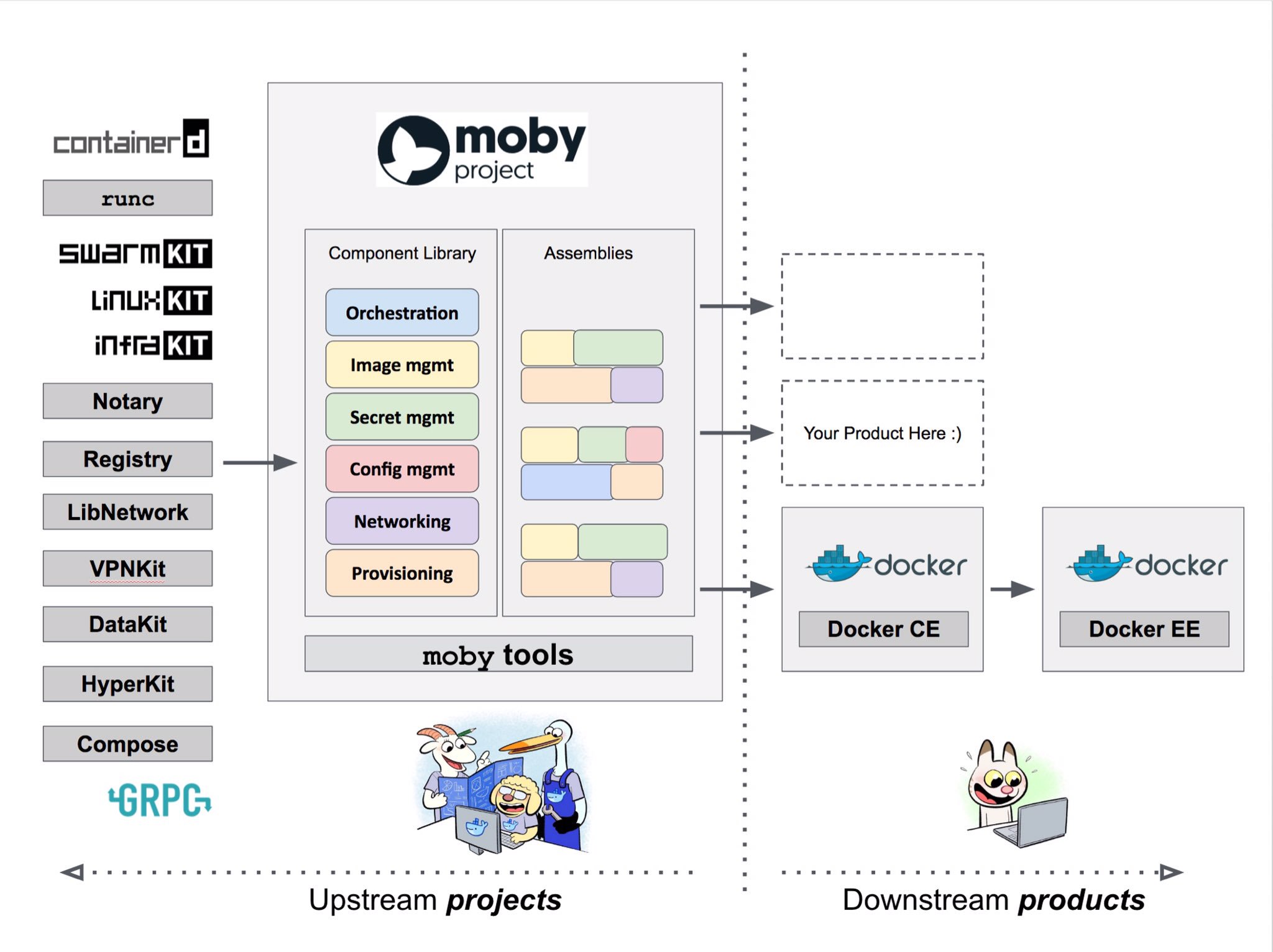

At the recent DockerCon event in Austin, Docker Inc announced two significant open source projects, Moby and LinuxKit. Moby essentially marks the split of Docker the open source project from Docker Inc the company, with the docker/docker GitHub repo moved to moby/moby. LinuxKit provides a set of tools to build ‘custom Linux subsystems that only include exactly the components the runtime platform requires’.

Continue reading the full story at InfoQ.

Filed under: Docker, InfoQ news | Leave a Comment

Tags: Docker, LinuxKit, Moby, open source, Unikernels

Originally posted internally 6 Jan 2016:

I’d meant to post this before the Christmas break as a guide to things to tinker with over the break, but then I hit the point where pretty much everybody seemed to already be on leave, and it was clearly too late….

So Happy New Year, if you’re not already on top of these things then here’s a taste of what’s coming in 2016.

1) Infrastructure as code

‘Infrastructure as code’ means that infrastructure people now need to worry about source code in the same way that application developers have.

The good news is that distributed source control and collaboration is now pretty much a solved problem[1]. History might well remember Linus Torvalds more as the creator of the Git source control system than of the ‘just a hobby’ Linux kernel project it was built to support.

GitHub

Git is built around the idea of ‘local’ and ‘remote’ repositories, where local is generally a development environment. GitHub provides the remote part where source can be pulled from to avoid starting from scratch, and pushed to once changes are made.

There are alternatives to GitHub out there such as BitBucket and GitLab (there are even alternatives to Git such as Mercurial and Perforce), but we went with GitHub at DXC Technology in order to have a consistent feature set and user experience with the most popular open source projects (that are hosted on GitHub). DXC Technology has an organisation on public GitHub where we can collaborate with customers and partners, and also an enterprise GitHub (for internal ‘inner source’ code).

Fork and Pull

Like the source control systems that came before it Git supports the idea of creating code branches for developing a given feature (or fixing a given bug), with merging back into the ‘trunk’ once a branch has served its purpose. Where things get a little different, and much more powerful, is the concept of ‘fork and pull’.

A fork is a copy of a given project so that somebody can make changes without having to ask permission of the original creator. In the past forks have often been considered to be a bad thing as they lead to potentially exploding complexity as a project splinters away from its original point. This led to a point of view that open source projects that forked had governance issues that might be detrimental to the project (and its users).

Pull requests are where somebody who’s made a fork asks for their change to be incorporated back into the main project. If a fork is ‘beg forgiveness’ then a pull is ‘ask permission’. Using fork and pull together means that a project can very quickly explore the space around it, and bring back what’s of value into the main body; and it’s a process that now lies at the heart of nearly every successful open source project.
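For anybody who hasn’t been through the loop yet, the Git side of fork and pull looks roughly like the sketch below. The helper function, URLs, directory and branch names are all hypothetical; the `git` subcommands themselves are standard. The fork is created on GitHub first, and the pull request is opened on GitHub at the end, so neither appears as a `git` command:

```python
def fork_and_pull_commands(fork_url, upstream_url, workdir, branch, message):
    """List the git commands for a typical fork and pull contribution."""
    in_repo = ["git", "-C", workdir]  # run the command inside the clone
    return [
        # Local copy of *your* fork (the remote is named 'origin').
        ["git", "clone", fork_url, workdir],
        # Keep a handle on the original project so its changes can be fetched.
        in_repo + ["remote", "add", "upstream", upstream_url],
        # Do the work on a branch rather than on the main line.
        in_repo + ["checkout", "-b", branch],
        # ... edit files here, then record the change.
        in_repo + ["commit", "-a", "-m", message],
        # Publish the branch to the fork, ready for a pull request.
        in_repo + ["push", "origin", branch],
    ]


commands = fork_and_pull_commands(
    fork_url="https://github.com/your-user/project.git",      # hypothetical
    upstream_url="https://github.com/original/project.git",   # hypothetical
    workdir="project",
    branch="fix-readme",
    message="Clarify install steps in README",
)
for command in commands:
    print(" ".join(command))
```

Printing the commands rather than running them keeps the sketch safe to try anywhere; each list can be passed to `subprocess.run` once the URLs point at a real fork.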

Some enterprises have struggled with fork and pull, as it doesn’t fit into traditional governance processes – it is after all a governance process (or the beginnings of one) in its own right. The good news for infrastructure people is that infrastructure as code is generally a new enough thing that there’s no need for demolition work before erecting the new building.

Learn Git/GitHub

Pro Git Book (it’s worth paying particular attention to the chapter on branching and merging, a topic that’s also well covered at learn Git branching)

2) Configuration Management

The whole point of infrastructure as code is to reach a desired configuration for the infrastructure elements in an automated, programmatic, and repeatable fashion to support infrastructure at scale. That’s normally achieved using some sort of configuration management tool, with the code being what drives it. It’s worth noting that the use of such tools moves administration away from people logging into individual machines and carrying out manual tasks.

There are essentially two approaches to configuration management:

- Imperative – ‘do this, then do that, then do this other thing’. This is what scripts have been doing since the dawn of operating systems, and arguably the mantra of ‘every good systems administrator will replace themselves with a script’ means that we’ve always at least had intent to have infrastructure as code. The problem with imperative systems is that they can be very brittle. All it takes is one unexpected change and the script doesn’t do what’s expected, and potentially everything breaks.

- Declarative – ‘achieve this outcome’. Modern configuration management tools at least aspire to being declarative, which hopefully makes them less fragile than older script based systems[2].
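The difference is easiest to see when the same change gets applied twice. The sketch below is a toy rather than how any particular tool works: a list of strings stands in for a config file, and the two function names are made up for the illustration.

```python
def append_line_imperative(lines, line):
    """Imperative: 'add this line'. Running it twice adds the line twice."""
    return lines + [line]


def ensure_line_declarative(lines, line):
    """Declarative: 'this line should be present'. Safe to run repeatedly."""
    return lines if line in lines else lines + [line]


# A stand-in for an existing config file (illustrative sshd-style settings).
config = ["PermitRootLogin no"]
setting = "PasswordAuthentication no"

imperative = append_line_imperative(append_line_imperative(config, setting), setting)
declarative = ensure_line_declarative(ensure_line_declarative(config, setting), setting)

print(len(imperative))   # 3 - the setting was added twice
print(len(declarative))  # 2 - the second run changed nothing
```

The declarative version checks the current state before acting, so it can be re-run safely after a partial failure or an unexpected manual change. That property (idempotence) is a large part of what makes the declarative approach less brittle.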

Ansible

There are lots of popular configuration management tools in the marketplace such as Puppet, Chef, Salt and even the original CFEngine. At DXC Technology we’ve chosen Ansible (and Ansible Tower) largely because of its SSH based agentless operating model, which eliminates the need to install stuff onto things just to begin the configuration process, and also allows it to be used with infrastructure such as networking equipment where it might be impossible to install an agent for a config management tool.

Learn Ansible

3) Containers

The use of virtual machines (VMs) to provide resource isolation and workload management has been popular now for well over a decade, and has found its way into the most conservative late adopters. Containers achieve resource isolation and workload management within an operating system rather than by using an additional hypervisor. This has multiple advantages, including quick startup time (fractions of a second) and low memory overhead (due to sharing a common kernel and libraries). The containers approach has been popular in various niches ranging from small virtual private server (VPS) hosting companies to how Google manages the million plus servers spread across its global data centres. Containers are now escaping from those niches, primarily because of the Docker project.

Docker

Docker is a set of management tools for containers that brings the mantra of ‘build, ship and run’:

- Build – a container from a ‘bill of materials’ (known as a Dockerfile) that describes what goes inside the container in a simple script.

- Ship – a container (or just the Dockerfile that describes it) anywhere that you can copy a file.

- Run – on any environment that can support containers, ranging from a laptop to a cloud data centre.

The ‘run’ part is in many ways pretty much ubiquitous already – any Linux machine or virtual machine with a relatively new kernel can run containers, and soon the same will apply to Windows. This makes ‘build’ and ‘ship’ the more interesting part of the story, and DockerHub provides a central (GitHub like) place where things can be built and shipped from. To complement the public DockerHub DXC Technology will be deploying its own Docker Trusted Registry (which will be equivalent to our Enterprise GitHub).
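As a rough sketch of how build, ship and run fit together, the snippet below renders a tiny Dockerfile and lists the three `docker` commands that go with it. The helper functions, the `hello.py` script and the `example/hello:1.0` image name are all hypothetical; `python:3-slim` is a public base image on DockerHub, and `docker build`, `docker push` and `docker run` are the standard commands:

```python
def render_dockerfile(base_image, app_file):
    """Render a minimal 'bill of materials' for a single-script container."""
    return "\n".join([
        f"FROM {base_image}",
        f"COPY {app_file} /app/{app_file}",
        f'CMD ["python", "/app/{app_file}"]',
    ]) + "\n"


def build_ship_run(image, context="."):
    """List the docker commands for the three steps."""
    return [
        ["docker", "build", "-t", image, context],  # build from the Dockerfile
        ["docker", "push", image],                  # ship to a registry
        ["docker", "run", "--rm", image],           # run wherever containers run
    ]


print(render_dockerfile("python:3-slim", "hello.py"))
for command in build_ship_run("example/hello:1.0"):
    print(" ".join(command))
```

The `push` step assumes you’re logged in to a registry that will accept the image name, whether that’s DockerHub or a private registry.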

Learn Docker

I already wrote about how Docker gives you installation superpowers, and put out the plea to Install Docker.

4) Cloud Services

There are all kinds of definitions of ‘cloud’ out there[3], and our industry has suffered from a great deal of ‘cloudwashing’.

The modern pillar is the collection of services that expose application programming interfaces (APIs) that can be used to manage the life cycle and configuration of resources (on demand). The API allows the human interface, whether that’s a command line interface (CLI) or web interface or some other bizarre tool, to be supplanted; though of course once an API is in place it’s easy to build a CLI, web interface (or even something bizarre) in front of it. It’s possible to automate without APIs (by screenscraping older interfaces), but it’s much easier to automate once APIs are in place.
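To illustrate the ‘API first, CLI on top’ point, here’s a toy sketch. `ResourceAPI` is a made-up in-memory stand-in and not any real provider’s API, and `cloudctl` is an invented tool name. The point is only that the CLI is a thin layer that parses arguments and calls the API:

```python
import argparse
import itertools


class ResourceAPI:
    """Hypothetical in-memory stand-in for a cloud provider's API."""

    def __init__(self):
        self._ids = itertools.count(1)
        self._resources = {}

    def create(self, kind):
        rid = f"{kind}-{next(self._ids)}"
        self._resources[rid] = {"kind": kind, "state": "running"}
        return rid

    def list(self):
        return sorted(self._resources)

    def delete(self, rid):
        return self._resources.pop(rid, None) is not None


def cli(api, argv):
    """A thin CLI layered on the API: parse arguments, then call the API."""
    parser = argparse.ArgumentParser(prog="cloudctl")
    sub = parser.add_subparsers(dest="action", required=True)
    sub.add_parser("create").add_argument("kind")
    sub.add_parser("list")
    sub.add_parser("delete").add_argument("rid")
    args = parser.parse_args(argv)
    if args.action == "create":
        return api.create(args.kind)
    if args.action == "delete":
        return api.delete(args.rid)
    return api.list()


api = ResourceAPI()
vm_id = cli(api, ["create", "vm"])   # the same call a script could make directly
print(vm_id, cli(api, ["list"]))
```

Automation can skip the CLI and call `api.create()` and friends directly, which is exactly what tools like configuration management systems do against real cloud APIs.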

Infrastructure driven by APIs, better known as Infrastructure as a Service (IaaS), was misunderstood in its early days as a cost play (despite never being *that* cheap). It’s now pretty well understood that the main point is time to market (for whatever it is that depends on the infrastructure) rather than cost per se; though many organisations have the numbers to show that they can get cost savings too.

AWS

Amazon Web Services (AWS) is the elephant in the cloud room. It’s 15x larger than its nearest competitor and 5x larger than the rest of the providers put together. Modern management techniques such as ‘two pizza’ have allowed Amazon to release new features at an exponential rate, which has been combined with huge ($Bn/qtr) infrastructure investment to force every other provider out of business or into an expensive game of catch up[4].

Learn AWS

DXC Technology is part of the Amazon Partner Network (APN), so there’s a structured programme for training and certification. Better still, there are cash bonuses on offer for getting professional certifications.

Where do I start to get Amazon Web Services (AWS) Training (DXC Technology staff only)

Wrapping Up

These four pillars are tied together by the use of code and the APIs that they drive. I’ll write more on how these come together with techniques like continuous integration (CI) and continuous deployment (CD), and how this fits into the concept of ‘DevOps’.

Notes

[1] The one remaining caveat here is that GitHub has (re)introduced centralised management to a distributed system, and with it a single point of failure. GitHub (and by extension its users) has been the victim of numerous distributed denial of service (DDoS) attacks over the past few years (often attributed to Chinese action against projects that allow circumvention of the ‘great firewall’). This is one reason for having a separate enterprise GitHub installation (as we have at CSC). There are some other answers – check out distributed hash tables (DHT) and interplanetary file system (IPFS) for a glimpse of how things will once again become distributed.

[2] It’s worth noting that declarative systems should easily deal with idempotence, which means that if a change has already been made then it shouldn’t be duplicated.

[3] Though NIST did everybody a favour by publishing a set of standard definitions.

[4] As we see Rackspace and CenturyLink fall by the wayside it leaves Microsoft, Google and EMC pretty much alone in terms of having the resources to compete. Google still outspends Amazon on infrastructure, which is entirely fungible between Google services and Google Cloud Services – so it’s able to do interesting things with scale and pricing. Microsoft has done a great job of upselling its existing customers into its cloud offerings, so for many it’s the cloud they already bought and paid for. It should be noted that both Apple and Facebook operate hyperscale infrastructures along similar lines to the IaaS providers, they just haven’t chosen to go into that business (yet).
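To illustrate the idempotence mentioned in note [2], here is a minimal sketch of a declarative ‘ensure’ operation. The helper `ensure_line` is hypothetical and not taken from any particular configuration management tool; the two settings are ordinary sshd_config options used only as sample data.

```python
def ensure_line(lines, wanted):
    """Declare that `wanted` must be present; return (new_lines, changed)."""
    if wanted in lines:
        # Desired state already holds, so nothing is duplicated.
        return list(lines), False
    return list(lines) + [wanted], True


config = ["PermitRootLogin no"]
config, changed_first = ensure_line(config, "PasswordAuthentication no")
config, changed_second = ensure_line(config, "PasswordAuthentication no")
print(config, changed_first, changed_second)
```

The first call makes the change and reports it; the second finds the desired state already in place and leaves the configuration alone, however many times it is run.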

Retrospective

This post very much became my manifesto for my time in Global Infrastructure Services. I presented talks based on this at TechCom 2016, and it also formed the basis of the ‘infrastructure as code boot camp’ workshops in the global delivery centres (which have now become a set of online Katacoda scenarios).

We ended up not going with Ansible Tower, for reasons I’m not going to get into here and now – that’s a story for another day.

The incentive for doing AWS Certified Solutions Architect training has now been withdrawn, but it helped propel us to having the second largest community of certified people.


The DXC Blogs – Install Docker

Originally published internally 24 Dec 2015:

Install Docker, because it gives you installation superpowers for many other things.

Install Docker, so that you don’t have to install Ansible, or Python, or Golang, or Graylog, or pretty much whatever else you were needing to install.

Install Docker, so that the next Yak you shave will be a pre-shaved Yak for somebody else.

Retrospective

This internal post was mostly just a signpost to the public post I wrote that day. We subsequently used Docker as the basis for the ‘infrastructure as code boot camp’ and spent some of the workshops explaining how much time it saved.


Originally published internally 15 Dec 2015:

I recently came to a realisation that every hire I’ve made has been for an aptitude to change rather than a given set of skills. This makes my most important interview question ‘how do you keep up to date with tech?’.

Tech is changing all the time. I constantly hear statements that the rate of change is accelerating – something that I don’t happen to believe, but even with a steady rate of change like Moore’s law there’s a whole lot to keep track of. The fire hose of tech news can be hard to manage, so it’s important to have good filters. My friend JP Rangaswami often quotes Clay Shirky’s ‘filter failure’ when talking about the danger of information overload.

My coarse filters are Feedly [1] and Twitter; the much-reduced output from those is the stuff that I find interesting enough to add to Pinboard in case I want to go back to it later. Even that’s probably too much – I bookmark a ton of stuff on politics, religion, law and order and all manner of other subjects that have nothing to do with tech. As a new iteration of my earlier experiment into directed social bookmarking I’ve created a feed that’s being pulled into the Technical Design Council (TDC) Slack team, simply by tagging things tdc. Pinboard very usefully allows me to turn that tag into an RSS feed for consumption by your favourite aggregator (which brings us back to Feedly if you don’t already use one, and the sad demise of Google Reader).

[1] I could probably share my Feedly feed list if anybody is interested – though beware that it’s large and very specifically tuned to things I’m interested in.

Retrospective

I keep on tagging things with ‘tdc’ and occasionally I bump into somebody who’s following along and finding some use. This post also unearthed a bunch of Feedly fans.

Original comments

I’m not going to post the original comments, as they’re mostly self-referential to the people who made them, and hence impossible to pseudonymise.

I did, however, discover some Feedly sharing options myself, and posted this great ‘2 Kinds of People’ cartoon.


This was originally published internally on 4 Dec 2015, my 4th day at CSC:

My first few days have made me think carefully about where I see value.

This is what’s emerging:

- I value code with documentation, samples and examples more than just code, because code with documentation, samples and examples gives us a repeatable offering that we can take to market.

- I value code more than slides, because code gives us a prototype that we can take to a brave customer who’s willing to share risk.

- I value slides more than ideas, because slides give us a way to share concepts and engage with customers about what might be.

- I value ideas on their own least of all, which is not to say that ideas have no value. Ideas are what drive us forward, and DXC Technology is full of brilliant people with great ideas – I just want to see the best ones evolve as quickly as possible into code with documentation, samples and examples.

BTW code, and its documentation, samples and examples, lives in a repository such as GitHub – if you’re not already using Git (and GitHub) then learn about it here – Learn Git | Codecademy

Retrospective

After just a few days it was already clear to me that far too much ‘solutioning’ was being done in PowerPoint rather than tools that could touch a production environment. Those slides were worthless compared to code. Not completely worthless, but definitely worth less. Of course code on its own isn’t great either, as successful projects need great documentation, samples and examples; a theme that I return to later.

Original comments

CN:

+1 Chris :-)

There was a great session from EMC and Puppet at VMworld which said think of GitHub/Version-Controlled code as “Live Documentation”.

The presenter made everyone promise never to log in to a server again before we left!

Whilst it’s not possible to stick to that when you have legacy customers on systems that can’t, yet, accept any change to them being pushed out centrally, it’s a great goal to have in the back of your mind when doing anything. “If this change works it needs to go back into the build code/docs.”
