Big Data – a little analysis

This post was inspired by a conversation I had with a VC friend a few weeks back, just as he was about to head out to the Structure conference that would be covering this topic.

Big Data seems to be one of the huge industry buzz phrases at the moment. From the marketing it would seem like any problem can be solved simply by having a bigger pile of data and some tools to do stuff with it. I think that’s manifestly untrue – here’s why…

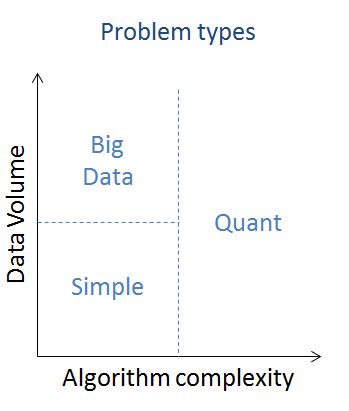

If we look at data/analysis problems there are essentially three types:

Simple problems

Low data volume, simple algorithm(s)

This is the stuff that people have been using computers for since the advent of the PC era (and in some cases before that). It’s the type of stuff that can be dealt with using a spreadsheet or a small relational database. Nothing new or interesting to see here…

Of course small databases grow into large databases, especially when individual or department scale problems flow into enterprise problems; but that doesn’t change the inherent simplicity. If the stuff that once occupied a desktop spreadsheet or database can be rammed into a giant SQL database then by and large the same algorithms and analysis can be made to work. This is why we have big iron, and when that runs out of steam we have the more recent move to scale out architectures.

Quant problems

Any data volume, complex algorithm(s)

These are the problems where you need somebody that understands algorithms – a quantitative analyst (or quant for short). I spent some time before writing this wondering if there was any distinction between ‘small data’ quant problems and ‘big data’ quant problems, but I’m pretty sure there isn’t. In my experience quants will grab as much data (and as many machines to process it) as they can lay their hands on. The trick in practice is achieving the right balance between computational tractability and time consuming optimisation in order to optimise the systemic costs[1].

Solving quant problems is an expensive business both in computation and brain power, so it tends to be confined to areas where the pay-off justifies the cost. Financial services is an obvious example, but there are others – reservoir simulation in the energy industry, computational fluid dynamics in aerospace, protein folding and DNA sequencing in pharmaceuticals and even race strategy optimisation in Formula 1.

Big Data problems

Large data volume, simple algorithm(s)

There are probably two sub types here:

- Inherent big data problems – some activities simply throw off huge quantities of data. A good example is security monitoring where devices like firewalls and intrusion detection sensors create voluminous logs for analysis. Here the analyst has no choice over data volume, and must simply find a means to bring appropriate algorithms to bear. The incursion of IT into more areas of modern life is naturally creating more instances of this sub type. As more things get tagged and scanned and leave a data trail behind them we get bigger heaps of data that might hold some value.

- Big data rather than complex algorithm. There are cases when the overall performance of a system can be improved by using more data rather than a more complex algorithm. Google are perhaps the masters of this, and their work on machine language translation illustrates the point beautifully.

So where’s the gap between the marketing hype and reality?

If Roger Needham were alive today he might say:

Whoever thinks his problem is solved by big data, doesn’t understand his problem and doesn’t understand big data[2]

The point is that Google’s engineers are able to make an informed decision between a complex algorithm and using more data. They understand the problem, they understand algorithms and they have access to massive quantities of data.

Many businesses are presently being told that all they need to gain stunning insight that will help them whip their competition is a shiny big data tool. But there can be no value without understanding, and a tool on its own doesn’t deliver that (and it would be foolish to believe that a consultancy engagement to implement a tool helps matters much).

What is good about ‘big data’?

This post isn’t intended to be a dig at the big data concept, or the tools used to manage it. I’m simply pointing out that it’s not a panacea. Some problems need big data, and others don’t. Figuring out the nature of the problem is the first step. We might call that analysis, or we might call that ‘data science’ – perhaps the trick is figuring out where the knowledge border lies between the two.

What’s great is that we now have a ton of (mostly open source) tools that can help us manage big data problems when we find them. Hadoop, Hive, HBase and Cassandra are just some examples from the Apache stable; there are plenty more.

What can be bad about big data?

Many organisations now have in place automated systems based on simple algorithms that process vast data sets – credit card fraud detection being one good example. This has consequences for process visibility and ignoring specific data points that can ruin user experience and customer relationships. I’ll take this up further in a subsequent post, but I’m sure we’ve all at some stage been a victim of the ‘computer says no’ problem where nobody can explain why the computer said no, and it’s obviously a bad call given a common sense analysis.

Conclusion

For me big data is about a new generation of tools that allow us to work more effectively with large data sets. This is great for people who have inherent (and obvious) big data problems. For cases when it’s less obvious there’s a need for some analysis work to understand whether analysis of a larger data set might deliver more value versus using more complex algorithms.

[1] I’ve come across many IT people who only look at the costs of machines and the data centres they sit within. Machines in small numbers are cheap, but lots of them become expensive. Quants (even in small numbers) are always expensive, so there are many situations where the economic optimum is achieved by using more machines and fewer quants.

A good recent example of this is the news that Netflix never implemented the winning algorithm for its $1m challenge.

[2] Original: ‘Whoever thinks his problem is solved by encryption, doesn’t understand his problem and doesn’t understand encryption’

Filed under: technology | 2 Comments

Tags: algorithm, analytics, big data, complexity, quant

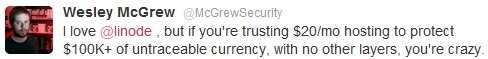

Firstly let me say that I like Linode a lot. They had a promotion running a little while ago which got me going with my first virtual private server (VPS), and I only moved off to somewhere from lowendbox after the promotion because my needs are small (and I wanted to match my spend accordingly)[1]. This tweet probably sums things up perfectly:

I first heard about the incident via Hacker News, where somebody had posted a blog post from one of the victims. The comments on both sites make for some interesting reading about security and liability. Shortly afterwards Linode posted a statement. The true details of what went down, and whether it was an inside job as some speculate, or ‘hackers’, remain to be seen. Clearly whoever perpetrated the attack knew exactly what they were after and went straight for it – what law enforcement would normally call a ‘professional job’.

So… what can we learn from this? Here are some of my initial thoughts:

Physical access === game over

You have to be able to trust your service provider with your data. If that data has a cash equivalent of thousands of dollars then you have to be able to trust them a lot. There’s a special sort of service provider that we normally use for this – one that’s heavily regulated and where the customer (normally) gets reimbursed if mistakes are made – we call these banks. Of course regular banks haven’t got into servicing novel cryptocurrencies like bitcoin. Ian Grigg does a pretty good job of explaining why (and indeed why Bitcoin will slip into a sewer of criminality where this incident is but one example).

Bottom line – if you can’t trust the people that have physical access, then don’t do it.

Admin access === game over

If a service provider provides out of band management tools that give the equivalent of physical access then you also need to trust whoever has access to those too. Whilst it’s not clear yet who perpetrated the attack, it is pretty clear that it was done by subverting the management tools. The risk is particularly acute when the management tool has direct control over security functionality, such as the ability to reset the root password.

In this case mileage may vary. Some VPS admin tools provide the ability to reset passwords, whilst others don’t.

Bottom line: Management tools might be convenient for service providers and their users, but can present a massive security back door for any measures taken on the machine itself.

Passwords === game over

If I look in the logs of any of my VPSs then I see a constant flood of password guessing attacks. This is why I either turn off passwords altogether with passwd -l account or disable password login in the SSH daemon.

It seems that at least some of the victims had chosen long, hard to guess (or brute force) passwords, which can raise the bar on how long an attack takes. Few people are that disciplined though. Passwords (on their own[2]) are evil, and should be avoided at all costs.

Of course SSH keys aren’t a panacea. Private keys need to be looked after very carefully.

Bottom line: Disable passwords, use SSH keys, look after the private key.

Conclusion

It seems to have become best practice in the IaaS business to build machines that only work with SSH keys, and where the management console (and the API under it) doesn’t have security features that can be used to subvert anything done to secure the machine[3]. These two steps go a long way towards ensuring that security in a VM/VPS has a sound foundation.

There is nothing that can be done about physical access (at least until homomorphic encryption becomes a reality) – so if you can’t trust your service provider (or at least get contractual recompense for any incident) then think again.

[1] Apart from some experimentation I pretty much never actually do much with any VPS that I run. They’re just used as end points for SSH and OpenVPN tunnels for when I want to swerve around some web filters (or keep my traffic from the prying eyes of those running WiFi).

[2] When I was running a VPS on Linode I took the precaution of adding two factor authentication (2FA) using Google Authenticator.

[3] Most management functions have serious implications for integrity and availability, but if they can’t hurt confidentiality then that’s a good start.

Filed under: security | 4 Comments

Tags: admin, Bitcoin, console, iaas, Linode, management, password, security, SSH, VM, VPS

The suckage of hotel Internet

I’ve been on the road now for a week and a half, which has brought me into contact with some of the slowest, most expensive Internet access I’ve suffered in some time. I’m used to mobile Internet being expensive and slow, but this has been even worse.

My problems started in an airport lounge in Singapore. I’d forgotten to check before leaving home that my replacement iPhone[1] had my audiobooks on it, and it turned out that they’d been missed from my iTunes sync. I have the Audible app, so I needed to download a book segment of around 90MB. This would take a few minutes on my home broadband (which isn’t stunningly fast at around 4.5Mb/s). Unfortunately the lounge connection was much slower than that, and it kept dropping, and when it dropped the download wouldn’t always resume – more time and bandwidth wasted as I started over. I didn’t get to listen to my audiobook on the flight – that’s OK, I managed to get some sleep.

When I checked into my hotel I thought my troubles would be over, and indeed I was able to download a few audiobooks. I woke early the following morning (a little before 4am) and thought I’d catch up with Google Reader and Twitter. Things were painfully slow so I ran a quick Speedtest:

Wow! I guess a Skype call home would be out of the question then. This is on an Internet service billed at AUD25/day (plus applicable taxes), and at that time in the morning I can hardly believe that other hotel residents were swamping their pipe. I’d also note that matters didn’t improve over the following hours/days. I complained at checkout, and thankfully the Internet charges were dropped from my bill.

For the purpose of comparison I’d note that AUD30 had got me a PAYG SIM that included 500MB of data on an HSPA network (and 500 voice minutes and unlimited SMS)[2]. So… looks like hotels are up to the same game they play with phones, offering a price point that’s even worse than mobile providers.

Another day another hotel. This time the performance isn’t too bad:

Unfortunately there’s a catch… AUD24/day only gets 100MB. After that you can pay AUD0.10/MB (up to a total daily cap of 1000MB) or switch to a throttled service that performs like this[3]:

Wow again! That’s even worse than the first speed test I did.

For a further comparison I tested the WiFi at the meeting I was attending (no charge, no caps):

That looks to me like a decent ADSL2 service. I’m not too familiar with local broadband pricing, but I expect that costs the same for a month as my hotel broadband is costing for a day or two.

It’s not news that hotels ream their customers for extras like this. But the cost, quality and limitations are pretty shocking. AUD104 for a maximum of 1000MB of data looks like it’s explicitly designed to make movie streaming cost prohibitive (to protect an in room movie distribution monopoly?). Of course (as the SOPA/PIPA advocates continuously fail to appreciate) the Internet isn’t just a medium for media distribution. These limits preclude the downloading of larger apps, and get in the way of desktop video conferencing.

I think the hotels can and should do better than this. What’s kind of perverse here is that the high end places seem to be the worst culprits for this kind of behaviour (whilst many cheaper hotels offer free access to fast pipes). The same is probably also true for many airline lounges.

[1] The original developed an ever growing yellow blotch on the screen, so I sent it back.

[2] The Aussie mobile carriers seem to make it super easy for visitors to buy their services. There were a number of providers with shops right at arrivals in SYD. I wish it were the same elsewhere (but I guess roaming tariffs provide perverse incentives where it’s better to keep somebody as another firm’s customer rather than make them your own).

[3] I was told at check in that Internet was complimentary with my room rate, so I’m not expecting to see the AUD24/day charge, but after only a morning of emailing and reading (no serious video or app usage) I’ve already blown past my 100MB quota, and with work to do I’ve selected the faster more expensive option – it’s unclear whether that will be charged.

Filed under: could_do_better, grumble, technology, travel | 1 Comment

Tags: download, hotel, Internet, lounge, mobile, speed, travel

Nanode thermometer

I first heard about Nanode (a low cost board that brings together Arduino and ethernet) via Andy Piper, then a few days later I had the fortune of seeing its creator Ken Boak speak at London’s Open Source Hardware Users Group (OSHUG). The week afterwards Ken was at the excellent Monkigras event, and did a short talk about Nanode; best of all, he had some with him. I picked up a Nanode RF kit for £20 and put it together that evening.

Nanode RF - mine doesn't have the RFM12B transceiver fitted

All did not go exactly according to plan. At first the only instructions I could find were for the older Nanode 5. I got to the point where I had a running system, but programming wasn’t working. A bit more digging around turned up the correct Nanode RF build guide, and I realised that I’d missed out putting in a voltage jumper (step 25). I was then able to get some code onto my Nanode, though more by good luck than good planning – I was still unaware of the vital step of pressing the reset button when a sketch finishes building (but immediately before it starts downloading) – that took some trial and error to discover.

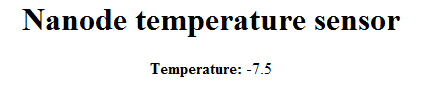

Once I had a working Nanode I could see it connecting to my home network, and then serving basic web pages. The time had come for a basic application. Since we were in a cold snap, and snow was forecast, I thought a temperature sensor would be fun. I found some code for a thermistor based project, and adapted it to the TMP36 temperature sensor that comes with the Oomlout ARDX kit. I then popped it out in the garage so I could measure the external temperature (but have a handy network connection).
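For anybody wanting to do a similar adaptation, the conversion from a raw analogue reading to a temperature can be sketched roughly as below. This is not the code that ran on my Nanode, just a minimal illustration in plain C++. The function name `tmp36_celsius` is made up for the example, and it assumes a 10-bit ADC and a 5V reference by default (pass the real reference voltage if the board runs at something else). The one sensor fact it relies on is that the TMP36 outputs 500mV at 0C and rises by 10mV per degree.

```cpp
#include <cassert>

// Convert a raw ADC reading from a TMP36 into degrees Celsius.
// Assumptions for illustration: 10-bit ADC (readings 0..1023) and a
// reference voltage of 5.0 V unless the caller says otherwise.
double tmp36_celsius(int adc_reading, double vref_volts = 5.0) {
    assert(adc_reading >= 0 && adc_reading <= 1023);
    // Scale the reading to a voltage at the sensor output.
    double volts = adc_reading * vref_volts / 1024.0;
    // TMP36: 0.5 V offset at 0 C, then 10 mV (0.01 V) per degree.
    return (volts - 0.5) * 100.0;
}
```

With those assumptions a reading of 96 works out to about -3.1C, and a reading of 154 to about 25.2C.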

It’s still really cold! Most of the week it’s been around -3C, but it obviously took a plunge last night. I’m now wishing I’d done something more sophisticated so I could log temp over time and draw charts. I feel some tinkering with MQTT coming on.

Filed under: Arduino, code | 2 Comments

Tags: arduino, Nanode, network, sensor, temperature, thermometer, web

In my previous post about Raspberry Pi I noted that it will probably wipe out the existing market for thin client devices. It won’t stop there though. Next up – media players…

Hardware wise the Raspi is very close to existing media players like Apple TV (2nd gen) or something like a WD TV Live – ARM CPU, HDMI, Network and USB connections. All it’s really missing is a box (which is optional anyway if it’s going to be hidden behind a TV) and remote control (or smartphone app). The software side of things has already been sorted out with XBMC, recently demoed on the Raspi (I had originally thought along the lines of VLC media player, but XBMC looks like a more complete solution). For those that have Apple stuff it looks like AirPlay has also been figured out.

What’s kind of funny here is that most of the popular media players have developed hacking communities around them. How much easier life is going to be once we have hardware (and a software stack) that’s open to tinkering from the get go.

My own aged Kiss DP-600 is sorely in need of replacement, so hopefully the Raspi starts coming off the production line soon. Of course that means I’ll be needing at least two – one for general hacking and another for the living room. Then there’s the upstairs TV, and the kids play room. I can see myself buying LOTS of Raspberry Pis – I think they’re going to need a bigger factory, maybe even a number of bigger factories.

Filed under: media, Raspberry Pi, technology | Leave a Comment

Tags: AirPlay, HDMI, media, player, Raspberry Pi, Raspi, remote, streaming, XBMC

My friend Randy Bias very kindly came in and did a web conference presentation at work this week on his views of cloud computing (which are well summarised in a post he did at the end of last year). Inevitably the topic of security came up, and Randy, drawing on his past experience in the world of infosec, strongly advocated building security in rather than bolting it on. I’m also a fan of this approach, but it raised a couple of questions for me:

- If we’re building security in, then how do we audit the controls?

- Will platform as a service (PaaS) give us a way to build security in such that it can be evaluated independently of the custom code running on it?

The audit paradox – my first encounter

A few years ago I was approached by a team that wanted to build a client facing web service. I explained that they’d need an XML gateway/firewall, and that we’d been looking at various solutions in the marketplace. They asked why such an expensive beast was necessary, so we got into the details of XML attacks and mitigations. I’ll pick on just one thing – schema validation. The XML appliance – a piece of bolt on security – could validate an incoming message to ensure that it conformed to the expected schema.

‘No need for that’, they said, ‘we can follow the MSDN guidelines for schema validation’ (they were using .Net) – this was a genuine offer to build security in rather than bolt it on. ‘I think the IT Risk and Security guys will have a problem with that’, was my response, ‘how do they know that you’ve done it right?’.

There lies the issue – bolt on security is easy to audit. There’s a separate thing, with a separate bit of config (administered by a separate bunch of people) that stands alone from the application code.

Code security is hard. We know that from the constant stream of vulnerabilities that get found in the tools we use every day. Auditing that specific controls implemented in code are present and effective is a big problem, and that is why I think we’re still seeing so much bolting on rather than building in.

Can’t bolt on in the cloud

One of the challenges that cloud services present is an inability to bolt on extra functionality, including security, beyond that offered by the service provider. Amazon, Google etc. aren’t going to let me or you show up to their data centre and install an XML gateway, so if I want something like schema validation then I’m obliged to build it in rather than bolt it on, and I must confront the audit issue that goes with that.

PaaS to the rescue?

‘Constraints can be a win’ is one of Randy’s comments that sticks in my head. What if the runtime platform has the security built in rather than my custom code? What if security functionality, such as schema validation, is imposed rather than optional, and it’s the platform that I audit (once for all the applications)? That truly would be a win.

PaaS offers the promise of being able to do this, but frankly we’re not there yet. If we look at the antecedents of PaaS – the language frameworks – then there is cause for cautious optimism in the long term, e.g. Spring Security came along some time after Spring. A change in emphasis is needed though – security frameworks normally have lots of stuff that can be used, but precious little that must be used. If we return to the problem of auditability, the problem that must be solved is clearly providing evidence of a control and its effectiveness. This means that it must be always on, or clearly expressed in some configuration metadata rather than buried in code.

Infrastructure as a service shows us that this can be done e.g. the AWS firewall is very straightforward to configure and audit (without needing to reveal any details of how it’s actually implemented). What can we do with PaaS, and how quickly?

Filed under: architecture, cloud, security, software | 6 Comments

Tags: audit, bolt on, build in, cloud, compliance, firewall, gateway, iaas, paas, schema, security, validation, xml

Arduino Simon – a little more

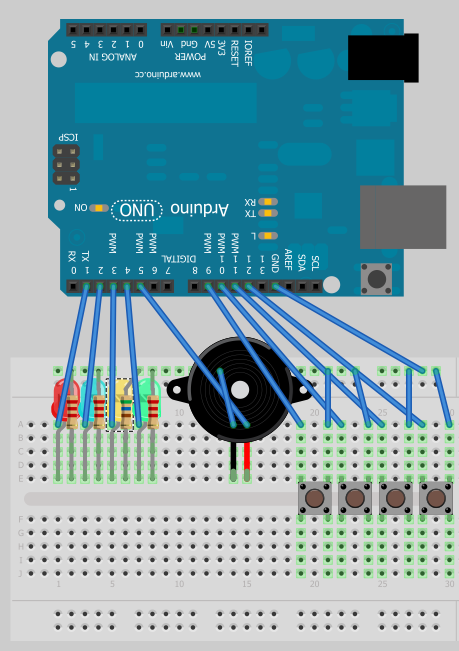

I wanted to add some diagrams to my original post, but didn’t have the right tools at hand.

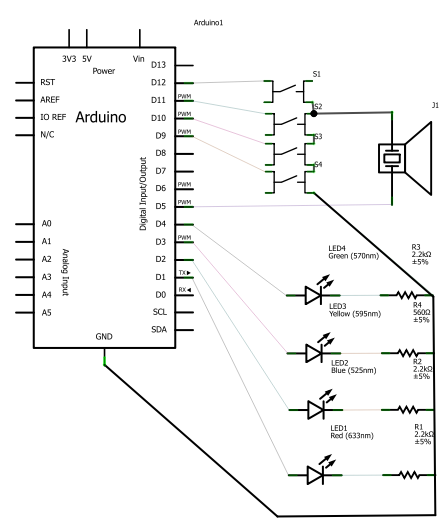

After some digging around I found a mention from @psd of Fritzing. I’m pretty happy with the results:

I’ve put the Fritzing file up onto github, and also created a project on the Fritzing site.

Filed under: Arduino, making | 1 Comment

Tags: arduino, breadboard, circuit, diagram, Fritzing, layout, Simon

The title for this post comes from an old naval tradition, where a ‘make and mend’ was time given to fix up clothing. These days sailors get their uniform from stores, and personal clothes from shops like the rest of us; so a modern day ‘make and mend’ is simply some time off. With the rest of the family engaged in various performing arts yesterday I got some time off.

Mending

I noticed the other day that my Vespa that I use to get to the railway station in the morning wasn’t sitting on its stand properly. The bracket to push the stand down had been bent out of shape, causing it to lift the scooter on one side a little. My attempt to bend it back was a massive fail, as it just snapped off – doh! If there’s a next time I might choose a less freezing day so the metal is less brittle, or maybe even warm things up with a blow torch.

One of the more fun bits of navy engineering training was a series of workshops, which included a couple of days of welding. It didn’t make me any sort of expert, but the instruction, along with an arc welding machine I was given as partial payment for an IT consulting gig as a teenager means that I can at least make an attempt at getting bits of metal together:

I’m sure it won’t last, but it was a bit of fun, and puts off the day of having to buy a new stand.

Making

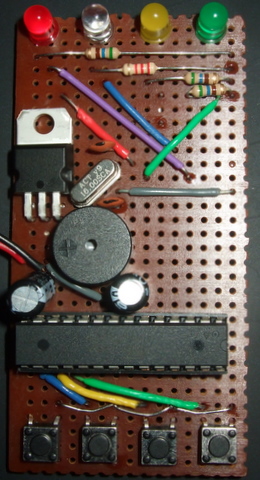

Having already fixed up the code for my Arduino Simon it was time to make a more permanent toy using some stripboard. I got an Arduino component bundle from Oomlout and some other bits and bobs like switches and a buzzer from eBay. Sadly when I went looking for stripboard I didn’t have a large enough piece to follow the instructables guide, so I had to improvise with what was on hand:

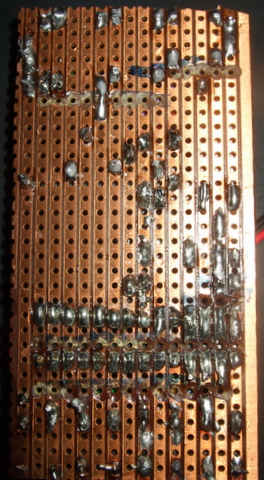

It might be technically possible to fabricate the circuit with a smaller piece of stripboard, but I’m pretty happy with how things came out. The reverse side shows that I was able to keep track cutting to a minimum (and that if I hadn’t been making it up as I went along I could have figured out that the yellow LED could go straight to its output pin):

More by good luck than good judgement it seems that I’d chosen the right pins as input and outputs to give me a simple and efficient board layout. The ATMega328 pinout was of course essential to figuring out where things needed to go.

Amazingly it worked perfectly first time with the already programmed chip from the Arduino Uno. I thought I could then just drop the chip from the component bundle into the Uno and leave it at that, but when I went to program the game I got:

avrdude: stk500_getsync(): not in sync: resp=0x00

Oops. This turned out to be because the component bundle comes with a Duemilanove bootloader rather than Uno. I was able to program the game by selecting Duemilanove in the Tools menu of the Arduino IDE, then I swapped the chips over so that my Uno bootloader chip was back in its rightful home.

I have one more change planned before I move on from this project. Right now it’s powered by a 9v battery, which is both clumsy and fragile. I’ve ordered a switchable battery box to hold 4x AAA, and I plan to hot glue the stripboard onto that so that the sharp bits on the bottom are dealt with.

Conclusion

Fixing the stand on my scooter wasn’t the best use of my time, and I’m sure I could have got loads of cheap electronic toys for the time and materials spent on my Simon. But that wouldn’t have been anything like as much fun, and I think it’s great to be able to have home made toys – especially when the kids do some of the soldering.

Filed under: Arduino, code, howto, making | Leave a Comment

Tags: arduino, avrdude, bootloader, code, electronics, game, mend, Simon, stk500_getsync():, stripboard, welding

Arduino Simon

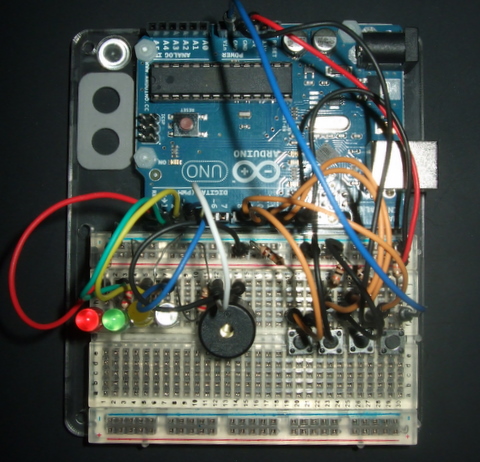

My son got a great Xmas present in the shape of a Starter Kit for Arduino from Oomlout. After doing some of the basic projects I decided we needed something that we could get our teeth into. After a little pondering Simon came out as a worthwhile challenge. Back in the 80s I’d written a version of Simon that was published in Commodore Computing International, so I thought it would be fun to do a hardware version. A bit of poking around the web revealed that this has been done before, but I decided to start from scratch rather than copying the design and code from others (otherwise where’s the fun?).

I expected that this would be a project that might take a few weekends of tinkering, but in the end I had a playable system done in around 90 minutes. Arduino/Processing is a really productive environment, especially if you’re already familiar with electronics prototyping and a bit of C.

The electronics

- Lights – 4 LEDs (Red, Green, Yellow and Blue) between digital output pins and ground with appropriate series resistors.

- Sound – a piezo buzzer between a digital output pin and ground.

- Buttons – 4 – between ground and digital inputs. Right now I have 10k pull up resistors, but they’re probably not necessary. Tip – twist the legs of PCB mount buttons through 90 degrees to stop them pinging out of the breadboard.

The code

I’ll let the code speak for itself, but I’ve put it at the bottom to avoid breaking the flow of things[1]. Update 17 Jan – the original code is below, but revised code is on github.

Todo

- Change the tones so that they match up with the original Simon.

- Check for when a correct sequence of 20 is entered, and do some sort of winning ritual (right now the array will just overflow).

- Resequence the inputs. Blue was on Pin 13, but that was before I discovered that Pin 13 is special.

- Make use of the internal pull up resistors for the buttons (and ditch the 10K ones on the board).

- Progressively speed things up when the sequence gets longer.

- Put the code up on Github.
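The first two items on that list come down to a bit of arithmetic and a bounds check, which can be sketched in plain C++ as below. This is an illustration rather than the revised code: the names `half_period_us`, `sequence_full` and `kMaxSequence` are made up for the example. The only things taken from the original sketch are that `playtone` wants half the period of the tone in microseconds, and that the sequence array has 20 slots.

```cpp
#include <cassert>
#include <cmath>

// Capacity of the sequence array in the original sketch (sequence[20]).
const int kMaxSequence = 20;

// Half the period of a tone, in microseconds, for a given frequency.
// This is the unit the playtone() function in the sketch expects.
long half_period_us(double frequency_hz) {
    assert(frequency_hz > 0.0);
    return std::lround(1000000.0 / (2.0 * frequency_hz));
}

// True once the player has completed every slot in the array, i.e. the
// point at which the game should celebrate a win instead of writing
// another value past the end of the array.
bool sequence_full(int count) {
    return count >= kMaxSequence;
}
```

Going the other way, the four values used in the sketch (1915, 1700, 1519 and 1432) correspond to roughly 261, 294, 329 and 349 Hz. A check like `sequence_full(count)` would need to run before a new value is added to the sequence.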

And then

The kids have really got into playing with this, so I’ve bought some components to transfer things onto a more permanent stripboard based version.

I’m also tempted to see if I can transfer things over to an MSP430 based microcontroller. That would be much cheaper to make a permanent toy out of (especially since TI send out free samples), but it brings with it the extra challenge of having to multiplex inputs and outputs as the MSP430s aren’t graced with the number of pins found on Arduino’s ATMega chip. This would probably involve Charlieplexing the LEDs and coming up with a resistor ladder for input.
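To get a feel for how many pins Charlieplexing would need, here is a rough sketch in plain C++. It isn’t tied to any particular MSP430 part, and the names (`charlieplex_capacity`, `min_pins_for_leds`, `charlieplex_pins`, `PinPair`) are invented for illustration. It relies on the usual property of the technique: with n tri-state pins, each ordered pair of pins can drive one LED, giving n × (n − 1) LEDs, with all other pins left as high impedance inputs while one LED is lit.

```cpp
#include <cassert>

// Which pin to drive high and which to drive low to light one LED.
// Every other pin would be set to a high impedance input.
struct PinPair {
    int high;
    int low;
};

// Number of LEDs that can be addressed with the given number of pins.
int charlieplex_capacity(int pins) {
    return pins * (pins - 1);
}

// Smallest number of pins that can address at least `leds` LEDs.
int min_pins_for_leds(int leds) {
    int pins = 2;
    while (charlieplex_capacity(pins) < leds) {
        ++pins;
    }
    return pins;
}

// Map an LED index (0-based) onto an ordered pair of distinct pins.
PinPair charlieplex_pins(int led, int pins) {
    assert(pins >= 2 && led >= 0 && led < charlieplex_capacity(pins));
    int high = led / (pins - 1);
    int low = led % (pins - 1);
    if (low >= high) {
        ++low;  // skip the case where both ends would be the same pin
    }
    return {high, low};
}
```

On this reckoning the four Simon LEDs would fit on three pins, with two of the six possible positions left unused.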

Update 22 Jan – I did some follow up posts covering the stripboard build and with some diagrams of the original.

[1] The code:

const int led_red = 1; // Output pins for the LEDs

const int led_green = 2;

const int led_yellow = 3;

const int led_blue = 4;

const int buzzer = 5; // Output pin for the buzzer

const int red_button = 10; // Input pins for the buttons

const int green_button = 11;

const int yellow_button = 12;

const int blue_button = 9; // Pin 13 is special - didn't work as planned

long sequence[20]; // Array to hold sequence

int count = 0; // Sequence counter

long input = 5; // Button indicator

/*

playtone function taken from Oomlout sample

takes a tone variable that is half the period of desired frequency

and a duration in milliseconds

*/

void playtone(int tone, int duration) {

for (long i = 0; i < duration * 1000L; i += tone *2) {

digitalWrite(buzzer, HIGH);

delayMicroseconds(tone);

digitalWrite(buzzer, LOW);

delayMicroseconds(tone);

}

}

/*

functions to flash LEDs and play corresponding tones

very simple - turn LED on, play tone for .5s, turn LED off

*/

void flash_red() {

digitalWrite(led_red, HIGH);

playtone(1915,500);

digitalWrite(led_red, LOW);

}

void flash_green() {

digitalWrite(led_green, HIGH);

playtone(1700,500);

digitalWrite(led_green, LOW);

}

void flash_yellow() {

digitalWrite(led_yellow, HIGH);

playtone(1519,500);

digitalWrite(led_yellow, LOW);

}

void flash_blue() {

digitalWrite(led_blue, HIGH);

playtone(1432,500);

digitalWrite(led_blue, LOW);

}

// a simple test function to flash all of the LEDs in turn

void runtest() {

flash_red();

flash_green();

flash_yellow();

flash_blue();

}

/* a function to flash the LED corresponding to what is held

in the sequence

*/

void squark(long led) {

switch (led) {

case 0:

flash_red();

break;

case 1:

flash_green();

break;

case 2:

flash_yellow();

break;

case 3:

flash_blue();

break;

}

delay(50);

}

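// function to build and play the sequence
void playSequence() {
  if (count >= (int)(sizeof(sequence) / sizeof(sequence[0]))) {
    count = 0; // array is full - start again rather than write past the end
  }
  sequence[count] = random(4); // add a new value to sequence
  // play the values added in earlier rounds; the one just added
  // is not played until the next round
  for (int i = 0; i < count; i++) { // loop for sequence length
    squark(sequence[i]); // flash/beep
  }
  count++; // increment sequence length
}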

// function to read sequence from player
void readSequence() {
  for (int i = 1; i < count; i++) { // loop for sequence length
    while (input == 5) { // wait until button pressed (a press reads LOW)
      if (digitalRead(red_button) == LOW) { // Red button
        input = 0;
      }
      if (digitalRead(green_button) == LOW) { // Green button
        input = 1;
      }
      if (digitalRead(yellow_button) == LOW) { // Yellow button
        input = 2;
      }
      if (digitalRead(blue_button) == LOW) { // Blue button
        input = 3;
      }
    }
    if (sequence[i-1] == input) { // was it the right button?
      squark(input); // flash/buzz
    }
    else {
      playtone(3830,1000); // low tone for fail
      squark(sequence[i-1]); // double flash for the right colour
      squark(sequence[i-1]);
      count = 0; // reset sequence (this also ends the for loop)
    }
    input = 5; // reset input
  }
}

// standard sketch setup function
void setup() {
  pinMode(led_red, OUTPUT); // configure LEDs and buzzer on outputs
  pinMode(led_green, OUTPUT);
  pinMode(led_yellow, OUTPUT);
  pinMode(led_blue, OUTPUT);
  pinMode(buzzer, OUTPUT);
  pinMode(red_button, INPUT); // configure buttons on inputs
  pinMode(green_button, INPUT);
  pinMode(yellow_button, INPUT);
  pinMode(blue_button, INPUT);
  randomSeed(analogRead(5)); // random seed for sequence generation
  //runtest();
}

// standard sketch loop function
void loop() {
  playSequence(); // play the sequence
  readSequence(); // read the sequence
  delay(1000); // wait a sec
}
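
The tone values passed to playtone() in the sketch are half-periods in microseconds, so they are not obviously related to notes at first glance. Working them back: 1915 comes out at about 261 Hz, 1700 at about 294 Hz, 1519 at about 329 Hz and 1432 at about 349 Hz (roughly C, D, E and F), while the 3830 fail tone is about 130.5 Hz, an octave below the red tone. Here's a minimal sketch of that conversion. The helper names halfPeriodMicros and frequencyHz are my own and not part of the Oomlout sample, and it's written as plain C++ so it can be checked on a desktop rather than on the board.

```cpp
#include <cmath>

// Half-period in microseconds for a square wave of the given frequency.
// One full cycle lasts 1,000,000 / hz microseconds; playtone() holds the
// buzzer pin HIGH for half of that and LOW for the other half.
// (Hypothetical helper, not part of the original sketch.)
long halfPeriodMicros(double hz) {
    return std::lround(1000000.0 / (2.0 * hz));
}

// Inverse: the frequency produced by a given playtone() tone value.
// (Hypothetical helper, not part of the original sketch.)
double frequencyHz(long halfPeriodUs) {
    return 1000000.0 / (2.0 * halfPeriodUs);
}
```

To try a different note, pick a frequency, run it through halfPeriodMicros, and pass the result as the first argument to playtone().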

Filed under: Arduino, howto, making

Tags: arduino, breadboard, button, buzzer, code, electronics, game, LED, pin 13, Simon

I ordered this card to go in my latest Microserver running the Windows 8 Developer Preview, but before it arrived I found an old NVidia Quadro NVS 285 lying around, which fitted the bill perfectly for doing dual DVI. My next thought was to upgrade the NVidia GeForce 210 in my (now rarely used) workstation. Sadly it doesn’t fit in there, as the heatsink bends around to the other side of the board, which in my workstation means it’s fighting a losing battle for space against the RAID card below. I think it would fit fine into the Microserver, provided that the neighbouring PCIe slot isn’t occupied (or is filled with something small).

I could have put it into my ‘sidecar’ Microserver, but that would be a waste since I pretty much never use that machine locally. Thus my daughter’s box became the winner. This had an old X800 in it, which despite being on the recommended hardware list appeared to be insufficient for the task of running Lego Universe.

Installation

The fold over heat sink wasn’t a problem without another board directly below. On booting up, the machine (running Windows 7 x64) didn’t pull down a new driver, falling back to Standard VGA and the low resolutions that entails. I was, however, able to update the driver via Windows and get back to glorious 1920×1080. This seemed like a better idea than using the probably outdated drivers on the supplied CD or the 100MB+ download from ATI (which must have huge amounts of annoying cruft in it).

Performance

The 2D performance took a slight step back in Windows Experience Index (5.8 -> 4.6) but the 3D performance leapt up to 6.1. Oddly this means that the oldest PC still in use in the house now has the highest WEI. Bringing DirectX 10 and 11 to the table surely helps, and the good news is that Lego Universe now starts up perfectly.

Conclusion

Performance wise this is the card I should have bought instead of the GeForce 210, but I’d have been out of luck fitting it to the intended machine due to the fold over heat sink. If you have sufficient space, and want a low end GPU that can drive a decent size monitor, then this card seems to beat the NVidia on almost every measure. It looks like other OEMs like VisionTek make similar cards with different heat sinks, which may in some cases be a better fit. I’m happy that my daughter’s machine can now run stuff that didn’t work before.

Update 1 – 9 Dec 2011 – Tom’s Hardware has a really good comparison chart showing the relative performance of various families of NVidia, ATI and Intel chipsets.

Filed under: review, technology

Tags: 5450, benchmark, DX10, DX11, fit, HD, heatsink, Lego Universe, Microserver, Radeon, Sapphire, WEI