TL;DR

We need to make space between online activities if we want to remember and appreciate them.

Background – are virtual meetings just running together?

One of my Leading Edge Forum (LEF) colleagues sent me this Washington Post article, ‘All these Zoom birthdays and weddings are fine, but will we actually savor the memories?’, which basically boils down to saying that online meetings run together in a way that makes it hard to tell one from another. I think one of the issues here might be a lack of liminal space:

The word liminal comes from the Latin word ‘limen’, meaning threshold – any point or place of entering or beginning. A liminal space is the time between the ‘what was’ and the ‘next.’ It is a place of transition, a season of waiting, and not knowing.

In essence, if you’re sat in front of the same laptop, in the same room, then don’t be surprised if all your online meetings run together into some amorphous glob.

Some contrasting personal experiences

Reflecting on some contrasting personal experience of the last few months (and I realise that I’m fortunate to live in a large(ish) house with a variety of rooms on offer):

- Drinks and games with friends – we’ve done a few sessions where we’ve joined friends on Google Meet and played Jackbox party games. The setup I used for that had the friends or the game on my larger living room TV screen rather than just using my laptop standalone.

- Similarly, live comedy shows (mentioned in my last post on The Front Row) have been watched on the TV, but also with surround sound switched on (this is an area where I think the platform providers can help by allowing show producers to stream different audio sources [performers/audience] into different channels [centre/left/right/rear]).

- For drinks with a particular group of friends I’ve been using my iPad in the kids’ games room – so again a different device and place from ‘work’.

- I’ve done a couple of whisky tastings recently where the tasting was on a Facebook or YouTube stream, with a parallel tasting party on Zoom. I used my ‘work’ setup for that (as the multiple screens are handy), but I think in that case the different tastes and smells of the whisky make for a distinct experience.

My sense here is that part of what makes events memorable is the liminal spaces around them. It’s not just that I go to a restaurant or comedy club, but also the journey there and back. Mostly being at home during lockdown means there are fewer opportunities to pass through liminal spaces, but there are still ways that we can create different spaces for and around virtual events to make them more memorable.

A connection to learning?

Related… (I think), following Jez Humble’s endorsement I’ve been reading ‘Learning How to Learn’ with the family. The book talks about focus time and diffuse time. If we’re going to focus on things in virtual events, then we need diffuse time between them (by transiting through a liminal space).

Conclusion

If we want to get value out of online work events, and enjoyment from online social events, then I think we need to create liminal space between them. At a minimum, that probably means putting down the laptop and walking away a few times throughout the day.

Filed under: culture

Tags: events, learning, liminal, space, virtual, whisky

The Front Row

This seems important enough not to just be a note in my July 2020 post when it comes.

I’ve seen a new interaction model emerge for virtual events, which I think maps onto Fred Wilson’s 100/10/1[1] “rule of thumb” with social services:

- 1% will create content

- 10% will engage with it

- 100% will consume it

What we seem to have been missing at virtual events is the means for the 10% to engage, which is where The Front Row comes in…

The Front Row

Last week (and again last night) I joined a live comedy stream featuring a couple of my favourite comedians, Rachel Parris and Marcus Brigstocke, hosted by Always Be Comedy. It was a little weird at first because I joined a Zoom call as a viewer to find a dozen connections from strangers chatting to each other. Those people weren’t just there to watch the comedy, they were ‘The Front Row’[2] at the ‘venue’, they were there to be part of it. When Rachel and Marcus started their show they (and we) could still hear The Front Row (and they could also see comments on chat from the whole audience).

This is a good thing for the presenters, who would otherwise be getting no feedback from their audience, and it’s also (mostly) a good thing for the broader audience, as the laughs coming from elsewhere make the whole experience more like an in-real-life event.

So what?

You may be thinking, ‘That’s great Chris, but I’m not into live comedy, and I’m definitely not into live comedy on another web conference when I’ve spent all day staring at a screen’; but I bring this up because I think The Front Row is just an early example of how audiences are going to be empowered to engage with online events.

Some other things I’ve been seeing

At the recent Virtual DevOps Enterprise Summit the presenters were available in Slack for Q&A whilst the recordings of their talks/demos or whatever were playing, and this resulted in some really good interactive discussions about the topics at hand.

I watched the opening keynote for Google’s Cloud Next event on DatacenterDude’s Watch Party[3], which brought people together from Nick’s online communities on Discord and YouTube to chat about the event (a bit like a group of friends/colleagues sitting together at a conference and sharing some thoughts).

What next?

I don’t know. We’re all learning about this stuff at the moment. The Front Row is just one experiment in engagement that’s showing some promise. I hope that event producers try more stuff out, and I hope platforms introduce more features to help with engagement. We also have a ton to learn from YouTube and Twitch streamers (and similar) who’ve been engaging with (often huge) remote audiences for many years before the pandemic.

I’m adding useful things I find about streaming to my Pinboard tag on the topic.

Dick Morrell also pointed me at Jono Bacon’s People Powered as a book to help understand (online) communities, but I’ve not had the chance to start reading it yet.

Update 9 Sep 2020

BBC News is covering the use of the front row in online comedy gigs with its (video) piece Lockdown comedy: How coronavirus changed stand-up.

Also Jono Bacon is starting up a book club for People Powered.

Notes

[1] I find myself often referring to this as the ‘rule of 9s’ – 90% consumer, 9% curator, 0.9% creator (and I know that leaves a stray 0.1%).

[2] Live comedy regulars will know that you don’t sit in the front row of a venue unless you want to run the risk of becoming part of the show. It’s a bit like the splash zone for shows at dolphin parks – don’t sit there unless you want to get wet. There are some comedy fans who will always choose the front row, most not so much – it’s a good reason to show up to an act on time, because you don’t want to be forced into the splash zone if you don’t want to take part.

[3] I saw some analyst commentary that Next was poorly attended, which I think fails to take account of the facts that a) analysts were given early access to the streams, so they were seeing them at a time when only press and analysts had access, and b) anybody joining a watch party wouldn’t be counted for the origin stream (though this is something that YouTube/Google should be able to get straight in terms of fan out).

Filed under: marketing, presentation, technology

Tags: audience, comedy, engagement

June 2020

As another month comes to an end, a quick digest of things that June brought…

Black Lives Matter

June seems to have been another one of those months full of “there are weeks where decades happen”. As a family we spent one of those weeks educating ourselves a little. Starting out with the BBC’s ‘Sitting In Limbo’ about the Windrush scandal we went on to 12 Years a Slave, 13th (thanks for the pointer Bryan), Desiree Burch’s Tar Baby and The Hate U Give. We almost didn’t get past the trauma of 12 Years a Slave, and clearly if that stuff is traumatic to watch in a movie it’s even more traumatic to have it as part of a cultural heritage.

Windows upgrades

I upgraded my desktop and laptop to Windows 10 version 2004, which brings with it the Windows Subsystem for Linux version 2 (WSL2), which has some noteworthy improvements over the earlier WSL.

I also upgraded to the new Chromium-based Edge browser, as it’s been my habit to run my work O365 subscription in Edge so that it’s partitioned from personal stuff, and hopefully something like the Microsoft employee experience.

And there’s the new Terminal application. I’m still a little sad that it doesn’t include an X-Server, but it’s come on a long way since I first looked at it. It’s taken up residence in my pinned applications, and I find myself jumping in there if I need to really quickly try something on a Linux command line.

Pi stuff

David Hunt’s post on using a Pi Zero with Pi Camera as USB Webcam inspired me to try that out. Unfortunately the cheap Pi Zero camera I bought didn’t have the low light performance to work at my desk. But that got pressed into service as a replacement for my Pi A MotionEyeOS when its USB WiFi dongle stopped working. I’ve subsequently bought the new Pi HD camera, and that certainly does have the right performance to be a better webcam.

Along with the new camera I got another Pi 4 with a gorgeous milled aluminium heatsink case from Pimoroni.

Jo (@congreted0g) put me onto cheap HDMI-USB adaptors now available on eBay. I managed to snag one for £8.79. It’s great to be able to see a Pi boot in Open Broadcaster Software (OBS) on my desktop rather than messing around with monitor inputs (especially since my ‘lab’ monitor has found a new home as part of my wife’s teaching from home rig).

Conferences

There are a whole bunch of conferences I should have been at over the past few months, but they’ve been delayed and refactored as virtual due to Covid-19. June brought the first batch of events that kept to their original schedule whilst switching from in person to online – CogX, and DevOps Enterprise Summit (DOES).

DOES definitely managed to be more polished in terms of presentation. Organiser Gene Kim has obviously been thinking about things a lot, as laid out in his Love Letter to Conferences; and he was also able to enlist the help of Patrick Debois, who’d already learned a lot from a previous event. Benedict Evans also has some good thoughts in Solving online events, because it’s going to be a while before we rush back to planes and hotels and buffets (and it might just be a good thing to have much larger communities engaging online).

It was also good to see AWS Summit going fully online. I’ve attended the London summit since it started, but for the past few years I’ve decided not to endure a visit to the ExCeL. So it was good to be able to join the breakout sessions as well as the keynotes without schlepping out to the Docklands for a performance of security theatre.

YouTube music and videos

There are times when I want to listen to or watch stuff from YouTube offline, and it turns out that ClipGrab is the tool I needed for that.

AirPods Pro

My right AirPods Pro developed an annoying rattle, and it seems that sadly this isn’t uncommon. Thankfully Apple Support were pretty swift in sending me a replacement.

Air Conditioning

The UK isn’t a place where it’s normal to have air conditioning, but the last few years have repeatedly brought days stretching to weeks when I wished I had it. Of course when the heat wave comes, the A/C units sell out in a flash. So this year I got ahead of the game by ordering a Burfam unit.

I took a bit of trouble to connect the exhaust through to a roof vent, which included making a plenum chamber adaptor fashioned from an old fabric conditioner bottle.

It worked great for my trial run, but sadly seems to have stopped working now that the mercury is passing 30C :( Let’s see how Burfam support deal with it…

Filed under: monthly_update, Raspberry Pi, technology

Tags: Air Conditioning, AirPods, CogX, DevOps, DOES, Linux, MotionEye, Windows, Windows 10, WSL2

May 2020

Inspired by Ken Corless’s #kfcblog I’m trying out monthly roundup posts for stuff that didn’t deserve a whole post of its own.

Retro

I wanted to try out RomWBW, but didn’t fancy going down the route of making more RC2014 modules, so I got myself an SC131 kit from Stephen Cousins. It’s wonderfully cute and compact, was a pleasure to put together, and worked on first boot :) Though spot what I messed up in the final stages of construction :/ Anyway, I now have something else to play Zork on.

It was also cool to see my colleague Eric Moore featured on Boing Boing for his SEL 810A restoration (and also on Hackaday).

Fitness

Since mid March I’ve been doing workouts on the garage cross trainer on work days, and Oculus Quest Beat Saber on other days. I only recently discovered that my Apple Watch can track ‘Fitness Gaming’ as a workout, which means May became my first perfect month for workouts (after April being my first perfect month for closing rings).

Food

Since getting an Ooni 3 last summer, pizza night has become a regular weekly feature, but we’ve been mixing things up with some Chicago style deep pan pizzas. I’ve been taking inspiration from this recipe. It’s not quite Malnati’s, but better than anything I’ve had in the UK.

Bodil’s ‘trick to making instant ramyun into gourmet food’ has become a firm favourite for something quick and tasty on a Sunday evening.

After spending some weeks scraping together the ingredients we were finally able to try this home KFC recipe. I don’t think it really tasted like the genuine article, but it was still a big hit with the kids.

No new restaurant recommendations this month; I miss sushi :/

Culture

The shows must go on has continued to serve up some treats, and #JaysVirtualPubQuiz has become a weekly favourite. Normal People was very binge worthy, and Star Wars The Clone Wars came to a strong finish.

Work

We finally got the open source version of DevOps Dojo out the door, and I spent some time with one of the engineers behind it on a walk through demo.

Raspberry Pi

It’s now possible to boot a Pi 4 from USB, which means you can use an SSD adaptor. I’ve set mine up so that my daughter can run Mathematica on it from her MacBook, as it’s free on the Raspberry Pi (versus £hundreds for even the student edition on PC/Mac).

Filed under: monthly_update, Raspberry Pi, RC2014, retro

Tags: fitness, food, Mathematica, Raspberry Pi, retro

April 2020 marks 55 years since Intel co-founder Gordon Moore published ‘Cramming more components onto integrated circuits (pdf)’, the paper that subsequently became known as the origin of his eponymous law. For over 50 of those years Intel and its competitors kept making Moore’s law come true, but more recently efforts to push down integrated circuit feature size have been running into limits of economics and physics that force us to consider what happens in a post Moore’s law world.

Plot by Karl Rupp from his microprocessor trend data (CC BY 4.0 license)

Filed under: InfoQ news

Tags: ARM, hardware, Moore's law, performance, x86

Synology DS420j mini review

TL;DR

The DS420j is one of my more boring tech purchases. It does exactly the same stuff as my old DS411j from nine years ago, but has enough CPU and memory to do it with ease rather than being stretched to the limit.

Background

I bought a Synology DS411j Network Attached Storage (NAS) box in late Apr 2011, and at the time remarked that it was ‘a great little appliance – small, quiet, frugal, and fast enough’.

Over time fast enough became not quite fast enough, though the issue was more with the limited 128MB of memory than with the Marvell Kirkwood 88F6281 ARM system on chip (SOC). As successive upgrades to DiskStation Manager (DSM) came along I found myself firstly paring back unnecessary packages, and then just putting up with lacklustre performance from high CPU load (caused by too little free RAM).

Having everybody at home for lockdown over the past few weeks hasn’t been easy on the NAS, with all of us hitting it at once for different stuff, so it was definitely creaking under the load. I bought a DS420j from Box for £273.99 (+ £4.95 P&P because I wanted it before the weekend rather than waiting for free delivery)[1].

The new box

Is black, and has the LEDs in different places. This is of no consequence to me whatsoever, as the NAS lives on a shelf in my coat cupboard where I never see it.

It uses the same drive caddies as before, the same power supply, and has the same network and USB connectivity.

The differences come with the new 4 core SOC, a Realtek RTD1296, which provides more than 4x the previous CPU performance, and 1GB DDR4 RAM, which is 8x more, and a bit faster.

Upgrading

Moving 12TB of storage, including everything that the family has accumulated in over 20 years is not for the faint-hearted. Thankfully Synology makes it really easy, and has a well documented process.

I’d already taken a config backup and ensured I was on latest DSM before the new NAS arrived, so when I was ready to switch it was simply a matter of:

- Powering down and unplugging the DS411j.

- Switching the disk caddies to the DS420j (they’re the same, so fitted perfectly).

- Plugging in the DS420j and powering up.

- Selecting the migration option and clicking through to DSM upgrade.

- Waiting for the system to come back up and restoring the config.

- Another restart.

- Altering the DHCP assignment in my router so that the new NAS would have the IP of the old NAS, then switching between manual IP and auto IP in DSM so that the new IP is picked up.

- Upgrading the installed packages (for new binaries?).

- Reinstalling Entware opkg (so that I can use tools like GNU Screen).

The entire process took a few minutes, and then everything was back online.

Afterwards

Using the new NAS is noticeably snappier than before, and when I run top the load averages I’m seeing are more like 0.11 than 11.0.
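As a rough way to read those numbers, a load average is easier to judge once it’s divided by the number of cores. Here’s a minimal Python sketch of that sum. The helper name is made up for illustration, the single core for the old Kirkwood SOC is my assumption, and the four cores come from the RTD1296 spec above. It also assumes a Unix-like system with Python available.

```python
import os


def load_per_core(load_average, cores):
    """Normalise a load average by core count.

    As a rule of thumb, a result above 1.0 suggests work is queuing.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return load_average / cores


# Figures from the post: about 11.0 on the old NAS, about 0.11 on the new one.
# One core for the old box is an assumption; four cores is the RTD1296 figure.
print(load_per_core(11.0, 1))
print(load_per_core(0.11, 4))

# On Unix-like systems the live 1, 5 and 15 minute averages can be read directly.
if hasattr(os, "getloadavg"):
    try:
        one_minute, _five_minute, _fifteen_minute = os.getloadavg()
        print(load_per_core(one_minute, os.cpu_count() or 1))
    except OSError:
        # The load average could not be obtained on this system.
        pass
```

On that basis the old box had several times more work queued than it could run, whilst the new one is mostly idle.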

Everything else is the same as before, but I couldn’t really expect more, as I was already running the latest DSM. I don’t ask much of my NAS – share files over CIFS and NFS, back stuff up to S3, run some scripts to SCP stuff from my VPSs, that’s it.

I think I have a few more options now for upgrading the disks as I run out of space, but I have a TB or so to go before I worry too much about that.

Conclusion

This is probably one of the most boring tech purchases I’ve made in ages. But storage isn’t supposed to be exciting, and I’m glad Synology make it so easy to upgrade from their older kit.

Here’s hoping that this one lasts 9 years (or longer). It’s just a shame that the cost of storage (£/TB) has remained stubbornly flat since 2011. The next spindles upgrade is going to be $EXPENSIVE.

Note

[1] and I now see it for £261.99 so my timing was bad :/

Filed under: review, technology

Tags: DS411J, DS420j, NAS, Synology

Quarantine cooking

Things are not normal, so I’ve been trying to mix things up a bit with cooking at home to make up for the lack of going out.

Also one of the people I met at OSHcamp last summer retweeted this, which led to me ordering a bunch of meat from Llais Aderyn.

When the box came it had:

2 packs of mince

2 packs of diced stewing steak

1 pack of braising steak

1 pork loin joint

1 lamb joint

It got to me frozen, but not cold enough to go back into the freezer, so I had lots of cooking to do over the next few days. The meat went into making:

A giant slow cooked Bolognese sauce (nine portions), which used the mince and one pack of stewing steak (as I like chunky sauces).

Slow roast pork and fries (four portions)

didn’t last long enough for a photo

Slow roast lamb (with the usual trimmings – four portions)

Shepherd’s pie (four portions) from the leftover lamb

Slow cooked casserole (six portions), which used the remaining stewing/braising steak

and that was the end of that box of meat. It’s a shame that Ben now seems to be mostly sold out, as all the meat was really tasty.

Tonight will be kimchi jjigae, inspired by @bodil’s recipe

Taking it slow

The observant amongst you will have noticed a theme. Everything was slow cooked, either in my Crock-Pot SC7500[1] or simply in a fan oven overnight at 75C. As I wrote before in The Boiling Conspiracy there is really no need for high temperatures.

My Bolognese recipe

Here’s what went into the Bolognese pictured above:

2 medium brown onions and 1 red onion, lightly sauteed before adding

2 packs mince and 1 pack stewing steak (each ~950g) which were browned off

2 tbsp plain flour

2 cans chopped tomatoes

~250g passata (left over from pizza making)

~3/4 tube of double strength tomato puree

handful of mixed herbs

ground black pepper

a glug of red wine

1 tbsp gravy granules

4 chopped mushrooms

and then left bubbling away in the slow cooker all afternoon (about 6hrs)

Note

[1] The SC7500 is great because the cooking vessel can be used on direct heat like a gas ring, and then transferred into the slow cooker base to bubble away for hours – so less fuss, and less washing up.

Filed under: cooking

Tags: Bolognese, casserole, cooking, jjigae, kimchi, lamb, meat, pie, pork, recipe, roast, slow

Background

I use Enterprise GitHub at work, and public GitHub for my own projects and contributing to open source. As the different systems use different identities some care is needed to ensure that the right identities are attached to commits.

Directory structure

I use a three level structure under a ‘git’ directory in my home directory:

- ~/git – is just so that all my git stuff is in one place and not sprawling across my home directory
  - which github – speaks for itself
    - user/org – will depend on projects where I’m the creator, or I need to fork then pull versus stuff where I can contribute directly into various orgs without having to fork and pull
      - repo – again speaks for itself

So in practice I end up with something like this:

- ~/git
  - github.com
    - cpswan
      - FaaSonK8s
      - dockerfiles
    - fitecluborg
      - website
  - github.mycorp.com
    - chrisswan
      - VirtualRoundTable
      - HowDeliveryWorks
      - Anthos
    - OCTO
      - CTOs

Config files

For commits to be properly recognised as coming from a given user, GitHub needs to be able to match against the email address encoded into a commit. This isn’t a problem if you’re just using one GitHub – simply set a global email address (to the privacy preserving noreply address) and you’re good to go.

If you’re using multiple GitHubs then all is well if you’re happy using the same email across them, but there are lots of reasons why this often won’t be the case, and so it becomes necessary to use Git config conditional includes (as helpfully explained by Kevin Kuszyk in ‘Using Git with Multiple Email Addresses’).

The top level ~/.gitconfig looks like this:

[user]
    name = Your Name
[includeIf "gitdir:github.com/"]
    path = .gitconfig-github
[includeIf "gitdir:github.mycorp.com/"]
    path = .gitconfig-mycorp

Then there’s a ~/.gitconfig-github:

[user]
    email = [email protected]

and a ~/.gitconfig-mycorp:

[user]
    email = [email protected]

With those files in place Git will use the right email address depending upon where you are in the directory structure, so each GitHub can match the commits pushed to it to the user who owns that email address.
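To illustrate how the conditional includes end up choosing an email, here’s a small Python sketch. It’s a simplified model rather than Git’s own matching code: it relies on Git expanding a pattern like gitdir:github.com/ to **/github.com/**, so the name has to be a whole path component somewhere above the .git directory. The function name, the home directory and both email addresses are hypothetical stand-ins (the real addresses aren’t shown in the config files above).

```python
from pathlib import PurePosixPath

# Hypothetical addresses standing in for the ones in the two included files.
RULES = {
    "github.com": "me@users.noreply.example",
    "github.mycorp.com": "chris@mycorp.example",
}


def email_for(gitdir, rules=RULES):
    """Return the email for the last rule whose name is a component of gitdir.

    Simplified model of includeIf "gitdir:<name>/": the name must appear as
    a complete directory name above the final component (normally .git).
    Later rules win, in the same way that later config values override
    earlier ones.
    """
    parents = PurePosixPath(gitdir).parts[:-1]  # everything above .git
    chosen = None
    for name, email in rules.items():
        if name in parents:
            chosen = email
    return chosen


# Hypothetical repository locations following the directory structure above.
print(email_for("/home/chris/git/github.com/cpswan/dockerfiles/.git"))
print(email_for("/home/chris/git/github.mycorp.com/OCTO/CTOs/.git"))
print(email_for("/home/chris/scratch/.git"))  # no rule applies, so None
```

Because the match is on whole directory names, github.com doesn’t accidentally match github.mycorp.com. To check the real thing, running git config --show-origin user.email inside a repo shows the value in use and the file it came from.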

Filed under: code

Tags: .config, directory, email, enterprise, git, github

TL;DR

Best practice gets encoded into industry leading software (and that happens more quickly with SaaS applications). So if you’re not using the latest software, or if you’re customising it, then you’re almost certainly divergent from best practices, which slows things down, makes it harder to hire and train people, and creates technology debt.

Background

Marc Andreessen told us that software is eating the world, and this post is mostly about how that has played out.

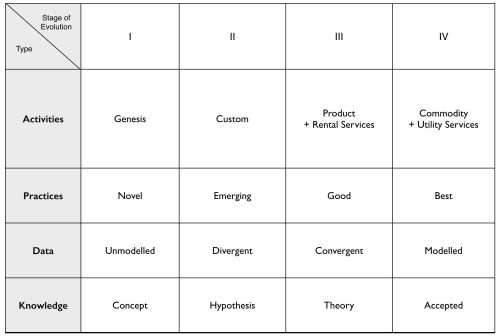

As computers replaced paper based processes it was natural for mature organisations with well established processes to imprint their process onto the new technology. In the early days everything was genesis and then custom build (per Wardley map stages of evolution), but over time the software for many things has become products and then utilities.

Standard products and utilities need to serve many different customers, so they naturally become the embodiment of best practice processes. If you’re doing something different (customisation) then you’re moving backwards in terms of evolution, and just plain doing it wrong in the eyes of anybody who’s been exposed to the latest and greatest.

An example

I first saw this in the mid noughties when I was working at a large bank. We had a highly customised version of PeopleSoft, which had all of our special processes for doing stuff. The cost of moving those customisations with each version upgrade had been deemed to be too much; and then after many years we found ourselves on a burning platform – our PeopleSoft was about to run out of support, and a forced change was upon us.

It’s at this stage that the HR leadership did something very brave and smart. We moved to the latest version of PeopleSoft and didn’t customise it at all. Instead we changed every HR process in the whole company to be the vanilla way that PeopleSoft did things. It was a bit of a wrench going through the transition, but on the other side we had much better processes. HR could hire anybody that knew PeopleSoft and they didn’t have to spend months learning our customisations. And new employees didn’t have to get used to our wonky ways of doing things, as stuff worked much the same as wherever they’d been before.

Best of all, PeopleSoft upgrades henceforth were going to be a breeze – no customisations meant no costly work to re-implement them each time.

SaaS turbocharges the phenomenon

Because SaaS vendors iterate more quickly than traditional enterprise software vendors. The SaaS release cycle is every 3-6 months rather than every 1-2 years, so they’re learning from their customers faster, and responding to regulatory and other forms of environment change more quickly.

SaaS consumption also tends to be a forced march. Customers might be able to choose when they upgrade (within a given window), but not if they upgrade – there’s no option to get stranded on an old version that ultimately goes out of support.

Kubernetes for those who want to stay on premises

SaaS is great, but many companies have fears and legitimate concerns over the security, privacy and compliance issues around running a part of their business on other people’s computers. That looks like being solved by having Independent Software Vendors (ISVs) package their stuff for deployment on Kubernetes, which potentially provides many of the same operational benefits of SaaS, just without the other people’s computers bit.

I first saw this happening with the IT Operations Management (ITOM) suite from Micro Focus, where (under the leadership of Tom Goguen) they were able to switch from a traditional enterprise software release cycle that brought with it substantial upheaval for upgrades, to a much more agile process internally, resulting in quarterly releases onto a Kubernetes substrate (and Kubernetes then took care of much of the upgrade process, along with other operational imperatives like scaling and capacity management).

Conclusion

Wardley is way ahead of me here: if you customise then all you can expect is (old) emerging practices, which will be behind the best practices that come from utility services. I did however want to specifically call this out, as there’s an implication that everybody gets lifted by the rising tide of evolution from custom to utility. But that only happens if you’re not anchored to the past – you need to let yourself go with that tide.

Filed under: cloud, software

Tags: best practice, Kubernetes, processes, saas

Turning a Twitter thread into a post.

I wrote about the performance of AWS’s Graviton2 Arm based systems on InfoQ.

The last 40 years have been a Red Queen race against Moore’s law, and Intel wasn’t a passenger, they were making it happen. I used to like Pat Gelsinger’s standard reply to ‘when will VMware run on ARM?’

It boiled down to ‘we’re sticking with x86, not just because I used to design that stuff at Intel, but because even if there was a zero watt ARM CPU it wouldn’t sufficiently move the needle on overall system performance/watt’. And then VMware started doing ARM in 2018 ;)

Graviton2 seems to be the breakthrough system, and it didn’t happen overnight – they’ve been working on it since (before?) the Annapurna acquisition. AWS have also been very brisk in bringing the latest ARM design to market (10 months from IP availability to early release).

Even then, I’m not sure that ARM is winning so much as x86 is losing (on ability to keep throwing $ at Moore’s law’s evil twin, which is that foundry costs rise exponentially). ARM (and RISC-V) have the advantage of carrying less legacy baggage with them in the core design.

I know we’ve heard this song before for things like HP’s Moonshot, but the difference this time seems to be that, core for core, the performance (not just performance/watt) is better. So people aren’t being asked to smear their workload across lots of tiny low powered cores.

So now it’s just recompile (if necessary) and go…

Update 20 Mar 2020

Honeycomb have written about their experiences testing Graviton instances.

Filed under: cloud

Tags: ARM, aws, Graviton2, x86