Netbook dead?

Over the past few days I’ve seen a few articles about netbooks. One declared that 2012 was the year the Netbook Died; another said that the netbook isn’t dead, it’s just resting (with a perhaps even more interesting Hacker News comments thread). So what’s really going on?

A couple of years ago I wrote that I wouldn’t buy another netbook. This is still pretty much true. The netbooks that are available today aren’t really any better than they were a couple of years ago (which is fine given that they were good enough), but their main crime is that they’re also not any cheaper. Stuff is supposed to get better/faster/cheaper (or at least two of those things) over time, but netbooks just haven’t kept up.

The death of my wife’s netbook (which now lives on as a media player) meant that there was space for a new machine in the household. I wanted a netbook successor, an evolved netbook, a netbook++.

The machine that I wanted to buy (but that doesn’t exist)

I was very impressed with my son’s Lenovo X121e. When it arrived it was the fastest machine in the house (by Windows Experience Index), and the subsequent addition of an SSD just made it more awesome. For much the same money (around £350) I’d expect a machine with similar physical dimensions and screen, an Ivy Bridge Core i3 (with HD4000 graphics) and a small (128GB) SSD or a larger capacity hybrid arrangement. Unfortunately such a machine doesn’t exist. The Intel based X131e is only fractionally faster than the older X121e and substantially more expensive.

The action has moved on to bigger screens

When I got the X121e I thought it was a good substitute for a MacBook Air at a much lower cost. Since then Intel’s Ultrabook marketing brand has tried to position small/light/fast machines against the MBA. It seems like netbook++ machines (like the X131e) that aren’t Ultrabooks have been positioned so that they don’t cannibalise the Ultrabook segment (by being too good/cheap). What we get instead is lots of decent spec machines that are just a bit too big. There seems to have been a recent explosion of activity in the 14″+ (mostly 15.6″) form factor with enough CPU (Core i3/i5), enough RAM (4GB) and reasonable graphics (HD4000). Most of these machines don’t come with an SSD, but that’s pretty easy and cheap to fix.

So… my first culprit for the netbook not evolving into the machine I want is Intel – trying a little too hard to preserve margins via the Ultrabook branding.

Microsoft’s part

The original netbook (Asus’s Eee 700 Series) cut a lot of corners to hit its price point. Its screen and keyboard were too small, and it had hardly any storage (and had to run Linux as there wasn’t room for Windows). All that quickly changed, and it wasn’t long before netbooks came along with good enough screens, keyboards and storage. In some cases Linux was still an option (and one that would save around £30), but the mass market wanted Windows, and the manufacturers delivered. This gave Microsoft a couple of problems:

- Margin compression – MS had to sell Windows much cheaper to get it onto netbooks and still keep the overall price where it needed to be.

- The end of the hardware driven upgrade cycle – every version of Windows until 7 had demanded better hardware, but Windows 7 changed that. Vista wouldn’t run on a netbook, and so for a while netbooks provided a channel for XP (whilst more grown up machines would run Vista). But netbooks were perfectly capable of running Windows 7, and arguably the overall user experience was better than with XP.

I ran Windows 7 Ultimate on my own netbook, but nobody was going to spend the same on their OS as they did on the hardware. Enter Windows 7 Starter edition – a crippled version of Windows just for netbooks. The trouble was that it wasn’t just Windows that was crippled; MS would only license it for a crippled hardware spec (e.g. RAM limited to 2GB).

So the second culprit for the netbook not growing up is Microsoft.

What about Windows 8?

I’ve not tried Windows 8 on a netbook, but I think it would run OK if it wasn’t for the resolution requirements that might make 1024×600 screens an issue. Windows 8 would certainly run fine on a netbook++ like the X121e or similar with its 1366×768 screen.

The margin compression issue seems to have dealt with itself, in that margins for Windows have dropped across the board (which is why it’s finding its way onto those larger £299 machines).

I did briefly try out an Atom based machine with a touch screen[1], which could be viewed as a spiritual successor to the netbook. This class of machine seems to work well with Windows 8 (as Metro really needs touch), but I can’t help feeling that the price isn’t right yet – the premium being demanded for touch is simply too much for the added utility.

The Chromebook

Some argue that the Chromebook is the successor to the netbook, and from a technical point of view there’s a lot in common between my Samsung Series 3 Chromebook and the original Asus Eee:

- Linux based

- Small SSD rather than a larger regular hard disk

Of course time has brought a few improvements:

- Better keyboard

- Larger screen

And some limitations live on:

- No Windows apps

- and an overall limited choice of what can be run

When all’s said and done I’m finding my Chromebook good enough. It’s perfect for blogging, and I spent a day doing stuff with one of my Raspberry Pis, using the Chromebook alongside for SSH access and for reading reference material/guides.

So why would I still want an evolved netbook?

If I could have bought that mythical £350 machine described above (rather than a £229 Chromebook) then it would be so that I could:

- Run VMs, using VirtualBox or Hyper-V on Windows 8 (netbooks lacked the memory and hardware virtualisation features, like VT-x, needed for this)

- Run a development environment for embedded device tinkering (netbooks might be fine for productivity workloads, but 2GB RAM is a bit tight for a decent development environment)

I’d probably have ended up sacrificing a few hours of battery life for that functionality (as well as the extra money), but that would be a fair trade-off.
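On Linux the CPU advertises hardware virtualisation in the `flags` line of `/proc/cpuinfo` (`vmx` for Intel VT-x, `svm` for AMD-V), so it’s easy to see whether a given machine would qualify. A minimal sketch in Python; a present flag only says the CPU supports the feature, firmware can still have it switched off, and Hyper-V on Windows 8 has a further requirement (SLAT) that this doesn’t check:

```python
from pathlib import Path


def has_hw_virtualisation(cpuinfo_text: str) -> bool:
    """True if any 'flags' line lists vmx (Intel VT-x) or svm (AMD-V)."""
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "flags":
            if set(value.split()) & {"vmx", "svm"}:
                return True
    return False


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only meaningful on a Linux machine
        print(has_hw_virtualisation(cpuinfo.read_text()))
```

Most of the Atom CPUs in the netbooks I’ve used would come back False here.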

Conclusion

Whilst I wouldn’t have bought another netbook, I was ready and willing to buy what the netbook should have evolved into. Sadly the market isn’t delivering that type of machine at the right price[2], which is why I bought my ARM Chromebook. It looks like Intel and Microsoft’s attempts to steer the market in their direction aren’t being entirely successful – they’ve succeeded in killing the netbook (and stopping the emergence of a successor netbook++ category), but weren’t successful in getting my money.

Notes

[1] These machines don’t seem to have hit the shops yet, and the closest I can find is the Asus S200E Vivobook

[2] There are machines at almost the right price such as the AMD based Lenovo X131e and HP DM1, but the CPU performance on those AMD APUs looked just a bit too poor to tempt me.

Filed under: technology | Leave a Comment

Tags: AMD, ARM, Chromebook, Intel, Microsoft, netbook, ssd

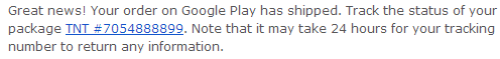

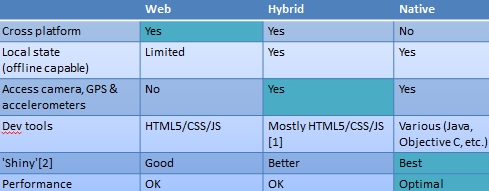

3TB

When I had to move resources.pichimney.com (and its predecessor openelec.thestateofme.com) to a new VPS I was using a little over 1TB of bandwidth per month, so I found a plan with 3TB to give me a little headroom. Christmas has obviously been busy, with people getting new Pis and playing with OpenELEC; this is a screen grab from my VPS management console taken a moment ago:

We might just squeeze through December without blowing the bandwidth limit, but it’s going to be tight. If the site goes down on New Year’s Eve then this will be why. It looks like I need to clear up some older stuff to free up some disk space too. I’ll start by getting rid of the r11xxx builds.
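Whether the month squeezes under the cap is simple arithmetic. A minimal sketch in Python, assuming a straight-line extrapolation from usage so far and treating 3TB as 3,000GB (some hosts count a TB as 1,024GB); the figure of 2,700GB used by 29 December is hypothetical, not a reading from my console:

```python
def projected_month_usage(used_gb: float, day_of_month: int, days_in_month: int) -> float:
    """Extrapolate bandwidth used so far to a full month at the same daily rate."""
    if not 1 <= day_of_month <= days_in_month:
        raise ValueError("day_of_month must fall within the month")
    daily_rate = used_gb / day_of_month
    return daily_rate * days_in_month


def fits_under_cap(used_gb: float, day_of_month: int, days_in_month: int,
                   cap_gb: float = 3000.0) -> bool:
    """True if the straight-line projection stays within the cap (assumed 3,000GB)."""
    return projected_month_usage(used_gb, day_of_month, days_in_month) <= cap_gb


if __name__ == "__main__":
    # Hypothetical figures: 2,700GB used by the end of 29 December.
    print(round(projected_month_usage(2700, 29, 31)))  # projected monthly total in GB
    print(fits_under_cap(2700, 29, 31))
```

A straight line is optimistic over Christmas, when the daily rate is still climbing, so a projection that lands within a few percent of the cap is really a warning.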

Update 1 (30 Dec 2012) – The other VPS that I use as part of the image building process has 1TB of bandwidth, so I’ve cut openelec.thestateofme.com over to that (as that URL still seems to get most of the traffic). If the main VPS gets really close to the limit then I’ll cut over resources.pichimney.com as well. It’s also gratifying to note that I estimate there have been over 32,000 downloads of OpenELEC from my site over the past month.

Filed under: Raspberry Pi | 2 Comments

Tags: bandwidth, download, openelec, Raspberry Pi, Raspi, RPi, VPS

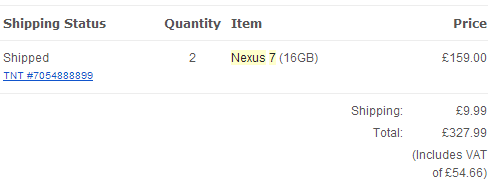

I’ve been without a laptop for a few weeks[1], and whilst tablets are fine for consumption and the occasional comment I’ve missed having a keyboard for proper creative work. I’ve been tempted by Lenovo’s Black Friday sale for the X230[2], various Ultrabooks and Netbooks[3], but by the time I’d added an SSD[4] I’d be looking at £400-£500. As soon as I heard about the new Samsung ARM based Chromebook at £229 I liked the idea[5], and once I’d had the chance to play with one in a shop I was totally convinced[6].

Since Santa didn’t bring me a Chromebook for Christmas I ordered one from John Lewis for delivery to my local Waitrose, and picked it up on the way home from a family outing this afternoon.

Things I like

The screen and keyboard are great – loads better than a 10″ Netbook, and subjectively better than the 11″ X121e. The trackpad is also nice in use, and I don’t find myself missing a trackpoint too much. The two-finger touch for right click takes a little getting used to, but isn’t a big deal.

If battery life really is 8hrs then I’ll be impressed.

Size and weight are great – my daughter thought it was an 11″ MacBook Air (having briefly used one at a friend’s Christmas Eve party).

Performance seems good, with impressive boot time, and using Chrome and Google Apps feels much the same as a decent spec PC (with an SSD).

Multi user support was a breeze to set up.

Niggles

I’m using the SSH app. When I installed this on Chrome on my PC I could launch additional sessions by opening a new tab and clicking on the app, but the app doesn’t appear when I open a new tab. If I click on the app in the launcher then it just takes me back to the existing session. To get other sessions open I need to right click on the SSH tab and duplicate it.

I also tried out an RDP app on my PC before buying the Chromebook, but didn’t see the note that ‘Chromebook ARM does not currently support native client extensions but will in ChromeOS R25 expected to be released sometime in January’.

I miss some keys… like Del, and Home, and F5. I also think the resize key should go into full screen mode.

There’s OpenVPN support, but it seems to be almost impossible for a mortal to configure.

Overall

So far it’s living up to expectations, and it feels like a great little machine for not very much money. I’ll follow up with more once I’ve put some miles on the clock (and if I find resolutions to some of the specific issues).

I’m pleased to confirm that it’s great for writing blog posts on.

Notes

[1] Technically I still have a laptop, but since I started using my Lenovo X200 Tablet as my main PC in a docking station it’s become somewhat stranded there.

[2] Which is inexplicably *loads* cheaper in the US than the UK.

[3] Apparently Netbooks are dead. It’s a real shame that nobody seems to be making Intel i3 powered ones (preferably with HD4000 GPU) at a reasonable price any more, as it seems the AMD APUs that are popular now aren’t really powerful enough – I’d happily buy another X121e at the same price.

[4] If you’re not using an SSD then you’re literally waiting your life away.

[5] More so once I read that it can run Ubuntu, though that’s still a work in progress.

[6] Though the mix-up of labels for ARM powered and Core i5 Chromebooks initially gave me some false expectations. Suffice to say that the i5 version has some nice features like Ethernet and DisplayPort, but I don’t think it’s worth the extra money.

Filed under: could_do_better, review, technology | 2 Comments

Tags: apps, ARM, Chromebook, google, RDP, Samsung, SSH, VNC

STM32F3 no dice (yet)

The STM32F3 is the latest in the lineup of Discovery boards from STMicroelectronics. There’s a smaller, cheaper board (the STM32F0) and a more expensive board with a higher spec CPU (the STM32F4). The F3 would be pretty boring on its own, so it’s been spiced up with some interesting onboard peripherals:

- A compass

- 3 axis accelerometer

- 3 axis gyroscope

- An 8 LED circle that’s ideal for direction indication

So it’s perfect for applications that you want to shake, twist or turn. It comes with a demo app that flashes the LED ring, indicates board tilt, and points North.
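Pointing North on an 8 LED ring comes down to picking the nearest of eight 45° sectors. A minimal sketch in Python (on the board itself this would be C), assuming a heading in degrees clockwise from North and hypothetical LED indices 0 to 7 running clockwise from the LED at the top of the board; the board’s own LED labels may differ:

```python
def heading_to_led(heading_deg: float, leds: int = 8) -> int:
    """Index of the LED nearest a heading (0 = top of board, indices increase clockwise)."""
    sector = 360.0 / leds
    # Shift by half a sector so each LED covers the directions either side of it.
    return int(((heading_deg % 360.0) + sector / 2) // sector) % leds


def north_led(board_heading_deg: float, leds: int = 8) -> int:
    """LED to light so that it points North when the board faces board_heading_deg."""
    # Relative to the front of the board, North sits at minus the board's heading.
    return heading_to_led(-board_heading_deg, leds)
```

The half-sector shift makes the ring round to the nearest LED rather than always rounding down, so a board facing East (90°) lights LED 6, on its left-hand side.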

I was going to knock up a quick dice app that would use shake to roll, but the first challenge was to get a development environment up and running.

IDE agony

The getting started guide has instructions for using four different integrated development environments (IDEs). Unfortunately the world of embedded software still has commercial (closed source) development tools, like enterprise development did a decade ago. I’ve been a little spoiled by TI bundling their own Eclipse based tools for the MSP430 and Stellaris dev boards. STMicro have instructions for four commercial IDEs:

- IAR Embedded Workbench (30-day time limit or 32k size limit)

- Keil MDK-ARM (32k size limit)

- Altium Tasking VX-Toolkit (details of trial version hidden behind a registration wall)

- Atollic TrueSTUDIO (30-day time limit or 32k size limit)

That 32k size limit is probably fine for a dice app, but seems silly given the capabilities of the device. I looked around for open alternatives:

- Andrei has instructions for STM32F3 Discovery + Eclipse + OpenOCD, but I didn’t want to wade into figuring out how to port stuff from Ubuntu to Windows (perhaps I should just run an Ubuntu desktop VM).

- I also took a look at Yet Another GNU ARM Toolchain (YAGARTO), but that seems to be targeted at J-Link hardware (rather than ST-Link)

- There are instructions for using Eclipse with Code Sourcery Lite, but these seem targeted at the STM32F0 board. Maybe some F3 support will come along later.

Conclusion

So instead of getting going with my STM32F3 I spent the afternoon reviewing tools and their various limitations, which is a poor show compared to getting started with Arduino, MSP430 and Stellaris. I’ll probably bite the bullet and download one of the size limited IDEs, but the dice will have to wait for another day.

Filed under: technology | 3 Comments

Tags: ARM, IDE, STM32F3

Styles of IT Governance

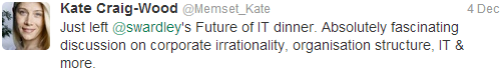

I had the pleasure of being invited along to one of Simon Wardley’s Leading Edge Forum dinners last week. Kate Craig-Wood did a great job of summing it up so I don’t have to:

I hope to return to the questions of corporate irrationality in another post.

The dinner was under the Chatham House Rule, so I won’t say who got me started on the subject of IT Governance. I was, however, provoked into a realisation: IT Governance is just a type of regulation, and much can be learned by looking at what regulators do and how that works out for stakeholders.

The three types of regulation

I’ve worked in financial services for over 12 years now, and in that time I’ve observed 3 types of regulation:

- Rules – prescriptive regulation that says exactly what you can and can’t do. The best archetype for this that I can think of is the Monetary Authority of Singapore (MAS), but there are plenty of others.

- Principles – the regulator documents a number of principles that they expect participants to adhere to, but does not go into implementation detail. The US Securities and Exchange Commission (SEC) and UK Financial Services Authority (FSA) are typical examples that spring to mind.

- Comparative – the regulator expects participants to model their behaviour on each other (with some nudging towards that being a high water mark rather than lowest common denominator). This is how things work in Switzerland under the Eidgenössischen Bankenkommission (EBK).

Of course there are interactions between the models, so quite often practices that emerge from a comparative regime get encoded into a rules based regime.

How this relates to IT

Large enterprise IT shops spend billions of dollars on staff, equipment, software and services each year. Like a government they need to show that there are rules, and that the rules are being abided by. This is where IT governance comes in.

In most cases I would observe that IT governance is essentially a rules based approach. This ends up casting people who have ‘architect’ in their title into two roles:

- Drafters of legislation – much like the armies of lawyers working behind the scenes in parliaments, congresses and assemblies the world over.

- Counsel – for those that need to understand the legislation and how to abide by it (or push through new laws).

I don’t think it’s always been like that, and if I go back to my early career in enterprise IT it seemed that we were exiting a period of principle based governance, where the principles were baked into an organisation’s culture.

The opportunity

Creating, managing and supervising a large (and ever expanding) body of rules isn’t particularly productive, so it’s worth looking at where situations arise for alternative styles of governance (and whether styles can be commingled as they are in global financial services).

A particularly strong argument for the comparative approach should exist for organisations that feel they’re behind industry norms. The analogy I use here is cavity wall insulation. If I live on a street where all of my neighbours have had cavity wall insulation installed then I don’t need to build a discounted cash flow spreadsheet to appraise the investment myself. I should instead be asking my neighbours which contractors were good and/or cheap. If I’m cheeky then I could even ask how quickly they expect their investment to pay back (and hence benefit from their analysis). A similar argument might then extend to building a private cloud, creating a data dictionary or whatever.

Principle based approaches also have a lot to offer, as they are lighter touch (from a manpower and weight of documentation perspective), and easier to achieve buy in around.

In each case, a crucial factor should be balancing the cost to the organisation of running a given governance approach versus the expected benefit (in stopping bad things from happening).

Conclusion

Just as there are a number of different approaches to regulation, so should there be parallel approaches to IT governance in the enterprise. So much of the output of rules based approaches is one size fits all, even when it clearly doesn’t; so there are lessons to be learned, and alternatives to be tried, in finding a holistic and balanced approach. The purpose of IT governance is to ensure that the organisation is doing the right thing, and this process should start with the means of governance.

Filed under: architecture | 1 Comment

Tags: architecture, comparative, enterprise, governance, IT, law, principles, regulation, rules, strategy

BYOD

I’ve spent a good part of the last year working on mobile strategy, so I get asked a lot about Bring Your Own Device (BYOD[1]). This is going to be one of those roll up posts, so that I can stop repeating myself (so much).

It’s not about cost (of the device)

A friend last week sent me a link to this article ‘2013 Prediction: BYOD on the Decline?’. My reply was this:

News at 11, an unheard of research firm gets some press for taking a contrarian position. They ruined it for themselves by trying to align BYO with cost savings. Same schoolboy error as cloud pundits who think that trend is about cost savings.

Cloud isn’t about cost. It’s about agility.

BYOD also isn’t about cost. It’s about giving people what they want (which approximately equals agility).

In fact cloud and BYOD are just two different aspects of a more general trend of the commoditisation of IT; cloud deals with the data center aspects, and BYOD with the end user devices that connect to services in the data center[2].

The enterprise is no longer in the driving seat

When I was growing up the military had the best computers, which is a big part of why I joined the Navy. Computers got cheaper, and became an essential tool for business. For a time the enterprise had the best computers, which is why I left the Navy and found work fixing enterprise IT problems. Now consumers have the best computers – in their pockets; time for another career change.

There are a number of companies out there trying to sell their device/platform or whatever on the basis of it having ‘enterprise security’ features. This is a route to market that has failed (just take a look at the RIM PlayBook) and will continue to fail, because the Enterprise doesn’t choose devices any more.

- Consumers choose devices

- Employees take their consumer devices to work

- Devices that come to work need applications to make them more useful

Even when the Enterprise is buying devices, because the trade-off between liability and control is worth it, they’re buying the same devices that employees would choose for themselves.

MAM is where the action is, MDM is a niche

For a consumer device to be useful in a work setting it needs access to corporate data, and in most cases there is a need/desire to place controls around how that corporate data is used. There are essentially two approaches to doing this:

- Mobile Application Management (MAM) – where corporate data is secured in the context of a single application or a group of connected applications (that may share policy, authentication tokens and key management). With this approach the corporate data (and apps that manage it) can live alongside personal apps and data.

- Mobile Device Management (MDM) – where corporate data is secured by taking control (via some policy) over the entire device. This is how enterprises have been dealing with end user environments for a long time, but that was usually a corporate owned device (where this approach may still be appropriate) rather than BYO. Most users are bringing their own device to work to escape from the clutches of enterprise IT (and what the lawyers make them do), so MDM is a bad bargain for the employee. It’s also a minefield for the enterprise – what happens if employee data (e.g. precious photos) is wiped off a device? Could personal data (maybe something as simple as a list of apps installed) be accessed by admins and used inappropriately?

There is a 3rd way – virtual machine based segregation – but that approach is mostly limited to Android devices at the moment, and anything that ignores the iOS elephant in the room isn’t inclusive (and thus can’t be that strategic).

MAM isn’t without its issues, as it is essentially a castle in the air – an island of trust in a sea of untrustworthiness. This will eventually be sorted out by hardware trust anchors; but for the time being there must be some reliance on ecosystem purity (can Apple etc. keep bad stuff out) and tamper (jailbreak) detection[3].

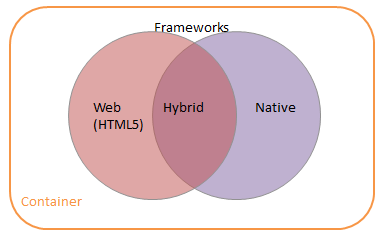

Application Frameworks

The containment of corporate data is one issue, but regardless of whether that’s done at the app level with MAM or the device level with MDM, enterprises also need to figure out how to get that data into an application. There are essentially three approaches:

- Thin Client – rather than make a new app for mobile, just project out an existing application and access it via the tablet/smartphone or whatever. This can be pretty awful from a user experience point of view as the approach depends on good network connectivity, and often does a bad job of presenting apps designed for keyboard and mouse to a device that offers touch and gestures. It is however a quick and relatively easy way of preserving an existing investment in line of business applications. The connectivity issues can be dealt with by using protocols that are better optimised for mobile networks (such as Framehawk), and it’s also possible to use UI middleware to refactor desktop apps for the BYO user experience.

- Mobile Web – take an existing web site and provide a mobile version of it, reusing as much of the existing content management and UI componentry as possible. This is usually a great approach for cross platform support, but doesn’t give the shiniest native experience (and performance can be poor).

- Native App – build something specific for a given target platform for the best user experience and performance. This can be perceived as an expensive approach, though getting mobile apps (which are after all just the UI piece of what’s usually a much larger app ecosystem) developed can be small change compared to other enterprise projects.

It’s also possible to hybridise the mobile web and native app approaches, though this involves trade-offs on performance and flexibility that need to be carefully considered. Hybrid should not be a default choice just because it looks like it covers all the bases (just look at Facebook backing out of their hybrid approach).

Conclusion

BYOD may presently look like a trend, but it isn’t some temporary fad. It’s an artefact of consumer technology transforming the role of IT in the enterprise. That transformation places demands on IT that broadly fall into two areas: containment (of sensitive data) and frameworks (to develop apps that use/present that data). MAM is the most appropriate approach to containment for BYOD, and frameworks should be evaluated against specific selection criteria to determine the right approach on a case by case basis.

Notes

[1] It’s remarkable how quickly the conversation moved on from Bring Your Own Computer (BYOC) to Bring Your Own Device (BYOD) – normally meaning a tablet, but usually expanded to include smartphones that support similar environments to tablets.

[2] At some stage in the (not that distant) future the cloud will invert, and be materially present at the edge, on the devices that we presently consider to be mere access points.

[3] For the time being things are much easier in the iOS ecosystem, which is going to get problematic when all of those shiny new Android tablets that people get for Christmas show up in the New Year.

Filed under: technology | Leave a Comment

Tags: android, architecture, BYO, BYOC, BYOD, iOS, iPad, iphone, mobile, smartphone, strategy, tablet

Raspberry Pi Satellite TV

My kids got quite into a few of the FreeSat channels whilst on a recent holiday, so I thought that after all the fun I had getting DVB-T to work on my Raspberry Pi I’d have a go at DVB-S.

Another cheap receiver off eBay

A quick search of ‘USB DVB-S’ led me to this receiver for the bargain price of £15.86, and the Linux TV Wiki seemed to confirm that it was supported. Sadly I was about to find out the difference between ‘supported’ and working out of the box; though not straight away, as it took many weeks for the receiver to get to me from Hong Kong.

Driver drama

After plugging it into my Raspberry Pi running a recent build of OpenELEC nothing happened. This wasn’t a total surprise, as I’ve recently been doing a fair bit of digging around in the driver mechanism getting DVB-T cards working for various devices.

When I took a look in ~/OpenELEC.tv/projects/RPi/linux/linux.arm.conf I found:

# CONFIG_DVB_USB_LME2510 is not set

I changed this as follows, cleared out the build directory and kicked off a rebuild:

CONFIG_DVB_USB_LME2510=m
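That one-line change is easy to script if you rebuild often. A sketch in Python, assuming the usual Kconfig conventions (`# CONFIG_X is not set` for a disabled option, `CONFIG_X=m` for a module); the `enable_module` helper is hypothetical, and Kconfig may still drop the option at build time if its dependencies aren’t enabled:

```python
from pathlib import Path


def enable_module(config_text: str, option: str) -> str:
    """Flip '# OPTION is not set' to 'OPTION=m'; append it if the option is absent."""
    disabled = f"# {option} is not set"
    out, found = [], False
    for line in config_text.splitlines():
        if line.strip() == disabled:
            out.append(f"{option}=m")  # build the driver as a loadable module
            found = True
        else:
            if line.startswith(f"{option}="):
                found = True  # already enabled (=y or =m), so leave it alone
            out.append(line)
    if not found:
        out.append(f"{option}=m")
    return "\n".join(out) + "\n"


if __name__ == "__main__":
    # Path as used in this post; adjust for your own build tree.
    conf = Path.home() / "OpenELEC.tv/projects/RPi/linux/linux.arm.conf"
    if conf.exists():
        conf.write_text(enable_module(conf.read_text(), "CONFIG_DVB_USB_LME2510"))
```

The build directory still needs clearing out afterwards so that the kernel is rebuilt with the new setting.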

Whilst OpenELEC was building I hooked the receiver up to my Windows 8 Microserver to prove to myself that it was working. I had issues there with drivers too, as the ones supplied aren’t signed, meaning that I had to restart Windows and allow the installation of unsigned drivers (thankfully the Arduino folks have a useful HowTo guide for this).

I got Satellite TV working using the supplied Blaze software, but the Pop Girl channel that my daughter likes was missing. It turned out that the Blaze setup for Astra at 28.2E didn’t include the multiplex, and I had to add it manually using details from KingOfSat. For good measure I also tried out Windows Media Center (having got a free license key from the recent Microsoft Promotion). The setup process gave me some confidence that I’d end up with a sensible EPG/channel list, but in reality there was loads missing. I’m now glad that I didn’t pay for Media Center.

With Windows play over I went back to the RPi with my lme2510 build, and things were looking promising (dmesg | grep 2510):

[ 8.644049] LME2510(C): Firmware Status: 6 (44)

[ 13.482794] LME2510(C): FRM Loading dvb-usb-lme2510c-rs2000.fw file

[ 13.482828] LME2510(C): FRM Starting Firmware Download

Sadly the adaptor wasn’t showing up in TV HeadEnd.

Firmware frustration

A quick look in /lib/firmware on my RPi showed that there wasn’t any dvb-usb-lme2510c-rs2000.fw file to be downloaded. The Linux TV Wiki and TvBoxSpy[1] had instructions for creating the firmware file, but it wasn’t obvious to me that I needed to start out with the Windows driver.

My attempt with the US2B0D_x64.sys that had installed on my Windows 8 box failed, so I installed the driver onto an old XP netbook and pulled US2B0D.sys off there. That didn’t work either, probably because it was a newer version of the file. In the end I found the right version of the driver file in a forum post. All this fuss, because the manufacturers don’t support Linux and assert copyright over their Windows drivers (and parts thereof), is a real pain; it’s hardly as if the driver firmware is of any use without the hardware.
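Splicing a firmware file out of a Windows driver is just a matter of copying byte ranges. A sketch in Python of that step only; the `(offset, length)` pairs depend on the exact driver version and have to come from the Linux TV Wiki instructions, so the hypothetical `FIRMWARE_RANGES` list is deliberately left empty here rather than guessed:

```python
from pathlib import Path


def extract_ranges(blob: bytes, ranges: list[tuple[int, int]]) -> bytes:
    """Concatenate blob[offset:offset + length] for each (offset, length) pair, in order."""
    out = bytearray()
    for offset, length in ranges:
        if offset < 0 or length <= 0 or offset + length > len(blob):
            raise ValueError(f"range ({offset}, {length}) is outside a {len(blob)}-byte file")
        out += blob[offset:offset + length]
    return bytes(out)


# PLACEHOLDER (hypothetical): fill in from the Linux TV Wiki for your driver version.
FIRMWARE_RANGES: list[tuple[int, int]] = []

if __name__ == "__main__":
    driver = Path("US2B0D.sys")
    if FIRMWARE_RANGES and driver.exists():
        firmware = extract_ranges(driver.read_bytes(), FIRMWARE_RANGES)
        Path("dvb-usb-lme2510c-rs2000.fw").write_bytes(firmware)
```

The bounds check is there because the wrong driver version is the usual failure: offsets that were right for one file can run off the end of another.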

Having found and spliced the right Windows driver file into a Linux firmware file I then had to drop it into OpenELEC, which meant mounting up the SYSTEM file with squashfs, copying everything out, adding the firmware to /lib/firmware and then using mksquashfs to build a new SYSTEM file (and md5sum to create a new SYSTEM.md5).
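The checksum step is the equivalent of `md5sum SYSTEM > SYSTEM.md5`. A sketch in Python, assuming the upgrade process accepts standard md5sum output (hex digest, two spaces, file name); it’s worth comparing against an existing `.md5` from a release before relying on that format:

```python
import hashlib
from pathlib import Path


def md5_hex(path: Path, chunk_size: int = 1 << 20) -> str:
    """MD5 of a file, read in chunks so a large SYSTEM image isn't loaded all at once."""
    digest = hashlib.md5()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_md5_file(path: Path) -> Path:
    """Write '<hex digest>  <file name>' to <file>.md5, as md5sum would."""
    md5_path = path.with_name(path.name + ".md5")
    md5_path.write_text(f"{md5_hex(path)}  {path.name}\n")
    return md5_path


if __name__ == "__main__":
    system = Path("SYSTEM")
    if system.exists():
        print(write_md5_file(system).read_text(), end="")
```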

With new SYSTEM files in hand I combined them with unchanged KERNEL files and put them (with checksums) into the upgrade folder on my OpenELEC RPi. One more reboot and I finally had a system that would register the DVB device:

[ 7.797065] LME2510(C): Firmware Status: 6 (44)

[ 13.044104] LME2510(C): FRM Loading dvb-usb-lme2510c-rs2000.fw file

[ 13.044131] LME2510(C): FRM Starting Firmware Download

[ 15.492746] LME2510(C): FRM Firmware Download Completed – Resetting Device

[ 15.493023] usbcore: registered new interface driver LME2510C_DVB-S

[ 16.980020] LME2510(C): Firmware Status: 6 (47)

[ 16.980055] dvb-usb: found a ‘DM04_LME2510C_DVB-S RS2000’ in warm state.

[ 16.981327] DVB: registering new adapter (DM04_LME2510C_DVB-S RS2000)

[ 17.059523] LME2510(C): FE Found M88RS2000

[ 17.059573] DVB: registering adapter 0 frontend 0 (DM04_LME2510C_DVB-S RS2000 RS2000)…

[ 17.059993] LME2510(C): TUN Found RS2000 tuner

[ 17.060059] LME2510(C): INT Interrupt Service Started

[ 17.182799] Registered IR keymap rc-lme2510

[ 17.186119] dvb-usb: DM04_LME2510C_DVB-S RS2000 successfully initialized and connected.

[ 17.186160] LME2510(C): DEV registering device driver
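Rather than eyeballing `dmesg | grep 2510` after every reboot, the log can be checked for the lines that matter. A sketch in Python, assuming the messages are worded as my kernel printed them above; other driver or kernel versions may phrase things differently:

```python
import re

# Matches e.g. "DVB: registering new adapter (DM04_LME2510C_DVB-S RS2000)"
ADAPTER_RE = re.compile(r"DVB: registering new adapter \(([^)]+)\)")


def dvb_adapter_status(dmesg_text: str) -> dict[str, object]:
    """Summarise whether the firmware loaded and which DVB adapters registered."""
    return {
        "firmware_loaded": "Firmware Download Completed" in dmesg_text,
        "adapters": ADAPTER_RE.findall(dmesg_text),
        "initialised": "successfully initialized and connected" in dmesg_text,
    }
```

Feed it the output of `dmesg` (for example `subprocess.run(["dmesg"], capture_output=True, text=True).stdout`). With my first build only the "Starting Firmware Download" lines appeared, so `firmware_loaded` would have stayed False and `adapters` would have been empty.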

Final furlong

The last bit of config was in TV HeadEnd. At last I could see the DVB-S receiver in the Configuration->TV Adaptors drop down menu. Next I manually added the mux I wanted (thank you again KingOfSat), and after some trial, error and a few restarts I had services found and channels mapped.

When I browsed to tv -> BSkyB and pressed play on Pop Girl I got a frozen screen, but a little more playing around resulted in a watchable ‘Sabrina the Teenage Witch’ (even if it was squashed up from an aspect ratio perspective). Sadly it wasn’t too long before the system lost lock, and needed a cold boot and a bit more playing around to get working again.

ToDo

I’m not on the latest version of TV Headend, so an upgrade might cure the aspect ratio issue and may even help with stability.

Conclusion

I was able to get satellite TV playing on my Raspberry Pi. The (un)reliability means that I’d qualify this as a science project rather than a dependable piece of consumer electronics. Maybe that will improve over time, or maybe I just spent almost £16 and a day of my time learning the hard way about DVB-S on Linux.

Notes

[1] Enormous thanks are due to Malcolm Priestley, the creator of the LME2510 driver that’s in the Linux kernel. He has also answered a number of forum posts that got me pointed in the right direction.

Filed under: howto, media, Raspberry Pi, technology

Tags: 22f0, 2510, 28.2E, 3344, Astra, dm04, driver, dvb, DVB-S, Eutelsat, firmware, LFE2510, M88RS2000, openelec, Pop Girl, Raspberry Pi, Raspi, RPi, satellite, tv, TVHeadEnd, XBMC

There have been some important changes to OpenELEC recently, which are covered well on their blog. Only a couple of months ago OpenELEC 2.0 was released, and that version didn’t have Raspberry Pi support. Now OpenELEC 3.0 is in beta (see the beta 1 and beta 2 announcements), and the good news for Raspberry Pi users is that the Pi is now supported in this mainstream release.

Downloads

Raspberry Pi builds can be downloaded from openelec.tv/get-openelec, and I’m now running OpenELEC 3.0 Beta 2 build 2.95.2 on my own media player.

Image files

The easiest way to get started with OpenELEC on the Raspberry Pi is to download an SD card image file and burn it onto a card. That’s how I got started, and to help out the community I’ve been creating and hosting development builds and corresponding image files for some time.
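Burning the image amounts to a raw byte-for-byte copy onto the card, followed by a read-back check. A minimal Python sketch, assuming a Unix-like machine, root access, and that you have identified the card’s device node yourself (something like `/dev/sdX` is a placeholder; writing to the wrong device will destroy its contents). Tools such as `dd` or Win32 Disk Imager do the same job; the function names here are hypothetical.

```python
import hashlib
import os
from pathlib import Path

CHUNK = 4 * 1024 * 1024  # 4 MiB reads/writes keep memory use flat for large images


def burn_image(image: Path, device: Path) -> None:
    """Copy the image byte-for-byte to the start of the device and flush it."""
    with image.open("rb") as src, device.open("wb") as dst:
        for chunk in iter(lambda: src.read(CHUNK), b""):
            dst.write(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # ask the kernel to push the data out to the device


def md5_of_prefix(path: Path, size: int) -> str:
    """MD5 of the first `size` bytes (a card is usually larger than the image)."""
    digest = hashlib.md5()
    remaining = size
    with path.open("rb") as f:
        while remaining > 0:
            chunk = f.read(min(CHUNK, remaining))
            if not chunk:
                break
            digest.update(chunk)
            remaining -= len(chunk)
    return digest.hexdigest()


def verify_image(image: Path, device: Path) -> bool:
    """True if the device starts with exactly the bytes of the image."""
    size = image.stat().st_size
    return md5_of_prefix(image, size) == md5_of_prefix(device, size)
```

Reading back straight after writing may be served from the page cache, so a stricter check would remove and reinsert the card before calling `verify_image`.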

Since the entire purpose of image files is to help people get started, I’m planning to stop doing image files for development builds once OpenELEC 3.0 goes stable. Until then, the PiChimney.com resources site will have image files for both dev releases and the official (beta) releases. My rationale is that people getting started should probably be using a stable build, and anybody with the wherewithal to tinker with dev builds can probably handle upgrading from a stable build (or even making their own SD card or image file).

Build server blues

For some time I was able to host everything on a single server at BigV, but when their free beta came to an end I needed to find a new home (otherwise my bandwidth bill was going to be a bit on the large side). I moved things to a virtual private server at BuyVM, as they include decent amounts of bandwidth in their packages. Unfortunately I bought an OpenVZ VPS without realising that block device loopback (an essential part of the SD card image making process) is disabled for security reasons. I’ve been making up the shortfall by using a KVM based VPS for the imaging process, but this has introduced complexity and fragility to the overall process (with interlocking scripts running across remote machines).
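On a VPS it is worth checking for loop device support before relying on it. A rough Python heuristic, assuming a Linux guest: it only looks for the device nodes (`/dev/loop-control` on newer kernels, `/dev/loop0` and friends on older ones), so a container that exposes the nodes but blocks their use would still pass; actually attempting `losetup -f` is the definitive test. The function names are hypothetical.

```python
import stat
from pathlib import Path


def _is_device(path: Path, check) -> bool:
    """Apply a stat.S_IS* predicate to path, treating missing/unreadable as False."""
    try:
        return check(path.stat().st_mode)
    except OSError:
        return False


def loop_nodes_present(dev_dir: Path = Path("/dev")) -> bool:
    """Heuristic check for loop device nodes (not proof that they can be used)."""
    if _is_device(dev_dir / "loop-control", stat.S_ISCHR):
        return True
    return any(_is_device(p, stat.S_ISBLK) for p in dev_dir.glob("loop[0-9]*"))
```

A False result is a strong hint that image building will have to happen elsewhere, as it did here with the KVM box.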

Summary

I’ll continue to host image files for dev and beta builds until OpenELEC 3.0 goes stable, and once it does go stable I’ll host images for the stable build and continue to run the automated build server for dev releases (but there will then be no more image files for dev).
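The hosting policy boils down to one rule per build type. As a hypothetical encoding of it (the names are illustrative, not taken from my actual build scripts):

```python
from enum import Enum


class Build(Enum):
    DEV = "dev"
    BETA = "beta"
    STABLE = "stable"


def publish_image(build: Build, stable_released: bool) -> bool:
    """Should an SD card image be published for this build?"""
    if not stable_released:
        # Until OpenELEC 3.0 goes stable: images for dev and beta builds.
        return build in (Build.DEV, Build.BETA)
    # Afterwards: images only for the stable build; dev builds are still built, just not imaged.
    return build is Build.STABLE
```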

Filed under: Raspberry Pi

Tags: beta, build, image, openelec, Raspberry Pi, Raspi, release, RPi, SD card, stable, XBMC

Geeks and Guinea Pigs

Anybody who’s talked to me in recent months might be surprised to hear that I recently splashed out for a copy of Windows 8, as I’ve not been a great fan of it – particularly the new Metro interface[1]. The £25 upgrade from the release preview I was running seemed like a bargain though, particularly as the Microserver I’m using it on didn’t come with any OS.

TL;DR version

Microsoft were supposed to be moving away from their pattern of a feature release followed by a fix release with Windows 8, but the Metro desktop throws a spanner in the works. If they keep Metro then I can’t see Windows 8 being deployed by many enterprises – it will be yet another ‘geeks and guinea pigs’ release, maybe not even that. If on the other hand Microsoft can backtrack a little and allow people (consumer and enterprise users) to use the familiar desktop, then it’s a much more incremental upgrade from Windows 7, and will be easier to adopt, and thus more popular (and successful). It’s possible that Steven Sinofsky’s departure will allow Microsoft to do this. Whatever happens though, it looks like the Windows cash cow is a lot less healthy – MS simply aren’t extracting as much money for their product any more.

Background, and the original promise

Intel has its ‘tick-tock’ roadmap, where it upgrades the features of its CPUs and then shrinks the fabrication process to make the CPUs smaller, cheaper to make and more power efficient. Microsoft has for many years followed a similar pattern, with a feature release every other time. The difference is that the releases between feature releases can’t be shrinks, as there’s no physical process; they are instead fixes, as there have usually been issues with the feature releases that have stood in the way of mass adoption:

| Feature release | Fix release |
|---|---|
| NT3.x | NT4 |
| 2000 | XP |
| Vista | 7 |
| 8 | ? |

The geeks and guinea pigs title for this post refers to the users that get the feature releases – people in IT who like trying out cutting edge stuff, and maybe a pilot group in ‘the business’.

I first heard about Windows 8 when I was part of a Customer Advisory Council (and Windows 7 wasn’t even out of the door). We were told that, having fixed the issues in Vista with Windows 7, there would be no more major changes, just incremental updates. No more tick-tock, no more feature then fix, just a nice gradual roll out of improved functionality.

And then some genius decided to throw a spanner into the works, and have a consistent UI metaphor across smartphone, tablet, games console and desktop – Metro – the UI originally featured on the Zune. Once again we have a release that’s defined by a new feature – a feature that doesn’t seem to be well received outside of Redmond.

Why Metro is a disaster on the desktop

The Metro interface works great on smaller devices where the screen is used for one application at a time, and it’s clearly designed for touch screens. On the desktop though it doesn’t fit well with the keyboard and mouse. The whole point of the windows in Windows was to be able to have multiple applications open on a larger screen (or screens).

In over a year of using it myself I’ve always gone straight to the old desktop, and pinned all of the apps I use frequently so that I don’t miss the start menu. On the consumer and release previews I’ve found myself lost pretty much every time I’ve had to use the new interface, though it looks like the final release has at least sorted out the Control Panel (by going back to how it was).

Metro is right up there with the Office ribbon and Mr Paperclip in the competition for worst user experience, and it’s no surprise that the most popular app for Windows 8 is Start8 – an app to bring back the start menu.

The Enterprise angle

The general aim of Enterprise IT is to keep things going as cheaply as possible, and that means change is bad. Many organisations are still using Windows XP, and are only now upgrading to Windows 7 (as Microsoft has a gun to their head with support ending for XP). There is hence almost zero appetite for any further change to the environment (particularly as the move to Windows 7 has involved costly hardware refreshes and application compatibility testing).

If Windows 8 had been the incremental update that was promised (more like Windows 7.1 perhaps) then it would have been relatively simple for organisations to move straight to it. Things might be different if MS had provided an option to avoid Metro in the Enterprise Edition, but as things stand Windows 8 is definitely one for the geeks and guinea pigs.

A word on editions

Windows 7 came with a bunch of different editions – Starter, Home Basic, Home Premium, Professional, Enterprise and Ultimate. I quite liked the Ultimate edition[2], but MS made it too expensive and too hard to get, so I expect that approximately nobody who didn’t work for MS or have an MSDN subscription ever saw it – even the most deep-pocketed PC fan would only get Pro from their OEM.

Windows 8 has far fewer editions – vanilla, Pro and Enterprise – so for the consumer the choice is pretty simple. Pricing makes it even simpler. With the Pro upgrade available for £25/$40, and no option to upgrade to basic Windows 8, it seems that pretty much everybody who buys Windows 8 will buy Pro.

The cash cow stops milking

The £25/$40 upgrade pricing to Pro is supposedly time limited, and it seems to have had the desired effect in driving early adoption with 40m licenses sold so far, but there are a couple of important things going on here:

- The gap between ‘upgrade’ and ‘full’ has disappeared, as MS has allowed upgrades from the preview releases (that it hadn’t charged for).

- The price expectation for a Windows license has been set, and set low.

Even if Windows 8 doesn’t damage the PC market (and I think it will[3]) then MS is going to make less money per unit than it was before.

Conclusion

Windows 8 wasn’t supposed to be a geeks and guinea pigs release, but that’s what it is. MS are going to struggle in the consumer space because of Metro, and it will likely stop them getting anywhere in the Enterprise. Meanwhile the price point they can charge has moved against them.

I did put some money in Microsoft’s pocket for Windows 8, but only so that I could continue to have a working license for a particular machine. I have no plans to upgrade any of my Windows 7 machines – even at £25 – it simply isn’t worth the trouble, never mind the money.

It’s not too late for MS. They could easily roll the features of Start8 into a patch on Windows Update and give users (particularly corporate ones) what they want – a nice incremental upgrade rather than a feature release. It’s too soon to call that Windows 9.

Notes

[1] Which isn’t even called Metro any more due to a legal dispute, though everybody seems to still call it Metro anyway.

[2] I was able to get this via an MSDN subscription.

[3] The data I’m waiting for is how many Windows 8 PCs and laptops bought over the Black Friday weekend go back to the shop because people don’t like it. I’m told that many PC purchases happen simply because existing PCs get into poor shape (often due to malware) and it’s easier to buy a new one than to sort out the old one. MS have unfortunately moved the pivot point in a way that’s not in their favour – the pain of getting on with Metro will now be weighed against the pain of sorting out an old PC.

Filed under: technology

Tags: editions, upgrade, windows 7, Windows 8