If you just want to download images rather than make them then I’d suggest downloading an image of the latest official build. For the adventurous I’m still doing frequent dev builds and associated images[1], but these may be unstable. Read on if you’re interested in how this stuff is done…

The Raspberry Pi build of OpenELEC now contains a handy script to partition and write to an SD card. The script gets included if you make a release:

PROJECT=RPi ARCH=arm make release

This will create a bzip2 archive, which can be extracted thus[2]:

mkdir -p ~/OpenELEC.tv/releases

cd ~/OpenELEC.tv/releases

tar -xvf ../target/OpenELEC-RPi.arm-devel-date-release.tar.bz2

cd OpenELEC-RPi.arm-devel-date-release

sudo dd if=/dev/zero of=/dev/sdb bs=1M

sudo ./create_sdcard /dev/sdb

The script assumes that an SD card is mounted as /dev/sdb, but there’s a quicker and easier way to do things if you want an image. If you’re using VirtualBox (or some other virtualisation system) then simply add a second hard disk. Make it small (e.g. 900MB) so that the image will fit onto an SD card later on[3].
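Since the dd line above wipes whatever is at /dev/sdb, it may be worth checking that the device isn't in use first. The following is a minimal sketch only: `device_in_use` is a hypothetical helper (not part of OpenELEC), and it assumes a Linux-style mounts table such as /proc/mounts.

```shell
#!/bin/sh
# Sketch: check whether a device (or one of its partitions) is mounted.
# device_in_use is a hypothetical helper, not part of OpenELEC.

# device_in_use DEVICE [MOUNTS_FILE]
# Succeeds if the first column of MOUNTS_FILE (default /proc/mounts)
# contains an entry starting with DEVICE, e.g. /dev/sdb or /dev/sdb1.
device_in_use() {
    dev=$1
    mounts=${2:-/proc/mounts}
    awk -v d="$dev" '
        index($1, d) == 1 { found = 1 }
        END { exit !found }
    ' "$mounts"
}

# Example use before wiping (left commented out so nothing is wiped by accident):
# if device_in_use /dev/sdb; then
#     echo "/dev/sdb is mounted, not wiping" >&2
# else
#     sudo dd if=/dev/zero of=/dev/sdb bs=1M
# fi
```

The check is a prefix match, so /dev/sdb also catches /dev/sdb1; it says nothing about whether the device is the one you intended, only whether it is currently mounted.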

Once the (fake) SD card has been created then an image file can be made:

sudo dd if=/dev/sdb of=./release.img

This will create a file the size of the (fake) card, so use gzip to compress it:

gzip release.img
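The reason for zeroing the disk before writing to it is that runs of zeros compress to almost nothing. A small sketch that illustrates this on a scratch file rather than a real disk; the helper names `blank_image` and `file_size` are illustrative, and `bs=1M` assumes GNU (or busybox) dd:

```shell
#!/bin/sh
# Sketch: show that a zero-filled image compresses to very little.
# blank_image and file_size are illustrative helper names.

# blank_image FILE SIZE_MB: create a zero-filled file of SIZE_MB megabytes.
blank_image() {
    dd if=/dev/zero of="$1" bs=1M count="$2" 2>/dev/null
}

# file_size FILE: print the size of FILE in bytes.
file_size() {
    wc -c < "$1" | tr -d ' '
}

# Demonstration in a temporary directory, cleaned up afterwards.
tmp=$(mktemp -d)
blank_image "$tmp/demo.img" 8
gzip -c "$tmp/demo.img" > "$tmp/demo.img.gz"
echo "raw: $(file_size "$tmp/demo.img") bytes"
echo "gzipped: $(file_size "$tmp/demo.img.gz") bytes"
rm -rf "$tmp"
```

A real release image will compress less well than this, since only the unused space is zeros, but the same principle applies.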

Updates

The OpenELEC team accepted a change that I made to the create_sdcard script so that it can now be used with loop devices. This allows a simple file to be used to directly create an image:

sudo dd if=/dev/zero of=./release.img bs=1M count=910

sudo ./create_sdcard /dev/loop0 ./release.img

Notes

[1] I had been using a public folder on Box.net, but it seems that these files are too popular, and my monthly bandwidth allowance was blown in a couple of days.

[2] Assuming that OpenELEC was cloned into your home directory. Where I use date-release it will look something like 20120603004827-r11206 on a real file. I’ve included a line here to wipe the target disk so that the resulting image can be compressed properly.

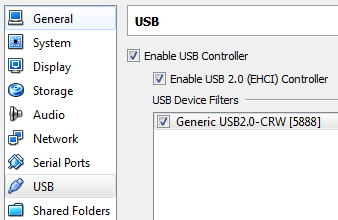

[3] Once created the image file can be written to SD using the same means as for other Raspberry Pi images (e.g. Win32DiskImager if you’re using Windows). An SD card can be mounted directly within VirtualBox by using the USB settings. In my case it appears like this:

If a real SD card is used alongside of a fake one then it will likely appear as /dev/sdc. Copying the image over is a simple case of doing:

sudo dd if=/dev/sdb of=/dev/sdc bs=1M
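After a copy like this it can be reassuring to check that the card really holds what was written. A minimal sketch, assuming an image file (such as release.img) is being compared against the card, and that GNU `cmp` with its `-n` byte limit is available; `verify_copy` is a hypothetical helper name:

```shell
#!/bin/sh
# Sketch: compare an image file with the start of the device it was written to.
# verify_copy is a hypothetical helper; cmp -n limits the comparison to the
# size of the image, because the card is usually larger than the image.

# verify_copy IMAGE TARGET
verify_copy() {
    size=$(wc -c < "$1" | tr -d ' ')
    cmp -n "$size" "$1" "$2"
}

# Example (run from a root shell so the raw device can be read):
# verify_copy release.img /dev/sdc && echo "copy verified"
```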

Filed under: howto, Raspberry Pi | 79 Comments

Tags: image, openelec, Raspberry Pi, Raspi, RPi, SD, VirtualBox, XBMC

I spent time figuring this out due to needing SD cards for my Raspberry Pi, but the instructions apply to pretty much anything on SD.

DD on Windows

Windows sadly lacks the DD utility that’s ubiquitous on Unix/Linux systems. Luckily there is a dd for Windows utility. Get the latest version here (release at time of writing is 0.63beta).

Which disk

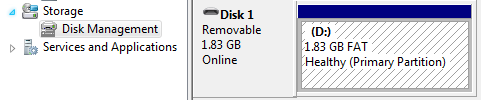

Before using DD it’s important to figure out which disk number is allocated to the SD card. This can be seen in Computer Management tool (click on the Start button then Right Click on Computer and select Manage). Go to Storage -> Disk Management:

Here the SD card is Disk 1.

Making the image

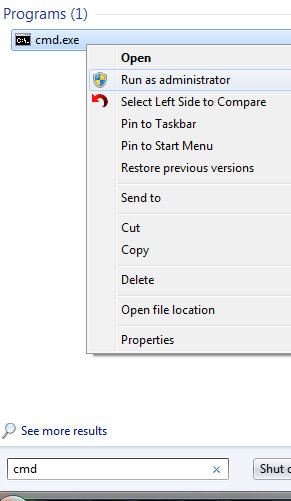

First start a Windows command line as Administrator (hit the start button, type cmd then right click on the cmd.exe that appears and select Run as Administrator). Next change directory to wherever you unzipped the DD tool.

To copy the SD card to an image file (in this case c:\temp\myimage.img) use the following command line:

dd if=\\?\Device\Harddisk1\Partition0 of=c:\temp\myimage.img bs=1M

In this case we’re using DD with 3 simple arguments:

- Input file (if) is the SD card device

- Output file (of) is the image file we’re creating

- Block size (bs) is 1 megabyte

Writing the image back to a clean SD card

The first step is to ensure that the SD is completely clean. Most cards come preformatted for use with Windows machines, cameras etc. The diskpart tool can be used to remove that. Go back to your cmd running as administrator (and be very careful if you have multiple disks that you use the right number):

diskpart

select disk 1

select partition 1

delete partition

exit

You’re now ready to copy the image back to the SD (simply by swapping the earlier input file and output file arguments):

dd of=\\?\Device\Harddisk1\Partition0 if=c:\temp\myimage.img bs=1M

Filed under: howto, Raspberry Pi | 9 Comments

Tags: DD, howto, image, Raspberry Pi, Raspi, RPi, SD

KVM mort

This isn’t a post about the KVM Hypervisor, which I believe is alive, well and rather good.

This is a post about keyboard, video and mouse switches.

At both home and work I have multiple machines, and monitors with multiple inputs, so all I need is a KM switch to share my keyboard and mouse rather than a KVM switch. Video switching was fine back in the days of VGA to analogue monitors, but these days it’s anachronistic (I want a digital input and though digital switches are available they’re expensive) and unnecessary (as any decent monitor has multiple [digital] inputs).

A better monitor

I could say that I want just a KM switch[1], but that might be like asking for faster rewind for video tape in the age of DVD. What I really want is an integrated KM switch. Monitors already often have USB hubs that could reasonably be used for keyboard and mouse – it would be a small step (and very little silicon) to make that switchable to multiple USB outs[2].

Next up I want a means to switch between inputs that doesn’t involve pressing too many buttons. I don’t quite get why I have to go through a navigation exercise to switch inputs but it’s the same problem on my monitor at home, my monitor at work and even the TV I have in my bedroom. Rather than a single button to cycle between (live) inputs I have to press something like input (or source) then go up/down a list then select an input – far too many button pushes. Of course if the monitor had a KM switch in then the monitor maker could take a hint from the KVM people and have hot keys (on the keyboard) to switch between inputs[3].

Conclusion

As I’m not in the market for a new monitor a cheap and functional KM switch would be ideal, but this stuff really should be built into the monitor to improve upon USB hub functionality already there[4].

[1] Yes, I know that I can use a KVM switch and just not use the video bit – this is in fact what I have at my work desk. The trouble is that some of them try to be too clever by doing things like not switching to an input with no video signal present. Anyway, the engineer in me weeps at the wasted components.

[2] There are probably cases where it would make sense to switch other USB peripherals that might normally be connected to a monitor based hub, and I expect there may be others when this wouldn’t be desirable. I expect it would be best to keep things limited to just keyboard and mouse.

[3] Double tapping ‘scroll lock’ to switch between my work laptop and microserver is a lot quicker than the Source->DVI/Displayport->Source dance I have to do to change inputs on my monitor.

[4] I am left wondering what monitor makers expect us to do with those multiple inputs, particularly when there are many of the same type (e.g. my monitor at home has 2 x DL-DVI so the multitude of inputs isn’t just to cater for a variety of different types of source – you’re expected to have many computers attached to the same screen)? Do they think we just have loads of keyboards on the same desk – perhaps stacked on stands like an 80s synth band?

Filed under: could_do_better, technology | 2 Comments

Tags: digital, displayport, DVI, HDMI, keyboard, KVM, monitor, mouse, switch, USB, VGA, video

Raspberry Pi on iPad

My second Raspberry Pi came at the end of last week[1], so now I have one to tinker with in addition to the first that I’m using as a media player.

It turns out that it’s not just SD cards that the raspi is fussy about; I had a real struggle getting either of my spare monitors to work with it. In the end I somehow found the combination of HDMI-DVI adaptor and cable that had worked last week and things sprang to life.

SSH

Getting a monitor to work wouldn’t be necessary if SSH was enabled by default, which sadly it isn’t.

You can start SSH from the command line with:

sudo /etc/init.d/ssh start

Alternatively you can set it to autostart by renaming one of the files in the boot partition:

sudo mv /boot/boot_enable_ssh.rc /boot/boot.rc

Once SSH is sorted out you can use your favourite client (mine is PuTTY) to connect to the raspi. I also configured key based login, but that’s a different howto[2].

VNC

The purist in me was going to just use an X server (probably Cygwin) to connect to the raspi, but VNC is easier to get going.

To install the VNC server on the raspi:

sudo apt-get install tightvncserver

Once that’s installed start it with:

vncserver :1 -geometry 1024x768 -depth 16 -pixelformat rgb565

I’ve set the resolution here to be the same as the iPad.

At this stage it might make sense to test things with a VNC client from a Windows (or Mac or Linux) box. I used the client from TightVNC.

The iPad bit

There are probably a bunch of VNC clients for the iPad, but I regularly use iSSH for a variety of things, and although at £6.99 it’s one of the most expensive apps I have on my iPad I generally think it’s worth it.

iSSH can connect to VNC servers directly or through an SSH tunnel:

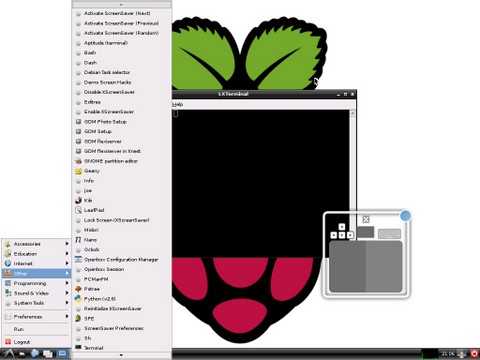

Hit save and then hit the raspi entry to connect, and I get something like this:

At this point things are probably easier if you have a bluetooth keyboard (and mouse).
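For testing from a desktop client, or for setting up the SSH tunnel by hand, it helps to know that VNC display :N conventionally listens on TCP port 5900 + N, so the `:1` display started above is on port 5901. A small sketch that works out the port and prints a matching `ssh -L` command; the user name `pi` and host name `raspberrypi.local` are assumptions for illustration, and `vnc_port` and `tunnel_command` are hypothetical helper names:

```shell
#!/bin/sh
# Sketch: work out the TCP port for a VNC display and print an ssh tunnel command.
# The user and host names used in the example are assumptions.

# vnc_port DISPLAY_NUMBER: print the TCP port for that display (5900 + N).
vnc_port() {
    echo $((5900 + $1))
}

# tunnel_command USER HOST DISPLAY_NUMBER: print an ssh command that forwards
# the local VNC port to the same port on the remote machine.
tunnel_command() {
    port=$(vnc_port "$3")
    echo "ssh -L $port:localhost:$port $1@$2"
}

tunnel_command pi raspberrypi.local 1
# prints: ssh -L 5901:localhost:5901 pi@raspberrypi.local
```

With the tunnel up, a VNC client pointed at localhost:5901 reaches the raspi over SSH rather than talking to the VNC port directly.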

Conclusion

With two protocols (SSH and VNC) configured it’s possible to do useful stuff with the Raspberry Pi remotely, and the iPad with iSSH makes a fine piece of glass to use it through.

Acknowledgement

With thanks to the My Raspberry Pi Experience blog for the VNC howto I adapted here. If you want more of a detailed walk through with loads of screen shots then take a look there.

[1] I ordered from both suppliers in the first couple of days as it was utterly unclear which was going to get their act together.

[2] If you do use PuTTY then it may make more sense to generate keys using PuTTYgen rather than ssh-keygen (another howto).

Filed under: howto, Raspberry Pi | 8 Comments

Tags: howto, iPad, iSSH, Putty, Raspberry Pi, Raspi, RPi, SSH, vnd

Bolting in security

This is a long overdue reply to Chris Hoff’s (@Beaker) ‘Building/Bolting Security In/On – A Pox On the Audit Paradox!’, which was his response to my ‘Building security in – the audit paradox’.

Hopefully the ding dong between Chris and me will continue, as it’s making me think harder, and hence it’s sharpening up my view on this stuff. If you want to see some live action then I’ll be on a panel with Chris at ODCA Forecast on 12 Jun in NYC[1].

I suggested in my original post that platform as a service (PaaS) might give us a means to achieve effective, and auditable, security controls around an application. When I wrote that I assumed that the control framework would be an inherent part of the platform, but it doesn’t have to be. Perhaps we can have the best of both worlds by bolting security in.

Bolting

By this I mean optional – a control that doesn’t have to be there. Of course we can expect a PaaS to have a rich selection of basic security controls, but it would be silly to expect there to be something to suit every need. It would however be great if there was a marketplace for additional controls that can be selected and implemented as needed.

As Chris points out this has already happened in the infrastructure as a service (IaaS) world with things like introspection APIs giving rise to third party security tools. Many of these look and smell like the traditional bolt on tools for a traditional (non service delivered) environment, but how they work under the hood can be much more efficient.

In

By this I mean in the code/execution path – a control that becomes an integral part of an application rather than an adjunct. Bolt on solutions have traditionally been implemented in the network, and often have some work on their hands just to figure out the context of a fragment of data in a packet. Built in solutions are embedded in the code and data (and thus don’t struggle for context) but can be hard to isolate and audit. Bolt in solutions perhaps give us the best of both worlds – isolation from an auditability perspective and integration with the run time.

The old problems don’t go away

When asked ‘why do we still use firewalls’ a former colleague of mine answered ‘to keep the lumps out’[2]. It’s all very well having sophisticated application security controls, but it’s still necessary to deal with more traditional attacks. I’d skirted over this in my original post, but Chris did a good job of highlighting some of the mechanisms out there (which I’ll repeat here to save bouncing between articles):

- Introspection APIs – I already referred to these above. They’ve primarily been used as a way to do anti virus (which is often a necessary evil for checkbox compliance/audit reasons), but there’s lots of other cool stuff that can be achieved when looking into the runtime state of a machine.

- Security as a service – if a security based service (like content filtering or load balancing) isn’t implemented in one part of the cloud then get it from another.

- Auditing frameworks – these potentially fit three needs: checkbox style evaluation of controls before choosing a service, runtime monitoring of a service and its controls, and an integration point for newly introduced controls to report on their status (after all a control that’s not adequately monitored might as well not exist).

- Virtual network overlays and virtual appliances – the former provides a substrate for network based controls and the latter a variety of implementations.

- Software defined networking – because if reconfiguration involves people touching hardware then it’s probably not ‘cloud’.

Conclusion

Moving apps to the cloud doesn’t eliminate the need for ‘bolt on’ security controls, and brings some new options for how that’s done. ‘Building in’ security remains hard to do and even harder to prove effective to auditors (hence the ‘audit paradox’). ‘Bolting in’ security via (optional modules in) a PaaS might just let us have our cake and eat it.

[1] I have some free tickets for this event available to those who make interesting comments.

[2] I’ve got an odd feeling that Chris Hoff was in the room when this was said.

Filed under: cloud, security | Leave a Comment

Tags: @beaker, audit, bolt, bolt on, build in, Chris Hoff, cloud, control, Forecast, iaas, in, ODCA, paas, security

I’ve continued tinkering with my OpenELEC media player, and there’s too much stuff to do as just updates or comments to the original post.

Somebody gave me a nice laser cut Raspberry Pi logo at the last OSHUG meeting

Build

I started out with a canned build[1], but discussion on the OpenELEC thread on the Raspberry Pi Forums suggested that I was missing out on some features and fixes. I therefore did another build (in the OpenELEC directory):

git pull

PROJECT=RPi ARCH=arm make

I’m presently running r10979, which seems to be behaving OK. I’ve uploaded some later builds to github, but not had the time to test them out myself. To get some of the later builds to compile properly I needed to delete the builds directory:

rm -rf build.OpenELEC-RPi.arm-devel/

To use these binaries simply copy the OpenELEC-RPi.arm-devel-datestamp-release.kernel file over kernel.img and OpenELEC-RPi.arm-devel-datestamp-release.system over system on a pre built SD card. As these files sit on the FAT partition this can easily be done on a Windows machine (even though it can’t see the ext4 Storage partition). The files can’t be copied in place on the Raspberry Pi because of locks.
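On a Linux box with the card's FAT partition mounted, the copy could be scripted. This is a sketch under assumptions: that the .kernel and .system files sit together in one directory (for example the build's target directory), that picking the lexically last datestamp picks the newest build, and that the mount point shown in the example is wherever your system happens to mount the card. `update_flash` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: copy the newest kernel/system pair onto a mounted FAT partition.
# update_flash is a hypothetical helper; the directory layout is an assumption.

# update_flash TARGET_DIR FLASH_DIR
update_flash() {
    target=$1
    flash=$2
    # Datestamps sort lexically, so the last entry is the most recent build.
    kernel=$(ls "$target"/OpenELEC-RPi.arm-devel-*.kernel 2>/dev/null | sort | tail -n 1)
    system=$(ls "$target"/OpenELEC-RPi.arm-devel-*.system 2>/dev/null | sort | tail -n 1)
    if [ -z "$kernel" ] || [ -z "$system" ]; then
        echo "no kernel/system pair found in $target" >&2
        return 1
    fi
    cp "$kernel" "$flash/kernel.img" && cp "$system" "$flash/system"
}

# Example (paths are illustrative):
# update_flash ~/OpenELEC.tv/target /media/sdcard
```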

Config.txt

This is the file that’s used to set up the Raspberry Pi as it boots. The canned build that I’m using didn’t have one, so I created my own:

mount /flash -o remount,rw

touch /flash/config.txt

I’ve set mine to start at 720p 50Hz:

echo 'hdmi_mode=19' >> /flash/config.txt

There are loads of other options that can be explored such as overclocking the CPU.
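One thing to watch with `echo ... >>` is that running it twice leaves two entries for the same option in the file. A minimal sketch of a helper that replaces any existing entry before appending the new one; `set_config` is a hypothetical name, and it assumes simple `key=value` lines:

```shell
#!/bin/sh
# Sketch: set a key=value option in a config file without duplicating it.
# set_config is a hypothetical helper; it assumes plain key=value lines.

# set_config KEY VALUE FILE
set_config() {
    key=$1
    value=$2
    file=$3
    touch "$file"
    # Keep every line except existing entries for this key
    # (grep exits non-zero when nothing is left, which is fine here).
    grep -v "^$key=" "$file" > "$file.tmp" || true
    echo "$key=$value" >> "$file.tmp"
    mv "$file.tmp" "$file"
}

# Example (after remounting /flash read-write as above):
# set_config hdmi_mode 19 /flash/config.txt
```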

Remotes

The cheap MCE clone that I bought still isn’t working entirely to my satisfaction, but I’m less bothered about that as there are other good options. I already raved a little about XBMC Commander for the iPad in an update to my original post (it also works on the iPhone, and presumably recent iPod Touch). I’ve also tried out the Official XBMC Remote for Android, which is a little less shiny but pretty much as functional; best of all it’s free.

NFS

When I first set up CIFS to my Synology NAS I meant to try out NFS as well. At the time I didn’t as things weren’t working properly on my NAS, which turned out to be down to a full root partition stopping any writes to config changes. Having sorted that out I’m now using .config/autostart.sh to mount using NFS thus:

#! /bin/sh
(sleep 30; \
mount -t nfs nas_ip:/volume1/video /storage/videos -r; \
mount -t nfs nas_ip:/volume1/music /storage/music -r; \
mount -t nfs nas_ip:/volume1/photo /storage/pictures -r \
) &
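The fixed `sleep 30` is there to give the network time to come up. An alternative, sketched here rather than tested on OpenELEC, is to poll until the NAS answers and only then mount. `wait_for` is a hypothetical helper, and the example assumes the `ping` on the box accepts `-c`:

```shell
#!/bin/sh
# Sketch: retry a command until it succeeds or the attempts run out.
# wait_for is a hypothetical helper, not part of OpenELEC.

# wait_for TRIES DELAY_SECONDS COMMAND [ARGS...]
wait_for() {
    tries=$1
    delay=$2
    shift 2
    while [ "$tries" -gt 0 ]; do
        "$@" && return 0
        tries=$((tries - 1))
        # Only pause if another attempt is coming.
        [ "$tries" -gt 0 ] && sleep "$delay"
    done
    return 1
}

# Example for autostart.sh (nas_ip is a placeholder, as in the script above):
# (wait_for 30 1 ping -c 1 nas_ip >/dev/null 2>&1 && \
#  mount -t nfs nas_ip:/volume1/video /storage/videos -r) &
```

This gives up after the same 30 seconds in the worst case, but mounts as soon as the NAS is reachable.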

Conclusion

That’s it for now. The dev build I’m on seems stable enough and functional enough for everyday use, so I’ll probably stick with that rather than annoying the kids with constant interruptions to their viewing. Hopefully I won’t have to wait too long for an official stable release.

Notes

[1] The original canned build is now ancient history, so I’m now linking to the latest official_images.

Updates

Update 1 (4 Jun 2012) – r11211 release bundle and image (900MB when unzipped so should fit onto 1GB and larger SD cards).

Update 2 (4 Jun 2012) I’ve put r11211 and will put subsequent bundles and images that I make into this Box.net folder.

Update 3 (5 Jun 2012) my Box.net bandwidth allowance went pretty quickly, so I’ve now put up the latest release bundles and image files on a VPS.

Update 4 (26 Jan 2013) release candidates should be used rather than dev builds in most cases, so links modified to point to those.

Filed under: howto, media, Raspberry Pi | 30 Comments

Tags: 720p, mce, Media Player, mount, nfs, openelec, Raspberry Pi, Raspi, remote, resolution, Synology, XBMC

My old Kiss Dp-600 media player has been getting progressively less reliable, so for a little while I’ve been telling the kids that I’d replace it with a Raspberry Pi. Of course getting hold of one has proven far from simple.

Some time ago the prospect of using XBMC on the Raspi was confirmed, leading me to consider that this spells the end for media player devices (or at least a change in price point). Perhaps I should have done more pre work, but in the end I waited for the device to arrive before getting started. My first search immediately took me to OpenELEC and a post about building for Raspi. I downloaded the sources and after some tool chain related hiccups[1] kicked off the build process on an Ubuntu VM. This turned out to be entirely unnecessary, as I was able to download a binary image[2].

The next step was to copy the image onto an SD card. This was fairly straightforward using the Windows Image Writer, which is the same tool used to write the standard Debian images for Raspi. In my case I couldn’t quite squeeze the image onto a handy 2GB SD card[3], but I had a larger card handy that seems to work fine.

I was now able to boot into XBMC and use the cheap MCE remote I’d bought on eBay a little while ago. After fiddling with some settings I’ve been able to get things so that everything plays (with sound). I’m using some mount commands in .config/autostart.sh[4] to connect to CIFS shares on my NAS for videos, music and photos:

#! /bin/sh
(sleep 30; \
mount -t cifs //nas_ip/video /storage/videos -o username=foo,password=S3cret; \
mount -t cifs //nas_ip/music /storage/music -o username=foo,password=S3cret; \
mount -t cifs //nas_ip/photo /storage/pictures -o username=foo,password=S3cret \
) &

Stuff that I’d still like to change:

- SPDIF – The Raspi doesn’t have SPDIF out via its 3.5mm jack, so I have no way of piping digital audio to my AV receiver (sadly my TV doesn’t have a digital audio output). Maybe I’ll be able to use a cheap USB sound card to fix this.

- Resolution – I’ve got things going pretty well at 720p, but I haven’t found a reliable way to get 1080p output. My TV might be partly to blame here. I bought a 37″ LCD about a year too early, and the best choice at the time was Sharp’s ‘PAL Perfect‘ screen. It has a resolution of 960×540, which makes downscaling of 720p and 1080p very simple.

- Reboots – don’t seem to be reliable at all. I’ve not yet managed to get a clean restart after doing ‘reboot now’ from the command line. Even pulling power seems like a hit and miss affair. I can see this being a problem for the inevitable time that the system fails whilst I’m away for a week travelling[5].

- Remote – when I first tested the MCE remote on a Windows laptop most of the buttons seemed to do sensible/expected stuff. On OpenELEC/XBMC the key buttons (arrows, select and back) seem to work – along with the mouse, but many of the other buttons don’t seem to work at all.

Conclusion

Updates

Update 2 (14 May 2012) – I got XBMC Commander for my iPad. It’s worth every penny of the £2.49 that I spent on it as it totally transforms the user experience. Using a remote to navigate a large media library is a pain. Using a touch screen lets you zoom around it – recommended.

Update 3 (20 May 2012) – I’ve done a Pt.2 post.

Update 4 (31 May 2012) – binary image link updated to r11170.

Update 5 (3 Jun 2012) – binary image link changed from github to Dropbox.

Update 6 (4 Jun 2012) – Dependencies in [1] updated to add libxml-parser-perl as this has caused the build to fail when I’ve used fresh VPSes.

Update 7 (5 Jun 2012) – binary image link changed to a VPS.

Notes

[1] On first running ‘PROJECT=RPi ARCH=arm make’ I hit some dependency errors:

./scripts/image
./scripts/image: 1: config/path: -dumpmachine: not found
make: *** [system] Error 127

This was fairly easily fixed by following the instructions for compiling from source, which in my case running Ubuntu 10.04 meant invoking:

sudo apt-get install g++ nasm flex bison gawk gperf autoconf \
automake m4 cvs libtool byacc texinfo gettext zlib1g-dev \
libncurses5-dev git-core build-essential xsltproc libexpat1-dev \
libxml-parser-perl
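A quick check before kicking off a long build can save some time. The sketch below lists which of a set of commands are missing from the PATH. `missing_tools` is a hypothetical helper, and the command names in the example are only a rough guess at what the packages above provide (package names and command names don't always match, and libraries such as zlib1g-dev can't be checked this way):

```shell
#!/bin/sh
# Sketch: report which build tools are not on the PATH.
# missing_tools is a hypothetical helper; the tool list is illustrative.

# missing_tools COMMAND...: print each missing command, one per line.
# Returns non-zero if anything is missing.
missing_tools() {
    status=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            echo "$tool"
            status=1
        fi
    done
    return $status
}

# Example:
# missing_tools gcc g++ nasm flex bison gawk gperf autoconf automake m4 git xsltproc
```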

[2] Thank you marshcroft for your original image – much appreciated. Now replaced by a much newer build.

[3] Clearly some 2GB SD cards have a few more blocks than others.

[4] Thanks to this thread for showing the way.

[5] There have been times that I’ve suspected the old DP-600 of subscribing to my TripIt feed – failure seemed to be always timed to the first days of a long business trip.

Filed under: howto, media, Raspberry Pi | 22 Comments

Tags: .config, 1080p, 720p, autostart.sh, cifs, mce, Media Player, mount, NAS, openelec, Raspberry Pi, Raspi, reboot, remote, resolution, restart, spdif, Synology, XBMC

Raspberry Pi Business Card Box

After months of waiting, my Raspberry Pi finally arrived on Friday[1]. Somehow I resisted the temptation to dash straight home and start playing with it, and went along to my daughter’s summer concert at school. This one has been earmarked to replace our decrepit Kiss Dp-600 streaming media player – more on that later. First though it needed a box. Since the form factor is the size of a credit card, and credit cards are the same size as business cards, I reckoned one of the plastic boxes that business cards come with might work. It does:

I could have done a better job with the RCA hole – it’s a bit too high. Hopefully somebody will come up with a nice paper template to do this properly (and I expect a laser cutter could do a much better job than me with a steak knife and a tapered reamer).

I’ve not done anything with the box lid yet, but it’s probably a good idea to keep dust out. I’m guessing that at a max power draw of 3.5W heat dissipation shouldn’t be too much of a worry.

[1] My order number was 2864, so it looks like I just missed the earlier first batch of 2000. If there’s a next time I need to remember to fill out the interest form first before tweeting about it :(

Filed under: Raspberry Pi | 5 Comments

Tags: box, business card, Raspberry Pi, Raspi

An enterprise Ultrabook

I’ve recently had a couple of laptops on loan that have got me thinking about what the perfect enterprise laptop might feature.

Business – Lenovo X220

I was loaned this to try out a super secret new security product. Regular readers here will know that I have a fondness for Lenovo laptops, and this is probably the best one I’ve ever used – an almost perfect balance of performance, size, weight and endurance.

What I like:

- Performance – SSD and 8GB RAM are a potent combination, and I never threw anything at the Core i5 CPU that would cause it to sweat.

- DisplayPort and VGA connectors – so no snags getting hooked up to a variety of displays.

- Great keyboard.

- Wired Gigabit ethernet – making the transfer of some videos to watch on the plane painless.

- Size and weight – 1.5kg.

- Removable SSD – so I could take a snapshot of the machine as it was given to me, and revert back to that later.

What could be even better:

- The screen is OK at 1366 x 768, but I’ve used finer pitch screens before and liked them.

- I didn’t have a chance to test a docking station, so it’s not clear to me whether or not it can drive two displays digitally (like my HP laptop does at work)[1].

- Waking from sleep is a bit hit and miss, and I’ve more than once ended up shutting it down completely rather than waking it up :(

Consumer – Dell XPS13

- Looks great

- Trackpad is surprisingly nice to use (I generally prefer a TrackPoint) – this one is really big, and supports a range of multi touch gestures that ease navigation.

- The chiclet keyboard is also good, and is backlit – making typing in the dark a breeze.

- No fan or exhaust grill – so no heating up the bottle of water on my airline tray table. The quid pro quo here is that the base can get somewhat warm.

- The screen is once again 1366 x 768, and whilst the Gorilla Glass looks great it’s also a little too reflective at times.

- A single mini DisplayPort socket means carrying around a bagful of dongles to get hooked up to external displays, and limits connectivity to one screen. There’s also WiDi[2], but I’ve yet to find something that I can use that with.

- It feels heavy even though it’s 100g lighter than the X220 – clearly density matters.

Enterprise Ultrabook – the best of both worlds?

- Removable drive – so that sensitive corporate data (and precious user config/state) can be easily held onto.

- Some means to drive two external screens and the rest of the stuff on a typical work desk – keyboard, mouse, webcam, headset etc.[3]

- A means to connect to a wired network, as using WiFi with a VPN can be a time consuming and frustrating process. (This should be incorporated into the solution for connectivity for the point above).

- An optional smartcard slot[4].

The Apple Alternative

Conclusion

[1] It seems that the typical office still hasn’t got with having proper large monitors (by which I mean 27″ or 30″), having instead pairs of 19″, 22″ or 24″ screens.

[2] The first time I heard this talked about I heard ‘Wide Eye’ rather than ‘WiDi’ – somehow this made sense for a system able to wirelessly project images.

[3] Traditionally this has been done with a docking station. I think the truly modern way would be a Thunderbolt connection to a monitor that’s then the hub for everything else, but it’s not reasonable to expect new machines to drive an upgrade of everything else so perhaps some sort of (Thunderbolt) docking strip is the way ahead.

[4] The X220 doesn’t seem to have one of these (though it does have a fingerprint reader, which might be an alternative for some, and it also has an ExpressCard slot that I’m sure could be put to work in this area).

[5] Large enterprises have a habit of smashing operating systems down to their constituent parts and (at great cost) reassembling them. They also generally have enterprise licensing deals with Microsoft. I hence can’t see Boot Camp being that much of an obstacle to adoption.

Filed under: review, technology | 5 Comments

Tags: business, consumer, corporate, displayport, docking, dual screen, enterprise, laptop, network, smartcard, Thunderbolt, ultrabook, Wide Eye, WiDi

For a little while I’ve been experiencing lousy service from my credit card providers, and judging by what I hear from others I’m far from alone on this. The level of false positives from card company fraud detection systems has reached a point where it’s creating a bad customer experience, and it often seems that ‘common sense’ has been thrown out the window.

A personal example

I recently went on a family holiday to the US. Within hours of arriving my card was blocked and I found myself having to call the fraud department.

What should have happened

I booked my airline tickets using the card. It shows clearly on my statement that one of the tickets was in my name, and the destination of the flight. It should therefore have been no surprise when I showed up in Tampa on the appointed day and started spending money[1]. The key point here is that the card company had very specific data about my future movements.

What actually happened

I picked up my hire car in Tampa (card transaction for future fuelling fees etc.) and headed off towards my ultimate destination of Kissimmee. On nearing my destination I needed to fill up with fuel [2], so I stopped at a 7-11 to gas up. I tried to pay at the pump [3], but this failed, so I went into the store to pre authorise a tank full of fuel. My card was declined and I had to use another. When I checked my email shortly afterwards there was a fraud alert, and when I switched on my UK mobile it immediately got a text saying the same [4]. I called the fraud line (and got through straight away, as it was early in the UK morning), and explained that the (attempted) transactions were genuine, and that I would remain in the US for another couple of weeks. The card was unblocked and I continued spending… for a while at least.

What happened next

Two weeks later, another gas station, another transaction that I had to use another card for, another text asking me to call the fraud department. This time it took almost 9 minutes to get through, as it was Easter Saturday and still the middle of the shopping afternoon back at home. I was pretty angry – at the wait, and because it had happened again within the time that I’d specified I’d be using the card in the US. There were apparently three suspicious transactions, with the last one causing my card to be blocked:

- Buying gas at the same 7-11 that had caused the problems last time.

- Some groceries from Super Target [5].

- Another attempt to buy gas (at a place on the road back to Tampa).

What’s going on here?

I necessarily need to speculate here a little, as the card company can’t/won’t explain how its fraud detection algorithms work[6]. It’s a classic case of ‘computer says no’. Likely there are a bunch of heuristics about transaction types that are more likely to be fraudulent[7]. My guess would be that convenience stores rank as pretty high risk, and the problem in my case is that it’s almost impossible to buy gas at anywhere that isn’t also a convenience store. Somewhere else somebody has done a cold analysis of the cost of dealing with false positives (which mainly falls on me the customer) versus the costs of fraud. There is no doubt a lot of analysis going on here.

Doing better

So data and analysis are at the heart of this, but is it the right data leading to the right analysis? I think not. As a customer I think the experience is lousy precisely because things that seem obvious to me are being apparently ignored by the card company:

- Location – if I’m buying airline tickets with my card then the card company knows in advance where I should be. These data points should take precedence over heuristics about ‘normal’ spending locations.[8]

- Inference – if I rent a car for 2 weeks then I’m pretty likely to buy some gas to go in it.

- Explicit overrides – if I tell the company where I’m going to be, and what I’m likely to be spending on then the fraud pattern matching should adjust to suit.

Conclusion

[1] In fact the fraud bells should have started ringing if I started making in person transactions somewhere other than Tampa.

[2] Why the car wasn’t supplied full like I’d paid for is another story.

[3] The pumps always ask for a zip code, which is likely where the problems begin for anybody outside of the US. Perhaps the card companies should allow users to register fake ZIP codes for such purposes (I always input the ZIP for an office where I used to work – which is generally OK for buying MTA cards in New York, and a variety of goods that are delivered online).

[4] Luckily for me it seems that I wasn’t charged some extortionate roaming rate for receiving that text.

[5] Why that transaction was flagged and the other 4-5 times I bought there weren’t is a total mystery.

[6] Presumably in the belief of security by obscurity – if the bad guys don’t know how the system works then they can’t engineer around it.

[7] Possibly some big data type tools have been used behind the scenes here.

[8] For bonus points the companies should provide an easy way for me to notify travel plans for when I’m buying tickets with another card e.g. my TripIt feed has all of this data. There’s a huge opportunity here for companies to become ‘friends’ in social networks that brings utility beyond just better targeting of ads.

Filed under: could_do_better, grumble, travel | 1 Comment

Tags: algorithm, analytics, big data, card, convenience, customer, customer experience, customer service, data, fraud, gas, social, travel