A week ago I ordered a Push N Go Thomas for my nephew from T J Hughes (Amazon only had the Percy version).

The site showed that they had it in stock (unlike some of the other sellers that I’d found on Google Shopping), and I was even willing to bear the somewhat steep P&P to get it in time for Christmas.

I went through the usual checkout process and received an email with an order number. Had I been paying more attention, this might have bothered me:

What happens next?

The details of your order will now be processed and a further email confirming acceptance of your order will be sent to you, usually within 3 working days.

Yesterday – 6 days after placing that order – I got another email:

We regret to inform you that we are temporarily out of stock of your requested item

As we are currently unable to confirm when we are likely to receive a further replenishment of this item(s), we are therefore sadly unable to accept your order at this time.

How can it possibly take an entire working week to figure out that you don’t have something?

If T J Hughes had a functioning stock control system and web site then I’d have never bothered.

Thanks for nothing guys – a total waste of time.

I’m also not impressed with Google here. They’re showing 4 stars based on 44 seller ratings. I took the trouble to put all of the ratings into a spreadsheet and work out my own average, which turned out to be exactly 3. So what’s juicing the ranking up to 4?

Update 22 Dec – I just had a call from the customer services manager at TJ Hughes. He told me that they have managed to find a Push N Go Thomas, and that he’s getting it sent out to me. Given the state of the postal system after all the snow we’ve had neither of us expects delivery before Christmas, but still it’s a nice gesture.

Update 17 Jan – the Push N Go Thomas that was supposed to be sent to me before Christmas never arrived. After waiting for the mail to return to normal following Christmas and all the snow it became clear that there was a problem, so I contacted TJ Hughes again. Another package was sent out last week, and it was just delivered. Since I was never after a freebie I’ve donated £18.50 to Help for Heroes (which when tax is taken into account is roughly what I was expecting to pay for the toy when I ordered it).

Filed under: could_do_better, grumble | 1 Comment

Tags: cancelled, customer service, Froogle, google, online, order, product search, ranking, shopping, T J Hughes

I moved into my house over 8 years ago. The day after that move I bought a Brabantia kitchen bin – a nice big shiny stainless steel one. As I got to know more of the neighbours it soon became something of a running joke that everybody had bought the same bin.

Some years later the hinge on the lid broke. I called up customer services and they sent me a new one (of an obviously better design).

Over time that lid also broke. The catch wouldn’t work reliably, and the plastic by where it fits around the handles got cracked (by people not putting the lid back properly). Eventually I could tolerate it no more, and I looked into getting a new lid (or maybe even an entire new bin).

As luck would have it Brabantia sell their products with a 10 year guarantee. I ordered a new lid online.

Somewhat annoyingly I received an email the next day asking me to send the old lid back, but it came with detailed packing and (free)post instructions – so no major drama. I went about fashioning a temporary lid out of some cardboard that was otherwise on its way to recycling.

A little over a week later I got another email saying that the new lid was on the way. It took some extra days to arrive because of heavy snow, but my bin now looks good as new (and the lid works better than ever).

Brabantia’s product may not be the cheapest, but their stuff is well made, and when that’s backed up by a great guarantee it makes for a happy customer.

Filed under: did_do_better | 1 Comment

Tags: Brabantia, customer service, guarantee, Touch bin

I live in the UK, and I feel like I’ve been waiting for Google Voice for way too long. I also travel frequently to the US, so I could get some use out of the service as it stands and it’s been frustrating that I couldn’t sign up.

Disclaimers

Google only offers its Voice service within the US, and using it elsewhere probably constitutes a violation of their terms of service.[1]

When Google does get around to launching services outside of the US it’s likely that you won’t be able to use them (with a given account) if you’re already using a US number.

Signing up

You will need:

- A proxy in the US

- A US telephone number that you’re able to answer

Like so many geographically limited services Google Voice uses IP geolocation to determine whether you’re allowed to play or not. When I browsed to voice.google.com from the UK I’d see the message shown above. Using EC2 as a proxy to give me a US IP got around that problem, and I was able to start the sign up process.

To complete the sign up you need to register at least one US phone number, and verify it by entering a code. To get a (free) US number I used IPkall. Luckily I already had a VOIP/SIP service that I could point IPkall at. Initially I had some trouble – the number IPkall gave me had already been used by multiple Google Voice subscribers, but after cancelling that number I got another that did work.

There are probably other ways to do this now. Skype has just refined their SkypeToGo service so that it can connect directly to a remote extension via the Skype network (rather than using an IVR menu as before), which means it’s possible to avoid SIP and VOIP entirely and just virtually wire up existing telephones [2].

Refinements

Once I had the basic service working I spent some time integrating Google Voice with my SIP Sorcery account. There’s a great guide for this. Along the way I also got myself a SIPgate number in the US. This involved some more proxy based hoop jumping (and a friend with a US mobile), but gives me confidence that I have a backup to IPkall if it becomes flaky.

I also configured SIP Sorcery so that incoming calls from Google Voice would be routed to my mobile and desk phones (by making use of Ribbit).

Next steps

I still need to get my US mobile registered, and since my PAYG T-Mobile doesn’t offer international roaming I’m going to have to wait until my next trip to get that sorted.

Numbers

After going through this process I now have a ridiculous quantity of telephone numbers – 11 in total. The good news is that I only need to give two out to people that want to contact me – my UK number (which is attached to Skype, and normally forwards into my Ribbit UK service) and my Google Voice US number (which through SIP Sorcery meshes into my existing handsets, desk phones and services).

Conclusion

Whilst I hope that Google Voice will soon launch in the UK and other countries, there’s definitely some use that can be had out of it now for people that do business in the US and/or travel there frequently. It would be brilliant if Google Voice could replace the rat’s nest of Skype, SIP Sorcery, IPkall, SIPgate and Ribbit that I’m presently using and let me have the entry points (numbers) that I need flexibly routed to the end points (handsets) that I have, but I won’t hold my breath on that – especially where multiple countries and billing/regulatory structures are involved.

Notes

[1] Though I should point out here that people who sign up for the service in the US aren’t prevented from using it when they travel, so to that extent the service is as global as the Internet it runs on.

[2] To use this method you just need to enter your Google Voice number as a registered phone. It’s also necessary to set Caller ID (incoming) to ‘Display my Google Voice number’ otherwise Skype won’t be able to authenticate the call origin.

Filed under: howto, technology | 1 Comment

Tags: google, google voice, IPkall, sip, SIP sorcery, SIPgate, skype, SkypeIn, SkypeToGo, UK, US, Voice, voip

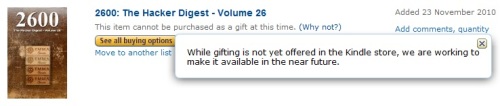

Shortly before digging into the copy of Cognitive Surplus I bought on Google ebooks the other day I read this piece comparing the relative merits of Google, Amazon and Apple’s offerings. One of the areas of the store/reading experience it didn’t touch on was gifting. Since getting my Kindle I don’t really want any more dead tree books, so I converted all of my Amazon wish list items over to Kindle format where available (as there wasn’t any proper DRM free option). Sadly gifting isn’t yet available on Amazon.co.uk, so I see this:

Notice how the ‘why not’ explanation doesn’t really provide any further information?

I’m hopeful that it will be fixed soon (though looks like not in time for Christmas), as gifting is now available in the US:

Whilst I would expect that Google will quickly catch up with this, I have my doubts. It seems that publishers are still trying to maintain a geographic sales model that doesn’t shape up well on a global internet[1]. One of the hurdles being put in the way of buyers is the need to pay from the same geography as the sale. That hurdle works fine when somebody is buying for themselves, but gets smashed when you allow people to buy stuff for each other (even indirectly through gift cards or similar).

Google has been very slow in this area. Google Voice is still stuck in the US, a situation that’s sure to be in part due to tax and payments issues. There’s also no gift card mechanism for Google Checkout (besides the fact that they accept gift cards from the payments card companies). Perhaps until now the gift of Google wasn’t that alluring – who would want a Google Apps subscription for their birthday? The Android marketplace, Chrome marketplace and Google Books surely change that though. The trouble for Google will be how do they keep upstream relationships with the ‘content’ industry in good shape whilst providing what customers want?

[1] Charles Stross has already done an excellent job of explaining Territories, Translations, and Foreign Rights in his Common Misconceptions About Publishing series, so I don’t have to.

Filed under: could_do_better, technology, wibble | Leave a Comment

Tags: amazon, apple, content, DRM, ebook, geography, gift card, gifting, google, Google checkout, iBook, payments, publishing

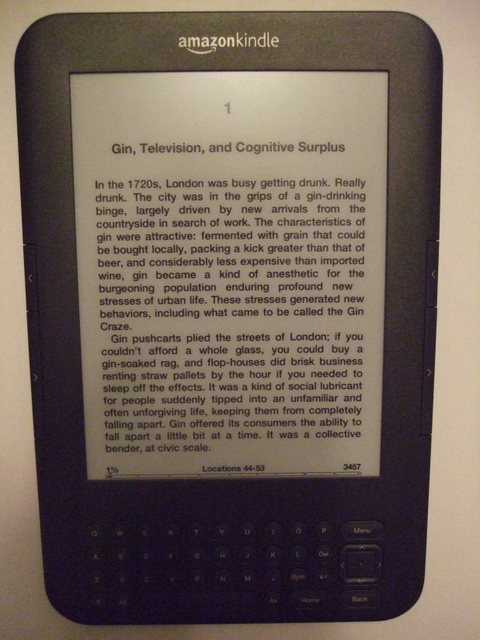

One of the big tech news items today is the launch of the much anticipated Google eBooks. Sadly the service is only available in the US at the moment, so I thought I’d have a poke around and see what the hurdles were.

US Browsing

Browsing from my regular connection at home I could only see the free (out of copyright in the US) books that have been available for some time, along with a message that ‘The latest Google eBooks are not available for sale in your location, yet…Google is working with publishers around the world to let you buy the latest ebooks from top authors. In the meantime, you can still browse millions of free and public domain Google eBooks and read them effortlessly across your devices‘. Of course I’ve heard this song before (or one much like it) with Amazon. After firing up a US based proxy I was able to browse the new store. I put a copy of Clay Shirky’s ‘Cognitive Surplus’ into my basket (after reading JP’s review of it the other day, and being disappointed that I couldn’t get it as an ebook[1]).

Payments

The next hurdle was paying. I got a message that the seller (in this case Penguin) didn’t accept cards from my country. I briefly checked out various virtual credit card and prepay card options, but nothing seemed easy or attractive. The workaround I came up with was using an additional card that’s already on my Amex account, along with a US address (Google Checkout already had the details for my main Amex – and that was the card that it wouldn’t accept). I suspect that this may not work for Visa or Mastercard as they issue additional cards with the same number.

Downloading

I could now read my book online (even using a browser with no proxy), but I wanted it on my Kindle. The first step was to download the book. It seems that Google are using Adobe’s DRM (and supported device ecosystem), so I needed to follow the instructions for those devices[2]. After a few hiccups (which I initially thought were down to having my laptop ‘authorized’ to my work email address, but which now look as if they were just flaky DRM server issues) I had a DRMed ePub file in ‘My Documents\My Digital Editions’.

Removing DRM

I used the Python scripts from this guide to strip the DRM off my ePub file – a pretty painless process.

Converting ePub to mobi

The final step was to convert my ePub file to mobi format so that my Kindle could read it. For this I used Calibre, which looks like an excellent tool with lots of features I’ve not had the time to explore yet.
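For anyone who prefers a command line to the Calibre GUI, Calibre also ships an `ebook-convert` tool that takes an input file followed by an output file. The following is a minimal sketch, assuming that tool is installed and on the PATH; the helper name and the file name are hypothetical, and this is not necessarily how the conversion was done for this post.

```python
from pathlib import Path


def ebook_convert_command(epub_path):
    """Build the argument list for Calibre's ebook-convert CLI.

    The output format is inferred by ebook-convert from the output
    file's extension, so swapping .epub for .mobi is enough here.
    """
    src = Path(epub_path)
    dst = src.with_suffix(".mobi")
    return ["ebook-convert", str(src), str(dst)]


if __name__ == "__main__":
    # Hypothetical file name, for illustration only.
    print(" ".join(ebook_convert_command("cognitive_surplus.epub")))
```

The list can be handed to `subprocess.run` as-is; it only makes sense on a file that no longer carries DRM.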

That’s it :)

I now have my $12.99 copy of ‘Cognitive Surplus’ on my Kindle despite Amazon, Google and Penguin trying to stop me (though Google and Penguin got my money this time, and Amazon only got 2¢).

Notes

[1] Update 8 Dec – It seems that in the last few days a UK Kindle Edition has become available. We can now look at the price arbitrage of £12.99 versus $12.99 (£8.54 [or £10.03 if VAT had been added]).

[2] I had to install Adobe Digital Editions for this part. I already had it on my laptop, but that initially refused to do the right thing with the .acsm file. Installing on my desktop failed in both Chrome (with Flash enabled) and Firefox (where I don’t run a Flash blocker). Thankfully Adobe do have a regular download link.
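The arithmetic behind the price comparison in note [1] can be reproduced in a few lines. This is a sketch under one stated assumption: that the VAT figure used was the 17.5% UK rate, which is the rate that turns £8.54 into the quoted £10.03. The prices themselves come from the note.

```python
from decimal import Decimal, ROUND_HALF_UP

USD_PRICE = Decimal("12.99")        # Google eBooks price paid
GBP_EQUIVALENT = Decimal("8.54")    # sterling equivalent quoted in note [1]
UK_KINDLE_PRICE = Decimal("12.99")  # UK Kindle Edition price, in pounds
VAT_RATE = Decimal("0.175")         # assumption: 17.5% UK VAT


def pennies(amount):
    """Round a sterling amount to the nearest penny."""
    return amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Exchange rate implied by the two quoted figures (USD per GBP).
implied_rate = USD_PRICE / GBP_EQUIVALENT

# What the US price would come to in pounds with VAT added.
gbp_with_vat = pennies(GBP_EQUIVALENT * (1 + VAT_RATE))

# How much more the UK edition costs than the VAT-adjusted US price.
premium = UK_KINDLE_PRICE / gbp_with_vat - 1

if __name__ == "__main__":
    print(f"implied rate: {implied_rate:.4f} USD/GBP")
    print(f"US price with VAT: £{gbp_with_vat}")
    print(f"UK premium: {premium:.1%}")
```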

Filed under: howto, technology | 4 Comments

Tags: Adobe, amazon, Amex, Calibre, convert, Digital Editions, DRM, ebook, epub, google, Google books, kindle, mobi, payments, proxy, UK, US

Region locks – My 2¢

Since I started using Amazon EC2 as a web proxy I’ve found that I’m exploiting it pretty regularly. Every time that I see one of those ‘you can’t access that content from your country’ type messages I have a choice. I can give up and move on, or I can fork out 2¢ to spin up a machine, and get access. 2¢ isn’t a lot of money, so more often than not I pay.

Video

I pay to see video content. Of course my 2¢ goes to Amazon, and not the people who made the video (and don’t want me to watch it outside the US).

File lockers

I pay to get stuff from file lockers (when I’ve already exceeded my quota from my home IP). Of course my 2¢ goes to Amazon, and not the people running the file locker (and is a whole lot less than one of their subscription plans). Oddly it seems that downloading from some of the services is faster down an SSH tunnel from Amazon in the US than it is over my regular broadband connection (WTF?).
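For readers wondering what such a tunnel looks like, here is a minimal sketch that builds an OpenSSH command using dynamic (SOCKS) port forwarding. The choice of `-D` is an assumption about the kind of tunnel, and the host name, user name and port are hypothetical placeholders rather than details from my setup.

```python
def socks_tunnel_command(host, user="ubuntu", local_port=1080, key_file=None):
    """Build an ssh command that opens a local SOCKS proxy through `host`.

    -N  do not run a remote command, just forward traffic
    -D  dynamic application-level port forwarding (a SOCKS proxy)
    -i  identity (private key) file, if one is supplied
    """
    cmd = ["ssh", "-N", "-D", str(local_port)]
    if key_file:
        cmd += ["-i", key_file]
    cmd.append(f"{user}@{host}")
    return cmd


if __name__ == "__main__":
    # Hypothetical instance name; substitute the public DNS name of a running machine.
    print(" ".join(socks_tunnel_command("ec2-host.example.com", key_file="proxy.pem")))
```

With the tunnel up, the browser is pointed at a SOCKS proxy on localhost and the chosen port, and traffic leaves from the remote machine's IP address.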

Amazon 2 : Other services 0

So – living proof that micro-payments work for stuff on the web. What a shame that the payments are going to the wrong place. If only those other service providers had a smart new business model like Amazon’s maybe they’d get my 2¢.

Filed under: could_do_better, media, technology | 2 Comments

Tags: amazon, aws, ec2, lock, micro payments, micropayments, payments, proxy, region, region lock, SSH, tunnel, video

A tale of two clouds

Over the past few weeks I’ve been kicking the tyres on two new(ish) entrants to the IaaS space. Both services are still in beta.

Savvis Virtual Private Datacenter

I first came across this back in June at the Cloud Computing World Forum, and I signed up straight away for a trial. Sadly there was some kind of issue with the first token that they sent out, and it wasn’t until a few weeks ago that this got fixed.

To be fair to Savvis it’s worth pointing out that VPDC isn’t meant to compete head on with the likes of Amazon Web Services (AWS) etc. It’s clearly a bridge product between dedicated hosting and public cloud for those who still have concerns around security and privacy. From a contractual and delivery perspective this makes the whole thing less ‘on demand’ than I’ve become accustomed to.

The main point of contact for the user of VPDC is a Flash application that’s used to design and deploy hardware architectures. I’m a Flash hater, so this was a bad start for me, but as Flash apps go it wasn’t too grim. The experience was somewhat reminiscent of early Sun N1 demos – drag and drop machines, click to configure, wire them together.

There are a few things regarding the firewall config that I could whine about, but at least it has one.

It was when I first hit the deploy button that disappointment really set in. AWS has made me expect (near) instant gratification, and VPDC doesn’t do that. I didn’t time the first deployment (of a single machine ‘essential’ configuration), but I had plenty of time to get on with other things. I did time the second deployment (of a three tier, three machine ‘balanced’ config) and it took over an hour. I guess an hour shouldn’t matter so much when you’re buying by the month, but it just felt wrong.

The next disappointment was the sign in process. Something else that AWS has spoiled me with is key based SSH that just works. VPDC is a bit old school, and emails out machine/usernames and passwords. Quite annoyingly it doesn’t bother to put the FQDN or IP into those emails, so you have to go back to the Flash app to figure those out from machine properties. The emails also say that a password change will be forced, but that isn’t the case.

Once I got this far I had a bare machine running Linux (Windows is also on offer). There isn’t (yet) some menu of middleware and persistence tiers that you can browse. Once again I suppose that the build of such machines from scratch might be a small issue if you’re keeping them for a long time, but Savvis desperately need to offer some choices and automation in this area (and something like CohesiveFT’s ElasticServer would be a great place to start).

Overall the trial served its purpose of illustrating to me the capabilities (and limitations) of the platform. I don’t think I’ll be rushing back with my company chequebook in hand, but I think I get what’s going on here.

Brightbox

I saw some cynical reactions on Twitter when Brightbox first announced their cloud platform as being a UK first (along with some mistaken impressions that AWS had UK based services), but I was intrigued, and signed up for the beta.

Brightbox have not taken the path of least resistance, and just thrown up something based on Eucalyptus (and the AWS APIs). This could be a problem in the long term as APIs settle down into standards, but in the short term it’s quite exciting to be able to try something new.

Brightbox’s background seems to be firmly rooted in the world of Ruby hosting, so it should be no surprise that their command line tools to access their API come as Ruby Gems. I ran into some trouble trying to get things going on my old Ubuntu 9.04 test VM, so I eventually caved and brought up a new 10.10 machine on which the CLI tools installation went without a hitch.

With the CLI in hand the getting started guide had me up and running in no time. It could be just a subjective opinion, but my sense is that the Ruby CLI runs faster than the Java based EC2 equivalents – it probably comes down to the startup times of the various language runtimes. The machine that I got felt much like an EBS backed small instance on EC2, though the storage usage pattern is quite different (no S3 here). I liked the snapshot facilities a lot (it’s not a feature I’ve played with on AWS, so I can’t compare), though I did hit some trouble when I tried to use a snapshot that wasn’t completed. The exciting news on this front is that Hybrid Logic will be putting their beta within the Brightbox beta, offering fine grained snapshots to provide fault tolerance and scalability (check out the webcast demos to see how cool this is).

My one gripe about Brightbox at this stage is the lack of a firewall (besides IPtables on a machine). I feel like things are just a little too vulnerable if left out there on the Internet without something to filter out the lumps – it’s all too easy to configure extra services on a machine that increase the (unintended) vulnerability surface area. On the plus side they use public key authentication for SSH into machines.

Overall I must say that I liked my (so far) brief experience of Brightbox, and I look forward to seeing what pricing looks like and how the service develops.

Filed under: cloud | 1 Comment

Tags: API, aws, Brightbox, cloud, Hybrid Cluster, Hybrid Logic, iaas, Ruby, Savvis, snapshot, VPDC

email like it’s 1995

The old joke

There used to be a joke that people who didn’t ‘get it’ would send an email and then call the recipient up to check if it had been received.

Now the joke’s on us

Now, I am that joke. I think we all are. Email has reached a point where it’s just not reliable enough for business use.

Update 17 Dec – clearly I’m not the only one to notice this. Babbage over at The Economist shares my pain.

Spam

The heart of the problem is of course spam.

False positives

The real trouble though is that the cure is maybe worse than the disease. Our reaction to spam, and the systems that we use to filter it out are destroying the utility of email.

I can think of numerous examples in the last year or so when I’ve emailed somebody and got no reply, only to hear sometimes weeks later that my message got caught in their ‘junk folder’.

Bacn

One of the problems with spam is how to define it. There’s obvious stuff from the peddlers of dodgy drugs, body enhancements and financial scams – the stuff that none of us really want to see in our inboxes. Then there’s the stuff from marketing droids, where you had to give your email address to register for an event, but never really want to hear from them again. Then there are the mailing lists that you chose to subscribe to, but don’t want to read right now (for which the term ‘Bacn‘ was coined – it’s fine for breakfast, but you don’t want to eat it all day).

Caught in the crossfire

The problem is when legitimate mail that’s neither spam nor bacn gets classified as junk. I noticed last week that a perfectly normal email from a colleague had found its way into my Postini quarantine. When I fished it out of limbo I had a look at the headers to see if anything odd was happening. I found this:

Received-SPF: error (google.com: error in processing during lookup of [email protected]: DNS timeout) client-ip=74.125.149.185;

Authentication-Results: mx.google.com; spf=temperror (google.com: error in processing during lookup of [email protected]: DNS timeout) [email protected]

Oops – looks like it’s time for me to set up SPF.

SPF

Sender Policy Framework (SPF) is a system where a domain can nominate approved senders for its email as a means to cut down spam. Sadly Google Apps doesn’t configure this for us (hardly a surprise as they don’t manage our DNS), and nor do they have any tool to help you configure SPF. Luckily there’s a bunch of stuff out there on the web, which led me to the following settings (for our Postini outbound servers, and Blackberry):

@ TXT v=spf1 ip4:207.126.144.0/20 ip4:64.18.0.0/20 ip4:74.125.148.0/22 ptr:blackberry.com ~all

This seemed to work fine, and I started seeing the good news in email headers from colleagues:

Received-SPF: pass (google.com: domain of [email protected] designates 74.125.149.180 as permitted sender) client-ip=74.125.149.180;
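To see why that header reports a pass, the `ip4` mechanisms in the record can be checked against the client IP with Python's standard `ipaddress` module. This is a minimal sketch rather than an SPF evaluator: it only looks at `ip4:` terms and ignores `ptr`, `~all` and everything else a real receiver would process. The function names are my own.

```python
import ipaddress

# The TXT record value from above.
SPF_RECORD = (
    "v=spf1 ip4:207.126.144.0/20 ip4:64.18.0.0/20 "
    "ip4:74.125.148.0/22 ptr:blackberry.com ~all"
)


def ip4_networks(record):
    """Return the networks named by the ip4: mechanisms in an SPF record."""
    return [
        ipaddress.ip_network(term[len("ip4:"):])
        for term in record.split()
        if term.startswith("ip4:")
    ]


def matches_ip4(record, client_ip):
    """True if client_ip falls inside any ip4: network in the record."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in net for net in ip4_networks(record))


if __name__ == "__main__":
    # The two client IPs that appear in the headers quoted in this post.
    for ip in ("74.125.149.180", "74.125.149.185"):
        print(ip, matches_ip4(SPF_RECORD, ip))
```

Both addresses from the quoted headers sit inside 74.125.148.0/22, which is consistent with the earlier error being a DNS timeout rather than a sender outside any sensible range.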

Troubles not over

Sadly SPF doesn’t seem to cut it with Postini, and a few days later another colleague told me that an email I’d sent him had been quarantined (it was a reply to an old thread asking if he’d made any further progress). I could see nothing more that I could do, so I raised a support case with Google/Postini. Here’s their reply in its full horror:

Chris,

This is a known issue and our team is working to correct the issue. The Problem is that you internal mail goes out to the internet and back in through Postini. The Postini system is designed to be very critical on spoofed mail, because a huge percentage of spoofed mail is spam. I have no ETA as to when this will be corrected, but we do have a work around.

We have a feature called IP lock. Turning this feature on will allow you to specify the IP’s you allow to spoof your domain. Once you’ve turned this on and added the Google Apps IP ranges you can add your domain to the organization level approved senders list and not have to worry about unauthorized spoofed mail making it through to your end users.

Here’s a link that provides configuration instructions for the IP Lock feature.

http://www.postini.com/admindoc/secur_iplock.html

and here is are our IP ranges…

64.233.160.0/19

Range:

64.233.160.0 to

64.233.191.255

216.239.32.0/19

Range:

216.239.32.0 to

216.239.63.255

66.249.80.0/20

Range:

66.249.64.0 to

66.249.95.255

72.14.192.0/18

Range:

72.14.192.0 to

72.14.255.255

209.85.128.0/17

Range:

209.85.128.0 to

209.85.255.255

66.102.0.0/20

Range:

66.102.0.0 to

66.102.15.255

74.125.0.0/16

Range:

74.125.0.0 to

74.125.255.255

64.18.0.0/20

Range:

64.18.0.0 to 64.18.15.255

207.126.144.0/20

Range:

207.126.144.0 – 207.126.159.255

Regards,

Redacted

Technical Support Engineer III

Wow – how incredibly unhelpful is that?

Postini could have a system that works (and that respects SPF showing that mail has originated from their own servers) – but they don’t.

The support engineer could have configured IP Locks for me – but he didn’t.

The support engineer could have provided me with the script that I needed to run – but he didn’t.
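As an aside, the CIDR blocks in that reply can be cross-checked against the ranges printed beneath them using Python's standard `ipaddress` module. The sketch below simply copies the figures from the email; the helper name is my own. One entry does not line up: 66.249.80.0/20 starts at 66.249.80.0, whereas the range quoted for it starts at 66.249.64.0.

```python
import ipaddress

# (CIDR block, stated first address, stated last address), as quoted in the email.
QUOTED = [
    ("64.233.160.0/19", "64.233.160.0", "64.233.191.255"),
    ("216.239.32.0/19", "216.239.32.0", "216.239.63.255"),
    ("66.249.80.0/20", "66.249.64.0", "66.249.95.255"),
    ("72.14.192.0/18", "72.14.192.0", "72.14.255.255"),
    ("209.85.128.0/17", "209.85.128.0", "209.85.255.255"),
    ("66.102.0.0/20", "66.102.0.0", "66.102.15.255"),
    ("74.125.0.0/16", "74.125.0.0", "74.125.255.255"),
    ("64.18.0.0/20", "64.18.0.0", "64.18.15.255"),
    ("207.126.144.0/20", "207.126.144.0", "207.126.159.255"),
]


def mismatches(rows):
    """Return entries whose stated range differs from what the CIDR block covers."""
    bad = []
    for cidr, first, last in rows:
        net = ipaddress.ip_network(cidr)
        actual = (str(net.network_address), str(net.broadcast_address))
        if actual != (first, last):
            bad.append((cidr, (first, last), actual))
    return bad


if __name__ == "__main__":
    for cidr, stated, actual in mismatches(QUOTED):
        print(f"{cidr}: stated {stated[0]} to {stated[1]}, covers {actual[0]} to {actual[1]}")
```

Which of the two figures Google intended is not something the email settles, so the batch script below sticks with the CIDR block as written.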

So… off to the documentation, and a bit of trial and error to discover that I needed to run the following batch script in the Postini services console (Orgs and Users > Batch):

# add Postini IPs

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:64.233.160.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:216.239.32.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:66.249.80.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:72.14.192.0/18

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:209.85.128.0/17

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:66.102.0.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:74.125.0.0/16

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:64.18.0.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:207.126.144.0/20

# add BlackBerry IPs

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:206.51.26.0/24

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:193.109.81.0/24

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:204.187.87.0/24

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:216.9.240.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:206.53.144.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:67.223.64.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:93.186.16.0/20

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:68.171.224.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:74.82.64.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:173.247.32.0/19

addallowedip [capitalscf.com] Account Administrators, capitalscf.com:178.239.80.0/20

# show what happened

showallowedips [capitalscf.com] Account Administrators

Note how complex the OrgName is compared to the examples in the documentation.
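Typing out twenty near-identical lines invites mistakes, so a script like the one above could be generated instead. This is a minimal sketch: the `addallowedip` and `showallowedips` lines are copied in form from my batch script rather than checked against the Postini documentation, the function name is hypothetical, and only a couple of ranges are shown.

```python
import ipaddress

ORG = "[capitalscf.com] Account Administrators"  # OrgName as used in the script above
DOMAIN = "capitalscf.com"


def batch_lines(org, domain, labelled_ranges):
    """Build batch script lines in the same shape as the hand-written script."""
    lines = []
    for label, cidrs in labelled_ranges:
        lines.append(f"# add {label} IPs")
        for cidr in cidrs:
            # Raises ValueError on a malformed block, before it reaches the console.
            ipaddress.ip_network(cidr)
            lines.append(f"addallowedip {org}, {domain}:{cidr}")
    lines.append("# show what happened")
    lines.append(f"showallowedips {org}")
    return lines


if __name__ == "__main__":
    ranges = [
        ("Postini", ["64.18.0.0/20", "207.126.144.0/20"]),
        ("BlackBerry", ["206.51.26.0/24"]),
    ]
    print("\n".join(batch_lines(ORG, DOMAIN, ranges)))
```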

It’s also completely unclear to me whether IP Lock should be used in combination with having my domain as an ‘Approved Sender’ or not? For the time being I do (I can always tighten things up if a flood of spam ensues).

Fix this Google

I only use Postini for its ability to add footers with a disclaimer, and this whole thing has me wondering if it’s more trouble than it’s worth – after all the anti-spam in regular gmail is pretty good (and it’s a question for another day why that functionality isn’t better integrated into Postini quarantine – still, they’ve only had 3 years – how hard can it be?).

Or will Facebook fix it for us all?

There has been much fuss in the last few weeks about Facebook launching an integrated email/messaging service. I personally can think of few things I’d less like to do than use Facebook more. I don’t see them as a business service, and I’ve yet to hear anything about how they will deal with the spam problem. But many others seem to think what they’re doing is the shape of things to come – so I could be wrong.

[update 22 Nov] Google/Postini have now been in touch to say that I was apparently the victim of ‘a recent incident that we have since resolved’. PIR_11_Nov_16_Spam_Quarantine. This leaves me wondering how often this sort of thing happens and I don’t notice, and why the first support engineer went straight down the IP lock route?

[update 22 Nov #2] Looks like I missed a bunch of BlackBerry IP ranges first time around – one of the inherent problems with using such a fragile approach. The definitive list is here. At this stage I’m quite tempted to turn IP Lock off given that Google have come clean about their incident.

Filed under: could_do_better, grumble, howto, software | 7 Comments

Tags: bacn, batch, blackberry, business edition, DNS, email, fail, false positive, GABE, GAPE, gapps, google, Google Apps, IP Lock, Postini, premier edition, quarantine, spam, SPF, support

My friend Randy posted a few days ago on Grid, Cloud, HPC … What’s the Diff?. I started to make a comment on the blog, but it was getting too long so I moved it here.

Randy does a good job of pinning down both performance and scalability, but in my experience productivity trumps both. This is another way of saying that there are sometimes smarter ways of reaching an outcome than brute force. There’s a DARPA initiative spun up around this – High Productivity Computer Systems, which I think came about when somebody looked at what Moore’s law implied for the energy and cooling characteristics of a Humvee full of C4I kit.

A crucial point when considering productivity is to look at the overall system (which can be a lot more than what’s in the data centre). Whilst it may be possible to squeeze the work done by hundreds of commodity machines onto a single FPGA that has implications for development and maintenance time and effort. In a banking environment where the quants that develop this stuff are far from cheap it may actually be worth throwing a few $m at servers and electricity rather than impacting upon developer productivity.

I’d argue that the lines between message passing interface (MPI) workloads and embarrassingly parallel problems (EPP) are blurrier than Randy makes out. It’s all about the ratio of compute to data, and dependency has a lot of importance – if the outcome of the next calculation depends on the results of an earlier one then you can end up shovelling a lot of data around (and high speed, low latency interconnect might be vital). On the other hand if the results of the next calculation are independent of previous results then there’s less data to be wrangled. Monte Carlo simulation, which is used a lot in finance, tends to have less data dependency than other types of algorithm.
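The low data dependency of Monte Carlo is easy to show with a toy example. The sketch below estimates pi rather than pricing anything financial, which is purely a stand-in of my choosing: each task needs only a seed and a sample count as input and returns a single integer, and no task looks at another task's result. The function names are hypothetical.

```python
import random


def hits_in_quarter_circle(seed, samples):
    """One independent task: input is two numbers, output is one number."""
    rng = random.Random(seed)  # private generator, so tasks share no state
    hits = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(seeds, samples_per_task):
    """Combine the task results; this is the only step that sees them all."""
    seeds = list(seeds)
    total_hits = sum(hits_in_quarter_circle(s, samples_per_task) for s in seeds)
    return 4.0 * total_hits / (len(seeds) * samples_per_task)


if __name__ == "__main__":
    print(estimate_pi(range(8), 20_000))
```

The tasks run in a simple loop here, but because each one is self-contained they could be handed to separate machines in any order without changing the answer.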

Most ‘EPP’ are low in data dependency (usually with just initial input variables and an output result), and so the systems are designed to be stateless (in an effort to keep them simple). This causes a duty cycle effect where some time is spent loading data versus the time spent working on the data to produce a result (and if the result set is large there may also be dead time spent moving that around). Duty cycles can be improved in many cases by moving to a more stateful architecture, where input data is passed by reference rather than value and cached (so if some input data is there already from a previous calculation it can be reused immediately rather than hauled across the network). This is what ‘data grid’ is all about.
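To put rough numbers on the duty cycle idea, here is a minimal sketch. It treats the duty cycle as the fraction of a task's wall-clock time spent computing, and models the stateful approach as a worker-side cache keyed by a reference to the input data. The timings, names and the cache class are all illustrative assumptions, not measurements from any real grid.

```python
def duty_cycle(load_s, compute_s, unload_s=0.0):
    """Fraction of total task time spent doing useful computation."""
    total = load_s + compute_s + unload_s
    return compute_s / total if total else 0.0


class InputCache:
    """Worker-side cache: inputs arrive as a key and are fetched only on a miss."""

    def __init__(self, fetch):
        self._fetch = fetch  # function that hauls the data across the network
        self._store = {}
        self.fetches = 0     # how many times the network was actually used

    def get(self, key):
        if key not in self._store:
            self._store[key] = self._fetch(key)
            self.fetches += 1
        return self._store[key]


if __name__ == "__main__":
    # Hypothetical timings: 2s to load inputs, 8s of calculation.
    print("stateless:", duty_cycle(load_s=2.0, compute_s=8.0))
    # Same task when the inputs are already cached from a previous calculation.
    print("cached:   ", duty_cycle(load_s=0.0, compute_s=8.0))

    cache = InputCache(fetch=lambda key: f"market data for {key}")
    cache.get("2010-11-19")
    cache.get("2010-11-19")  # second request is served locally
    print("network fetches:", cache.fetches)
```

The gain depends entirely on how often consecutive tasks share inputs; with no reuse the cache only adds bookkeeping.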

Getting back to the question of ‘grid’ versus ‘cloud’, I agree with Randy that there’s a big overlap, and it’s encouraging to see services like Amazon’s Cluster Compute Instance (and its new cousin the Cluster GPU instance). I will however return to a point I’ve made before – Cloud works despite the network, not because of it. The ‘thin straw’ between any data and the cloud capability that may work on it remains painful – making the duty cycle worse than it might otherwise be. For that reason I expect that even those with ‘EPP’ type problems will have to think more carefully about the question of stateful versus stateless than they may have done before. It matters a lot for the overall productivity of many of these systems.

Filed under: cloud, technology | 1 Comment

Tags: cloud, grid, MPI, parallel processing, performance, productivity, scalability

It seems that our politicians are easily fooled by the telcos and their regulatory capture. Just yesterday the UK’s Culture minister Ed Vaizey announced his support for a ‘two-speed‘ internet. The idea is superficially attractive – content providers pay a premium to have their stuff delivered faster, and the consumer benefits from improved service. It’s like the company you buy petrol from also paying your road tolls.

The problem – there is no faster. There is only slower.

This isn’t about BT etc. building the online equivalent of the M6 Toll. This is about BT etc. building the online equivalent of the M4 bus lane.

For sure it would be nice if somebody was building the extra physical infrastructure to bring me a faster internet. But that’s not what’s happening here. The UK’s ambitions are still set desperately low at providing 2Mbps services for all, and now our politicians want to allow the open part of that to be slowed down even more.

Let’s also figure out who pays, and for what… ‘Heavy bandwidth’ services (anything that distributes video) are singled out as ‘most likely to be hit with higher charges’. These services already pay for big fat pipes, and it’s fair to ask why they should pay again. It’s also fair to ask who does the paying. With YouTube and ITV.com charges could be passed back to advertisers, but I fail to see the win here. With BBC iPlayer it would seem that there’s an expectation that a part of the TV licence fee should be used as a telco subsidy (having failed to get a ‘broadband tax’ into the last finance bill). Nice money if you can get it. I wonder how much BT spends on lobbying, and how many fibre to the home roll-outs that would buy?

Filed under: technology | 2 Comments

Tags: broadband, net neutrality, network, neutrality, telco